Deploying Apache Kyuubi and Integrating It with Hive

1. Background

The customer relies heavily on Spark SQL, but running Spark Thrift Server caused a variety of problems, so we chose Kyuubi to replace Spark Thrift Server.

2. Download the package

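Download URL: https://dlcdn.apache.org/kyuubi/kyuubi-1.8.0/apache-kyuubi-1.8.0-bin.tgz. The dlcdn mirror only carries current releases. Once 1.8.0 has been superseded, download it from the Apache archive (archive.apache.org) instead.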

    cd /opt/
    wget https://dlcdn.apache.org/kyuubi/kyuubi-1.8.0/apache-kyuubi-1.8.0-bin.tgz
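
Before unpacking, the tarball can be checked against its published checksum. Apache release directories normally provide a `.sha512` file next to each tarball, but the layout of that file differs between projects. For that reason, this sketch takes the expected hex digest as an argument instead of parsing the file. `verify_sha512` is a hypothetical helper, not part of Kyuubi.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Kyuubi): compare a file's SHA-512 digest
# with an expected hex digest, e.g. one copied from the release's .sha512 file.
# Returns 0 on a match and 1 otherwise.
verify_sha512() {
  local file="$1" expected="$2" actual
  # sha512sum prints "<digest>  <file>"; keep only the digest
  actual=$(sha512sum "$file" | awk '{print $1}')
  # Normalize the expected value: drop whitespace, lowercase the hex digits
  expected=$(printf '%s' "$expected" | tr -d '[:space:]' | tr '[:upper:]' '[:lower:]')
  if [ -n "$actual" ] && [ "$actual" = "$expected" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file" >&2
    return 1
  fi
}
```

Usage: `verify_sha512 apache-kyuubi-1.8.0-bin.tgz "<digest from the .sha512 file>"`.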

3. Installation and configuration

1. Download and extract

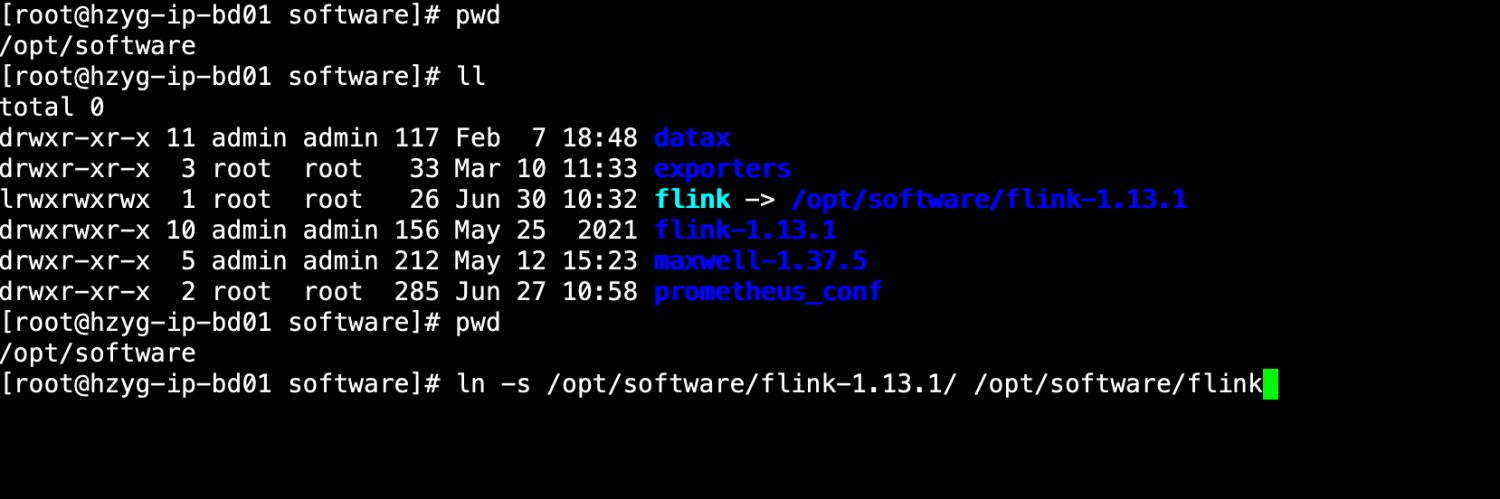

    cd /opt
    tar -xzvf apache-kyuubi-1.8.0-bin.tgz
    ln -s apache-kyuubi-1.8.0-bin kyuubi
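
The extract-and-link steps can be wrapped in a small helper that is safe to re-run, for example during a later upgrade. This is a sketch: `install_kyuubi` is a hypothetical name. It assumes the archive has a single top-level directory, as the Kyuubi binary tarball does (`apache-kyuubi-1.8.0-bin/`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: unpack a Kyuubi binary tarball under a prefix and point
# a stable "kyuubi" symlink at it, so paths like /opt/kyuubi/conf never change.
install_kyuubi() {
  local tarball="$1" prefix="${2:-/opt}" dir
  # Top-level directory inside the archive, e.g. apache-kyuubi-1.8.0-bin
  dir=$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)
  if [ -z "$dir" ]; then
    echo "empty or unreadable archive: $tarball" >&2
    return 1
  fi
  tar -xzf "$tarball" -C "$prefix" || return 1
  # -n: replace an existing link instead of creating a new link inside its target
  ln -sfn "$dir" "$prefix/kyuubi"
  echo "$prefix/kyuubi -> $dir"
}
```

Usage: `install_kyuubi /opt/apache-kyuubi-1.8.0-bin.tgz /opt`. The `-n` flag matters on a second run. Without it, `ln -sf` would follow the existing `kyuubi` link and create a new link inside the old release directory.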

2. Configuration

(1) Configure kyuubi-env.sh

    cd /opt/kyuubi/conf
    cp kyuubi-env.sh.template kyuubi-env.sh

Edit kyuubi-env.sh and add the following:

    vi kyuubi-env.sh

    export JAVA_HOME=/opt/java
    export SPARK_HOME=/opt/spark
    export FLINK_HOME=/opt/flink
    export HIVE_HOME=/opt/hive
    export HADOOP_CONF_DIR=/opt/hadoop/etc/hadoop
    export FLINK_HADOOP_CLASSPATH=/opt/hadoop/share/hadoop/client/hadoop-client-runtime-3.2.4.jar:/opt/hadoop/share/hadoop/client/hadoop-client-api-3.2.4.jar
    # If Kerberos is enabled, give Kyuubi its own credential cache file so that it
    # does not collide with other processes using the same default cache name
    export KRB5CCNAME=/tmp/krb5cc_kyuubi
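
A wrong path in kyuubi-env.sh typically only surfaces later, when an engine fails to launch. The hypothetical helper below sources the file in a subshell and reports any configured directory, or any jar listed in FLINK_HADOOP_CLASSPATH, that does not exist. It skips unset variables, because FLINK_HOME and HIVE_HOME are only needed for the Flink and Hive engines.

```shell
#!/usr/bin/env bash
# Hypothetical helper (bash only): source kyuubi-env.sh in a subshell and report
# missing directories and missing FLINK_HADOOP_CLASSPATH jars. Returns 1 if any
# configured path is missing.
check_kyuubi_env() {
  local env_file="$1"
  (
    . "$env_file" || exit 1
    missing=0
    for var in JAVA_HOME SPARK_HOME FLINK_HOME HIVE_HOME HADOOP_CONF_DIR; do
      dir="${!var:-}"   # indirect expansion: value of the variable named $var
      if [ -n "$dir" ] && [ ! -d "$dir" ]; then
        echo "$var: directory not found: $dir"
        missing=1
      fi
    done
    # FLINK_HADOOP_CLASSPATH is assumed to be a colon-separated list of jar files
    if [ -n "${FLINK_HADOOP_CLASSPATH:-}" ]; then
      IFS=':' read -r -a jars <<< "$FLINK_HADOOP_CLASSPATH"
      for jar in "${jars[@]}"; do
        [ -n "$jar" ] || continue
        if [ ! -f "$jar" ]; then
          echo "FLINK_HADOOP_CLASSPATH: file not found: $jar"
          missing=1
        fi
      done
    fi
    exit "$missing"
  )
}
```

Usage: `check_kyuubi_env /opt/kyuubi/conf/kyuubi-env.sh`.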

(2) Configure kyuubi-defaults.conf

    cd /opt/kyuubi/conf
    cp kyuubi-defaults.conf.template kyuubi-defaults.conf

Edit kyuubi-defaults.conf and add the following:

    vim kyuubi-defaults.conf

    # Frontend (client-facing) port
    kyuubi.frontend.bind.port 10009
    # Use Spark SQL as the engine
    kyuubi.engine.type SPARK_SQL
    # High availability through ZooKeeper
    kyuubi.ha.addresses hd1.dtstack.com:2181,hd2.dtstack.com:2181,hd3.dtstack.com:2181
    kyuubi.ha.client.class org.apache.kyuubi.ha.client.zookeeper.ZookeeperDiscoveryClient
    kyuubi.ha.namespace kyuubi
    # Authentication settings for a Kerberos-enabled ZooKeeper
    kyuubi.ha.zookeeper.acl.enabled true
    kyuubi.ha.zookeeper.auth.keytab /etc/security/keytab/kyuubi.keytab
    kyuubi.ha.zookeeper.auth.principal kyuubi/hd1.dtstack.com@DTSTACK.COM
    kyuubi.ha.zookeeper.auth.type KERBEROS
    # Kerberos settings for Kyuubi itself
    kyuubi.authentication KERBEROS
    kyuubi.kinit.keytab /etc/security/keytab/hive.keytab
    kyuubi.kinit.principal hive/hd1.dtstack.com@DTSTACK.COM
    # Spark settings
    spark.master=yarn
    # YARN queue to submit to (optional)
    spark.yarn.queue=default
    # Iceberg integration (also add the Iceberg jars to Spark's jars directory)
    spark.sql.catalog.spark_catalog=org.apache.iceberg.spark.SparkSessionCatalog
    spark.sql.catalog.spark_catalog.type=hive
    spark.sql.catalog.spark_catalog.uri=thrift://hd1.dtstack.com:9083
    spark.sql.catalog.spark_catalog.warehouse=/user/hive/warehouse
    # Hudi integration (Hudi 0.13.1). Hudi also sets spark_catalog, so enable these
    # lines instead of the Iceberg catalog settings above, not in addition to them
    #spark.serializer=org.apache.spark.serializer.KryoSerializer
    #spark.sql.catalog.spark_catalog=org.apache.spark.sql.hudi.catalog.HoodieCatalog
    # Session extensions: Iceberg (or Hudi) plus the Ranger authorization plugin.
    # spark.sql.extensions takes a comma-separated list; if the key is repeated,
    # only its last occurrence takes effect
    spark.sql.extensions=org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions,org.apache.kyuubi.plugin.spark.authz.ranger.RangerSparkExtension
    #spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension,org.apache.kyuubi.plugin.spark.authz.ranger.RangerSparkExtension
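
A key that appears more than once in kyuubi-defaults.conf does not raise an error. The file is read like a Java properties file, so the last value silently wins. This is easy to hit when the Iceberg, Hudi and Ranger snippets are pasted together, because all of them set `spark.sql.extensions` and `spark.sql.catalog.spark_catalog`. The hypothetical helper below lists repeated keys. As a simplifying assumption, it treats the key as everything before the first space, tab, `=` or `:`, and it skips blank lines and lines starting with `#` or `!`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print keys that are set more than once in a
# properties-style file such as kyuubi-defaults.conf. Returns 1 if any exist.
find_duplicate_keys() {
  local conf="$1" dups
  dups=$(awk '
    /^[ \t]*$/    { next }   # blank line
    /^[ \t]*[#!]/ { next }   # comment line
    {
      key = $0
      sub(/^[ \t]+/, "", key)        # strip leading whitespace
      sub(/[ \t=:].*$/, "", key)     # cut at the first separator
      count[key]++
    }
    END { for (k in count) if (count[k] > 1) print k }
  ' "$conf" | sort)
  [ -z "$dups" ] && return 0
  printf '%s\n' "$dups"
  return 1
}
```

Usage: `find_duplicate_keys /opt/kyuubi/conf/kyuubi-defaults.conf`. Any key it prints should be merged into a single line. For `spark.sql.extensions`, that means one comma-separated list.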

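When configuring Hudi, put kyuubi-spark-sql-engine_2.12-1.8.0.jar and hudi-spark3.3-bundle_2.12-0.13.1.jar into the `$SPARK_HOME/jars` directory. The Hudi bundle is built per Spark minor version, so its name must match your Spark version (3.3 here).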

Note that `$SPARK_HOME/conf` must contain hive-site.xml so that the Spark engine can reach the Hive Metastore.
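
The two prerequisites above can be checked before starting Kyuubi: the jars in `$SPARK_HOME/jars` and hive-site.xml in `$SPARK_HOME/conf`. This is a sketch. `check_spark_home` is a hypothetical helper, and the jar names are passed in as arguments so the same check works for other versions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that SPARK_HOME has conf/hive-site.xml and every
# jar named on the command line under jars/. Returns 1 if anything is missing.
check_spark_home() {
  local spark_home="$1" missing=0 jar
  shift
  if [ ! -f "$spark_home/conf/hive-site.xml" ]; then
    echo "missing: $spark_home/conf/hive-site.xml"
    missing=1
  fi
  # Remaining arguments are jar file names expected in $SPARK_HOME/jars
  for jar in "$@"; do
    if [ ! -f "$spark_home/jars/$jar" ]; then
      echo "missing: $spark_home/jars/$jar"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `check_spark_home /opt/spark kyuubi-spark-sql-engine_2.12-1.8.0.jar hudi-spark3.3-bundle_2.12-0.13.1.jar`.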

4. Start Kyuubi

    cd /opt/kyuubi
    ./bin/kyuubi start

Check that the port is listening:

    ss -tunlp | grep 10009
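
`ss` only inspects sockets on the local machine. To check reachability from another host, or to wait in a script until the frontend port opens after `bin/kyuubi start`, a small polling loop can be used. This sketch relies on bash's `/dev/tcp` redirection, so it assumes bash and not `sh`. `wait_for_port` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper (bash only): try a TCP connect to host:port once per
# second, up to the given number of attempts. Note that a single attempt
# against an unreachable host can block for the OS connect timeout.
wait_for_port() {
  local host="$1" port="$2" attempts="${3:-60}" i
  for ((i = 0; i < attempts; i++)); do
    # Opening /dev/tcp/HOST/PORT performs a TCP connect; the subshell closes it
    if (: <"/dev/tcp/$host/$port") 2>/dev/null; then
      echo "$host:$port is accepting connections"
      return 0
    fi
    sleep 1
  done
  echo "gave up after $attempts attempts waiting for $host:$port" >&2
  return 1
}
```

Usage: `wait_for_port hd1.dtstack.com 10009 60`.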

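Connect with beeline. The command below connects directly to a single Kyuubi instance rather than through ZooKeeper discovery. When Kerberos authentication is enabled, run kinit first and append `;principal=<Kyuubi server principal>` to the JDBC URL.

    bin/beeline -u 'jdbc:hive2://172.16.38.218:10009/' -n testuser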
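
The beeline example connects to one fixed host:port, so it does not benefit from the ZooKeeper-based high availability configured in kyuubi-defaults.conf. For HA, clients point at the ZooKeeper quorum and namespace instead, using the Hive JDBC parameters `serviceDiscoveryMode=zooKeeper` and `zooKeeperNamespace`. The hypothetical helper below only assembles that URL. The optional principal argument assumes the Kerberos setup from this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a Hive JDBC URL that discovers a Kyuubi server
# through ZooKeeper instead of pointing at a single host:port.
#   $1  ZooKeeper quorum (same value as kyuubi.ha.addresses)
#   $2  namespace (same value as kyuubi.ha.namespace)
#   $3  optional Kerberos principal of the Kyuubi server (kyuubi.kinit.principal)
kyuubi_ha_url() {
  local quorum="$1" namespace="$2" principal="${3:-}"
  local url="jdbc:hive2://${quorum}/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=${namespace}"
  if [ -n "$principal" ]; then
    url="${url};principal=${principal}"
  fi
  printf '%s\n' "$url"
}
```

Usage: `bin/beeline -u "$(kyuubi_ha_url hd1.dtstack.com:2181,hd2.dtstack.com:2181,hd3.dtstack.com:2181 kyuubi)" -n testuser`. With Kerberos enabled, run kinit first and pass the server principal as the third argument.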