Clickhouse数据复制的原理与实践

说明

在clickhouse中,如果我们想实现数据多副本存储,以提高数据可用率和查询性能,可以使用复制表。支持数据复制的表引擎包括:

ReplicatedMergeTree

ReplicatedSummingMergeTree

ReplicatedReplacingMergeTree

ReplicatedAggregatingMergeTree

ReplicatedCollapsingMergeTree

ReplicatedVersionedCollapsingMergeTree

ReplicatedGraphiteMergeTree

使用复制表的前置条件是clickhouse配置了zookeeper。需要在配置文件中配置,如:

<zookeeper> <node> <host>example1</host> <port>2181</port> </node> <node> <host>example2</host> <port>2181</port> </node> <node> <host>example3</host> <port>2181</port> </node> </zookeeper>

在clickhouse中,以表为单位进行复制。不同的表可以配置不同的复制策略。

需要注意的是,clickhouse不会复制CREATE, DROP, ATTACH, DETACH和RENAME这些操作。

而通过alter table给表增加字段的操作会进行复制。

创建复制表

CREATE TABLE table_name ( ... )

ENGINE = ReplicatedMergeTree('path_in_zookeeper', 'replica_name')

...创建复制表需要指定两个关键参数:

path_in_zookeeper: zookeeper中的路径,同一个表的多个副本,该参数必须一样。

replica_name: 多个副本需要配置不同的replica_name。

一般在建表时,我们会使用{shard}, {replica}等宏变量:

create table rep_table(id int, val String)

engine ReplicatedMergeTree(

'/clickhouse/tables/{shard_id}/rep/rep_table',

'{replica}'

) order by id;上面例子中,{shard_id}, {replica}都是在macros中定义的宏,我们以在系统表system.macros中查看当前实例的宏定义。

## 节点ck01 ck01 :) select * from system.macros; SELECT * FROM system.macros Query id: a85a2f99-e2dd-4ba4-9b5e-519e7b5c9f40 ┌─macro────┬─substitution───┐ │ cluster │ cluster-zero │ │ replica │ 172.16.121.248 │ │ shard_id │ 01 │ └──────────┴────────────────┘ 4 rows in set. Elapsed: 0.001 sec. ## 节点ck02 ck02 :) select * from system.macros; SELECT * FROM system.macros Query id: a11a1a07-0757-414a-954a-dd716d0cda3d ┌─macro────┬─substitution──┐ │ cluster │ cluster-zero │ │ replica │ 172.16.121.48 │ │ shard_id │ 01 │ └──────────┴───────────────┘ 4 rows in set. Elapsed: 0.002 sec.

有几点需要注意:

1、如果replica, shard_id等宏定义在建表之后发生了变化,则可能会导致相关的表出现异常。

## ck01中将shard_id定义修改为02, 重启clickhouse后,再写入数据 ck01 :) insert into rep_table values(2, 'two', 'xx'); INSERT INTO rep_table FORMAT Values Query id: 882aa305-a7a7-48d4-b27e-d9564c046693 0 rows in set. Elapsed: 0.004 sec. Received exception from server (version 22.6.3): Code: 242. DB::Exception: Received from localhost:9000. DB::Exception: Table is in readonly mode (replica path: /clickhouse/tables/02/rep/rep_table/replicas/172.16.121.248). (TABLE_IS_READ_ONLY)

由于在zookeeper中并不存在/clickhouse/tables/02/rep/rep_table这个路径,数据无法写入。在clickhouse的启动日志中,可以看到相关的信息:

2022.12.20 03:42:42.505570 [ 177009 ] {} <Warning> rep.rep_table (01b4ad06-4d45-4451-808b-8403e8b1b6c8):

No metadata in ZooKeeper for /clickhouse/tables/02/rep/rep_table: table will be in readonly mode.2、复制表的多个副本表结构必须一致

如果建表时表结构不一致,则无法创建表:

ck01 :) create table rep_table(id int, val String, id2 int)

engine ReplicatedMergeTree('/clickhouse/tables/{shard_id}/rep/rep_table', '{replica}')

order by id;

CREATE TABLE rep_table

(

`id` int,

`val` String,

`id2` int

)

ENGINE = ReplicatedMergeTree('/clickhouse/tables/{shard_id}/rep/rep_table', '{replica}')

ORDER BY id

Query id: 8aa10add-590a-4129-9420-a625edd9d5e1

0 rows in set. Elapsed: 0.240 sec.

Received exception from server (version 22.6.3):

Code: 122. DB::Exception: Received from localhost:9000. DB::Exception: Table columns structure in ZooKeeper is different from local table structure. Local columns:

columns format version: 1

3 columns:

`id` Int32

`val` String

`id2` Int32

Zookeeper columns:

columns format version: 1

2 columns:

`id` Int32

`val` String

. (INCOMPATIBLE_COLUMNS)3、多个副本的replica需要唯一

如果建表时,在zookeeper中已经存在对应replica的路径,则无法创建成功:

ck02 :) create table rep_table2(id int, val String)

engine ReplicatedMergeTree('/clickhouse/tables/{shard_id}/rep/rep_table', '{replica}')

order by id

;

CREATE TABLE rep_table2

(

`id` int,

`val` String

)

ENGINE = ReplicatedMergeTree('/clickhouse/tables/{shard_id}/rep/rep_table', '{replica}')

ORDER BY id

Query id: 6231b6f8-3421-4c5f-90b4-d13e1cdb6463

0 rows in set. Elapsed: 0.750 sec.

Received exception from server (version 22.6.3):

Code: 253. DB::Exception: Received from localhost:9000. DB::Exception:

Replica /clickhouse/tables/01/rep/rep_table/replicas/172.16.121.48 already exists. (REPLICA_IS_ALREADY_EXIST)复制表在zookeeper中存了哪些信息

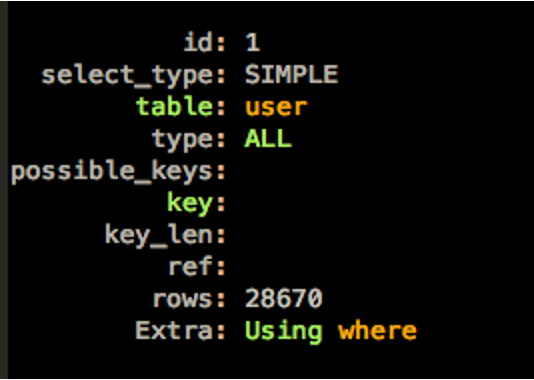

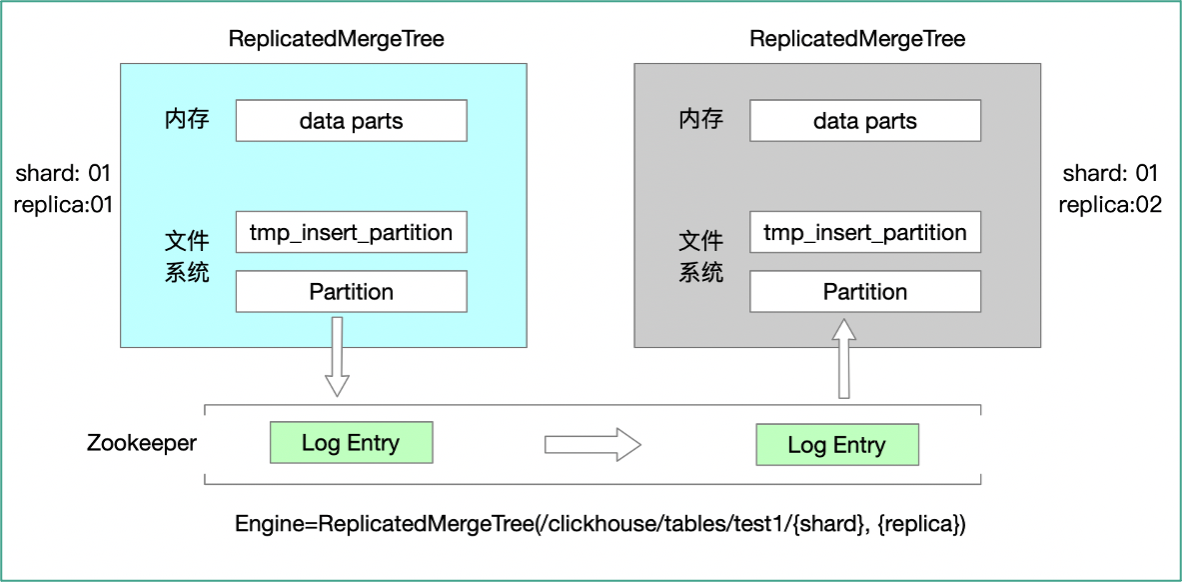

zookeeper在clickhouse的数据复制中起着关键作用。

如上图所示,对复制表的操作,会在zookeeper中记录日志信息,副本通过记录在zookeeper中的信息,实现数据复制。

如下操作都会在zookeeper中记录日志:

insert

merge(optimize table)

alter table attach/detach partition/part

alter table update/delete

alter table add column

复制表zookeeper节点内容

通过zookeeper客户端,或者使用系统表system.zookeeper,都可以查看复制表zookeeper对应节点中存储了哪些信息

ck01 :) select name from system.zookeeper where path='/clickhouse/tables/01/rep/rep_table'; SELECT name FROM system.zookeeper WHERE path = '/clickhouse/tables/01/rep/rep_table' Query id: 59cb84f5-87ad-4ec4-8fd6-974dbce358a1 ┌─name───────────────────────┐ │ alter_partition_version │ │ metadata │ │ temp │ │ table_shared_id │ │ log │ │ leader_election │ │ columns │ │ blocks │ │ nonincrement_block_numbers │ │ replicas │ │ quorum │ │ pinned_part_uuids │ │ block_numbers │ │ mutations │ │ zero_copy_s3 │ │ zero_copy_hdfs │ │ part_moves_shard │

metadata:表结构信息

log: 数据复制关键信息。log节点下,每一条日志都对应着对表的一个动作。

replicas:每一个副本会在replicas下有一个节点。

mutations:对表的mutation操作(如alter table update/delete)

log节点信息

ck02 :) select name, value from system.zookeeper where path='/clickhouse/tables/01/rep/rep_table/log' order by name\G SELECT name, value FROM system.zookeeper WHERE path = '/clickhouse/tables/01/rep/rep_table/log' ORDER BY name ASC Query id: 82f00616-9c18-4bc6-a107-189ac4a67aa1 -- 对应一个mutation操作的日志 Row 2: ────── name: log-0000000008 value: format version: 4 create_time: 2022-12-20 06:06:11 source replica: 172.16.121.248 block_id: mutate all_1_1_0_2 to all_1_1_0_3 -- merge 操作的日志 Row 3: ────── name: log-0000000009 value: format version: 4 create_time: 2022-12-20 06:06:20 source replica: 172.16.121.248 block_id: merge all_0_0_0_3 all_1_1_0_3 into all_0_1_1_3 deduplicate: 0 part_type: Compact -- alter table的日志 Row 8: ────── name: log-0000000014 value: format version: 4 create_time: 2022-12-20 06:08:14 source replica: 172.16.121.248 block_id: alter alter_version 6 have_mutation 1 columns_str_size: 61 columns format version: 1 2 columns: `id` Int32 `val` String metadata_str_size: 192 metadata format version: 1 date column: sampling expression: index granularity: 8192 mode: 0 sign column: primary key: id data format version: 1 partition key: granularity bytes: 10485760 -- alter table attach partition的日志 Row 10: ─────── name: log-0000000016 value: format version: 4 create_time: 2022-12-20 06:11:59 source replica: 172.16.121.248 block_id: REPLACE_RANGE drop_range_name: all_0_0_0 from_database: rep from_table: tmp_rep source_parts: ['all_1_1_0'] new_parts: ['all_8_8_0'] part_checksums: ['5381E04F17BD6299E7C1F56B445FB8DB'] columns_version: -1 -- insert操作对应的日志 Row 11: ─────── name: log-0000000017 value: format version: 4 create_time: 2022-12-20 06:17:38 source replica: 172.16.121.248 block_id: all_1659522035524593032_2034088950575960742 get all_9_9_0 part_type: Compact

mutation节点信息

ck02 :) select name, value from system.zookeeper where path='/clickhouse/tables/01/rep/rep_table/mutations'\G SELECT name, value FROM system.zookeeper WHERE path = '/clickhouse/tables/01/rep/rep_table/mutations' Query id: 2cea07ee-0b0b-4a6d-85f0-f73fcb129f06 Row 1: ────── name: 0000000001 value: format version: 1 create time: 2022-12-20 06:06:11 source replica: 172.16.121.248 block numbers count: 1 all 3 commands: DELETE WHERE id = 1 alter version: -1 Row 2: ────── name: 0000000000 value: format version: 1 create time: 2022-12-20 06:05:40 source replica: 172.16.121.248 block numbers count: 1 all 2 commands: UPDATE val = \'updated\' WHERE 1 alter version: -1 Row 3: ────── name: 0000000003 value: format version: 1 create time: 2022-12-20 06:08:01 source replica: 172.16.121.248 block numbers count: 1 all 5 commands: DROP COLUMN padding2 alter version: 5

replicas节点

每一个副本都会在replicas路径下建立一个节点。

ck02 :) select name from system.zookeeper where path='/clickhouse/tables/01/rep/rep_table/replicas/172.16.121.48' ; SELECT name FROM system.zookeeper WHERE path = '/clickhouse/tables/01/rep/rep_table/replicas/172.16.121.48' Query id: 9f4dd647-39fa-42ed-a8a2-beb642673018 ┌─name────────────────────────┐ │ is_lost │ │ metadata │ │ is_active │ │ mutation_pointer │ │ columns │ │ max_processed_insert_time │ │ flags │ │ log_pointer │ │ min_unprocessed_insert_time │ │ host │ │ parts │ │ queue │ │ metadata_version │ └─────────────────────────────┘

replicas下的关键信息:

log_pointer: 当前副本处理的日志位点

queue:当前节点待处理任务队列

metadata_version: 元数据版本

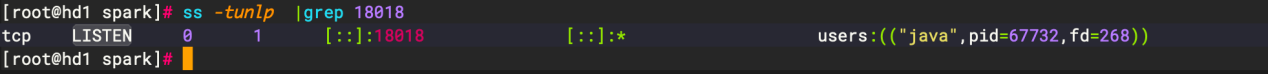

添加新副本

给已有的表添加新副本时,新副本会选择一个原有的节点做全量数据同步。

从clickhouse的debug日志中可以看到复制的大概流程:

executeQuery: (from [::ffff:127.0.0.1]:42530) CREATE TABLE rep.rep_table ( `id` Int32, `val` String ) ENGINE = ReplicatedMergeTree('/clickhouse/tables/{shard_id}/rep/rep_table', '{replica}') ORDER BY id SETTINGS index_granularity = 8192; (stage: Complete)

rep.rep_table (b2fe260c-ca4f-4e6d-903a-6a4f0358777e): Loading data parts

rep.rep_table (b2fe260c-ca4f-4e6d-903a-6a4f0358777e): There are no data parts

rep.rep_table (b2fe260c-ca4f-4e6d-903a-6a4f0358777e): This table /clickhouse/tables/01/rep/rep_table is already created, will add new replica

rep.rep_table (b2fe260c-ca4f-4e6d-903a-6a4f0358777e): Creating replica /clickhouse/tables/01/rep/rep_table/replicas/ck03

rep.rep_table (b2fe260c-ca4f-4e6d-903a-6a4f0358777e): Became leader

rep.rep_table (ReplicatedMergeTreeRestartingThread): Activating replica.

rep.rep_table (b2fe260c-ca4f-4e6d-903a-6a4f0358777e): Replica 172.16.121.48 has log pointer '18', approximate 0 queue lag and 0 queue size

rep.rep_table (b2fe260c-ca4f-4e6d-903a-6a4f0358777e): Replica 172.16.121.248 has log pointer '18', approximate 0 queue lag and 0 queue size

rep.rep_table (b2fe260c-ca4f-4e6d-903a-6a4f0358777e): Will mimic 172.16.121.48

rep.rep_table (b2fe260c-ca4f-4e6d-903a-6a4f0358777e): Queued 3 parts to be fetched, 0 parts ignored

rep.rep_table (b2fe260c-ca4f-4e6d-903a-6a4f0358777e): Fetching part all_0_1_1_6 from /clickhouse/tables/01/rep/rep_table/replicas/172.16.121.248

rep.rep_table (b2fe260c-ca4f-4e6d-903a-6a4f0358777e): Fetching part all_9_9_0 from /clickhouse/tables/01/rep/rep_table/replicas/172.16.121.248

rep.rep_table (b2fe260c-ca4f-4e6d-903a-6a4f0358777e): Fetching part all_8_8_0 from /clickhouse/tables/01/rep/rep_table/replicas/172.16.121.248在zookeeper中添加新的replica节点

根据其他节点的log pointer和queue信息,选择复制的源端节点

将源端节点的parts信息加入到本节点的queue中。

将part下载到本节点并attach到表中。