Installing the Doris Audit Plugin

Plugin download: https://doris.apache.org/download

1. Install the Doris audit plugin

(1) Extract the Audit Loader plugin

Step 1. Copy the plugin file

cp /opt/dtstack/Doris/extensions/audit_loader/auditloader.zip /opt/dtstack/Doris/fe/plugins/

Step 2. Change to the plugin directory and unzip the package

cd /opt/dtstack/Doris/fe/plugins/

unzip auditloader.zip

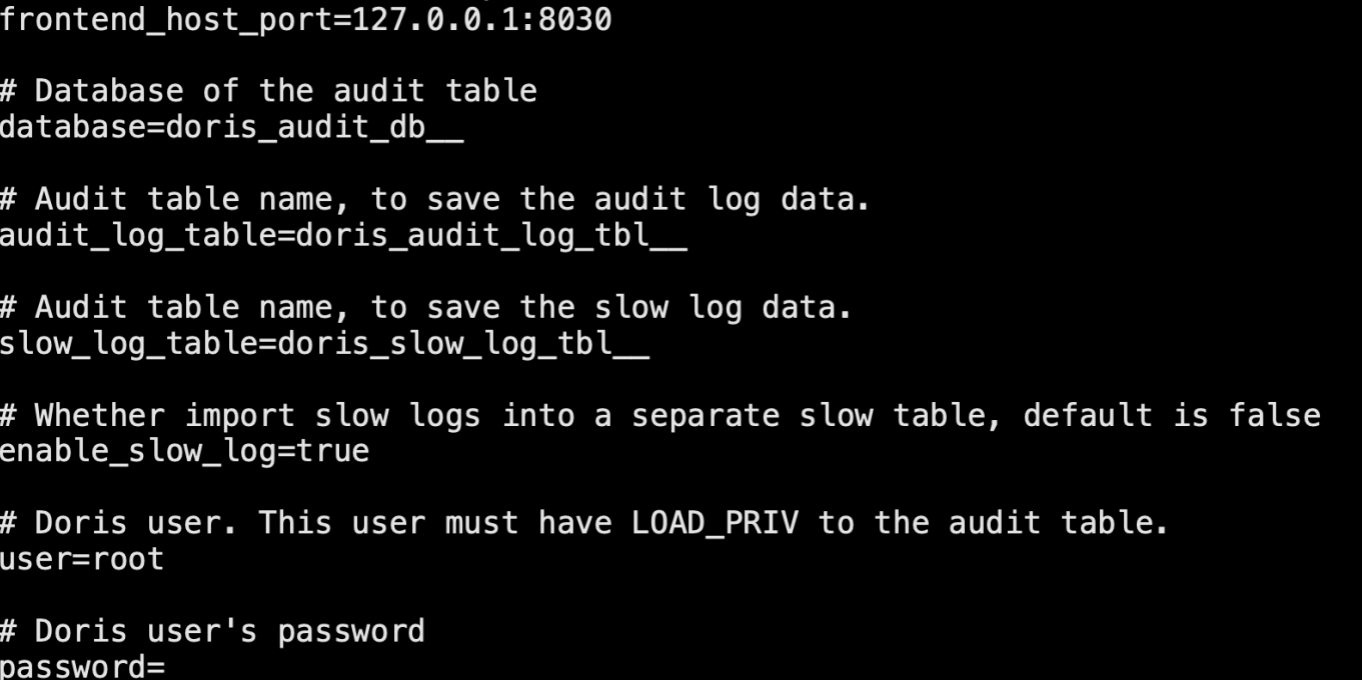
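A quick sanity check after unzipping can catch a bad copy before anything is edited. The sketch below assumes the archive contains the three files that the repackaging step of this guide names (auditloader.jar, plugin.properties, plugin.conf). The function name `check_plugin_files` is hypothetical and not part of Doris.

```shell
# Hypothetical helper: verify that the extracted plugin files are present.
# Usage: check_plugin_files /opt/dtstack/Doris/fe/plugins
check_plugin_files() {
  dir="$1"
  missing=0
  for f in auditloader.jar plugin.properties plugin.conf; do
    if [ ! -f "$dir/$f" ]; then
      # Report each missing file on stderr and remember the failure.
      echo "missing: $dir/$f" >&2
      missing=1
    fi
  done
  # Exit status 0 when all files exist, 1 otherwise.
  return "$missing"
}
```

The function returns a non-zero status when any file is missing, so it can gate the later steps in a script.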

(2) Edit the configuration

vi plugin.conf

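Configuration options:

frontend_host_port: IP address and HTTP port of the FE node, in the format <fe_ip>:<fe_http_port>. Default: 127.0.0.1:8030.

database: Name of the audit log database.

audit_log_table: Name of the audit log table.

slow_log_table: Name of the slow-query log table.

enable_slow_log: Whether to enable slow-query log import. Default: false.

user: Cluster user name. This user must have the INSERT privilege on the corresponding tables.

password: Password of the cluster user.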
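For orientation, the entries described above might look like the following once edited. This is a sketch, not the shipped file. It assumes plugin.conf uses plain key=value lines, takes the database and table names from the table-creation step of this guide, and uses root with an empty password only because the connection example in this guide logs in that way. It writes to a scratch file named plugin.conf.example (a hypothetical name), so the real plugin.conf and any other settings in it stay untouched.

```shell
# Write an illustrative copy of the settings to a scratch file.
# All values are examples; replace them with those of your cluster.
cat > plugin.conf.example <<'EOF'
# FE node IP and HTTP port
frontend_host_port=127.0.0.1:8030
# Database and tables that receive the logs
database=doris_audit_db__
audit_log_table=doris_audit_log_tbl__
slow_log_table=doris_slow_log_tbl__
# Set to true to also import slow-query logs
enable_slow_log=false
# Account with INSERT privilege on the tables above
user=root
password=
EOF
```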

(3) Repackage the Audit Loader plugin

zip -r -q -m auditloader.zip auditloader.jar plugin.properties plugin.conf

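(4) Create the database and tables

To enable slow-query log import, you must also create the slow-log table doris_slow_log_tbl__, whose schema is identical to that of doris_audit_log_tbl__. Set the dynamic_partition properties according to how many days of audit logs you want to retain.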

create database doris_audit_db__;

create table doris_audit_db__.doris_audit_log_tbl__

(

query_id varchar(48) comment "Unique query id",

`time` datetime not null comment "Query start time",

client_ip varchar(32) comment "Client IP",

user varchar(64) comment "User name",

db varchar(96) comment "Database of this query",

state varchar(8) comment "Query result state. EOF, ERR, OK",

error_code int comment "Error code of failing query.",

error_message string comment "Error message of failing query.",

query_time bigint comment "Query execution time in millisecond",

scan_bytes bigint comment "Total scan bytes of this query",

scan_rows bigint comment "Total scan rows of this query",

return_rows bigint comment "Returned rows of this query",

stmt_id int comment "An incremental id of statement",

is_query tinyint comment "Is this statement a query. 1 or 0",

frontend_ip varchar(32) comment "Frontend ip of executing this statement",

cpu_time_ms bigint comment "Total scan cpu time in millisecond of this query",

sql_hash varchar(48) comment "Hash value for this query",

sql_digest varchar(48) comment "Sql digest for this query",

peak_memory_bytes bigint comment "Peak memory bytes used on all backends of this query",

stmt string comment "The original statement, trimmed if longer than 2G"

) engine=OLAP

duplicate key(query_id, `time`, client_ip)

partition by range(`time`) ()

distributed by hash(query_id) buckets 1

properties(

"dynamic_partition.time_unit" = "DAY",

"dynamic_partition.start" = "-30",

"dynamic_partition.end" = "3",

"dynamic_partition.prefix" = "p",

"dynamic_partition.buckets" = "1",

"dynamic_partition.enable" = "true",

"replication_num" = "3"

);

create table doris_audit_db__.doris_slow_log_tbl__

(

query_id varchar(48) comment "Unique query id",

`time` datetime not null comment "Query start time",

client_ip varchar(32) comment "Client IP",

user varchar(64) comment "User name",

db varchar(96) comment "Database of this query",

state varchar(8) comment "Query result state. EOF, ERR, OK",

error_code int comment "Error code of failing query.",

error_message string comment "Error message of failing query.",

query_time bigint comment "Query execution time in millisecond",

scan_bytes bigint comment "Total scan bytes of this query",

scan_rows bigint comment "Total scan rows of this query",

return_rows bigint comment "Returned rows of this query",

stmt_id int comment "An incremental id of statement",

is_query tinyint comment "Is this statement a query. 1 or 0",

frontend_ip varchar(32) comment "Frontend ip of executing this statement",

cpu_time_ms bigint comment "Total scan cpu time in millisecond of this query",

sql_hash varchar(48) comment "Hash value for this query",

sql_digest varchar(48) comment "Sql digest for this query",

peak_memory_bytes bigint comment "Peak memory bytes used on all backends of this query",

stmt string comment "The original statement, trimmed if longer than 2G"

) engine=OLAP

duplicate key(query_id, `time`, client_ip)

partition by range(`time`) ()

distributed by hash(query_id) buckets 1

properties(

"dynamic_partition.time_unit" = "DAY",

"dynamic_partition.start" = "-30",

"dynamic_partition.end" = "3",

"dynamic_partition.prefix" = "p",

"dynamic_partition.buckets" = "1",

"dynamic_partition.enable" = "true",

"replication_num" = "3"

);
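The retention window comes from "dynamic_partition.start": with the value "-30" and a DAY time unit, partitions older than 30 days are dropped automatically. If the window needs to change after the tables exist, an ALTER TABLE ... SET statement can update the property. The sketch below only builds that statement. The helper name `retention_sql` is hypothetical, and the connection parameters in the usage comment are the ones used in the deployment step of this guide.

```shell
# Hypothetical helper: print an ALTER TABLE statement that keeps N days of logs.
# Usage: retention_sql doris_audit_log_tbl__ 7 | mysql -uroot -P9030 -h127.0.0.1
retention_sql() {
  table="$1"   # table inside doris_audit_db__
  days="$2"    # number of days of history to keep
  printf 'ALTER TABLE doris_audit_db__.%s SET ("dynamic_partition.start" = "-%s");\n' \
    "$table" "$days"
}
```

Printing the statement instead of executing it lets you review the SQL before piping it to the client.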

(5) Deploy

Step 1. Copy auditloader.zip

Copy the repackaged zip file to the /opt/dtstack/Doris/fe/plugins/ directory on every FE node.
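Copying to several FE nodes is easy to script. The loop below is a sketch that assumes passwordless SSH between the nodes and uses placeholder host names (fe1, fe2, fe3 are hypothetical). With DRY_RUN=1 it only prints the scp commands so they can be reviewed first.

```shell
# Hypothetical helper: copy the repackaged plugin to every FE node given as an argument.
# Usage: DRY_RUN=1 distribute_plugin fe1 fe2 fe3
distribute_plugin() {
  src=/opt/dtstack/Doris/fe/plugins/auditloader.zip
  dest=/opt/dtstack/Doris/fe/plugins/
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      # Dry run: show the command without executing it.
      echo "scp $src $host:$dest"
    else
      # Stop at the first failed copy.
      scp "$src" "$host:$dest" || return 1
    fi
  done
}
```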

Step 2. Connect to the FE

mysql -uroot -P9030 -h127.0.0.1

Step 3. Run the install command

INSTALL PLUGIN FROM "/opt/dtstack/Doris/fe/plugins/auditloader.zip";
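To confirm the installation, SHOW PLUGINS lists the installed plugins, and once some queries have run, the audit table should start receiving rows (they may not appear immediately). The sketch below prints the check statements. `verify_sql` is a hypothetical helper name, and the table name is the one created earlier in this guide.

```shell
# Hypothetical helper: print statements that check the plugin after installation.
# Usage: verify_sql | mysql -uroot -P9030 -h127.0.0.1
verify_sql() {
  cat <<'EOF'
SHOW PLUGINS;
SELECT COUNT(*) FROM doris_audit_db__.doris_audit_log_tbl__;
EOF
}
```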