慢查询导致ES CPU资源打满

o 一、问题背景

§ 1.2 收到告警

o 二、处理过程

§ 2.1 查看监控

o 三、小结

一、问题背景

1.1 集群背景信息

6.7.0 es-cn-xxxxxx

16核32G 6 data node

2核8G 3 master node

跨可用区部署(2个可用区)

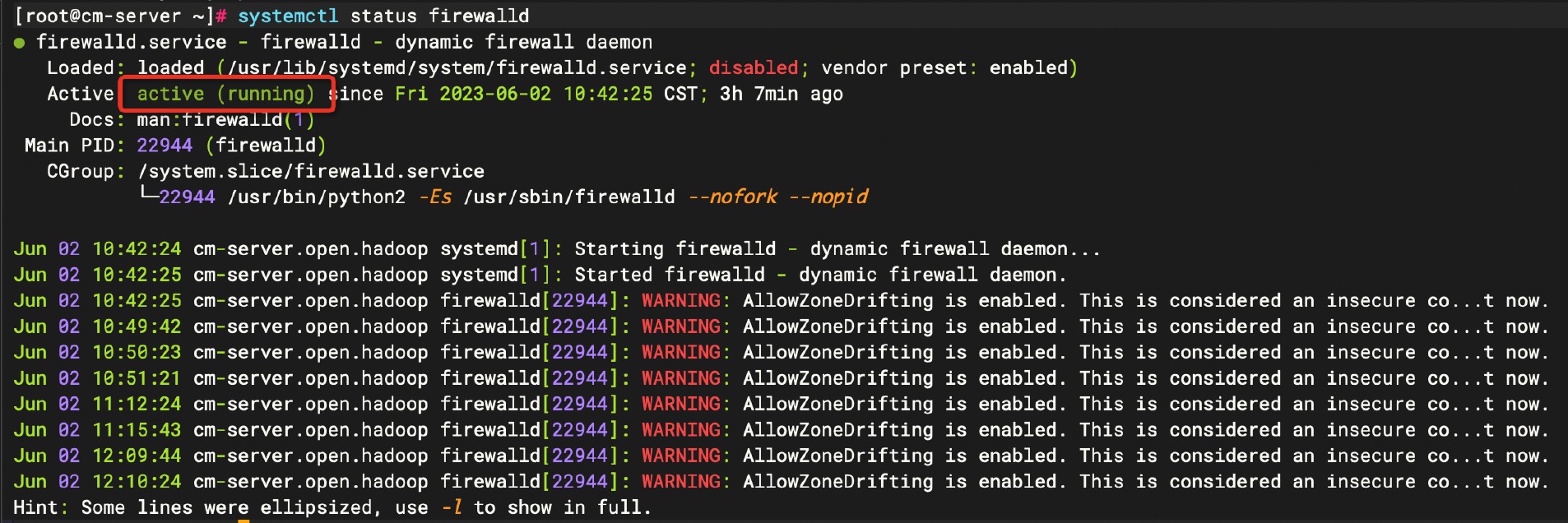

1.2 收到告警

【云监控】Elasticsearch<nodeIP=172.30.203.243,clusterId=es-cn-xxxxxx>于12:00 发生告警,节点CPU使用率平均值(97.24%>=60% ), 持续时间25分钟, 生产ES CPU告警

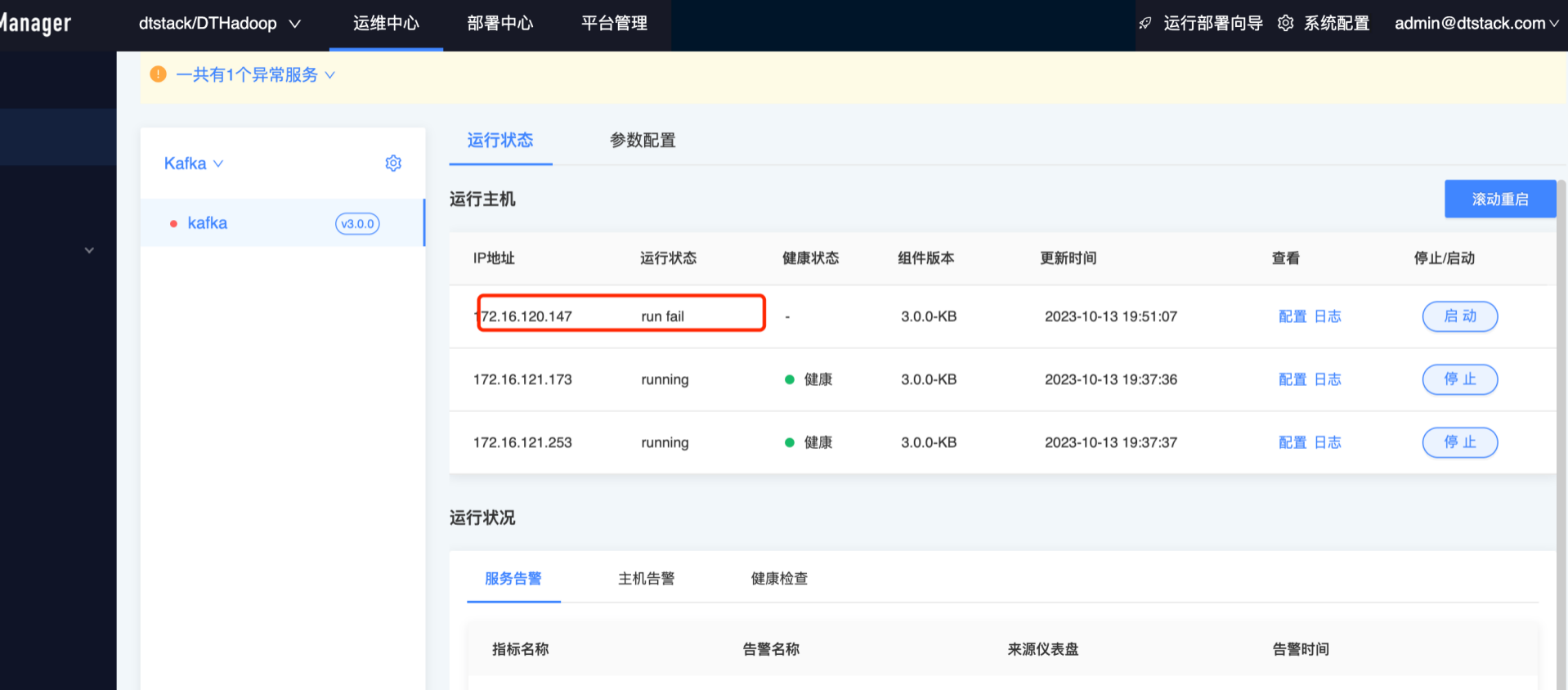

二、处理过程

2.1 查看监控

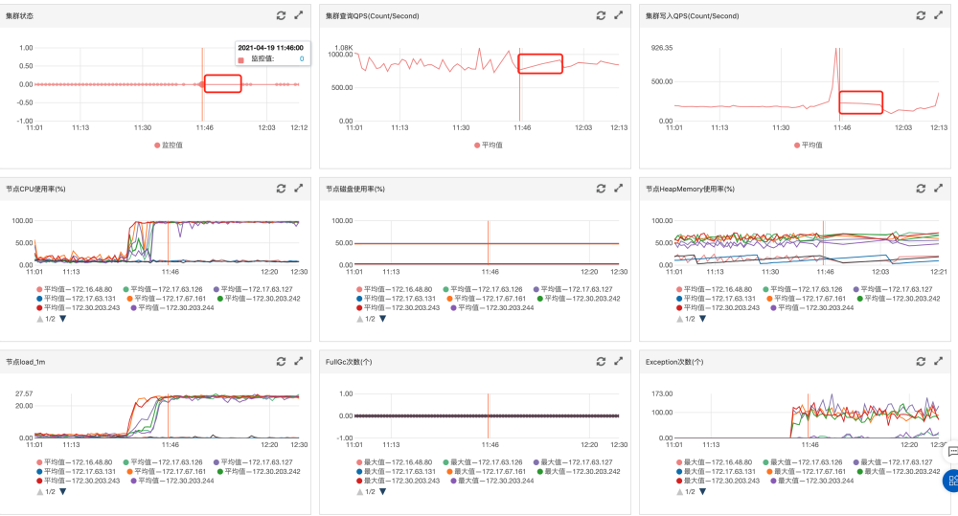

从监控可以看到,在11:30后,集群所有节点的CPU打高,且监控存在断点,这说明集群的压力已经很大了,监控数据都没有办法很好的写入。

但是从已有的点来看,集群的QPS变化不大,所以需要登录ES查看是什么查询或者写入导致。

2.2 登录kibana查看

想要登录kibana查看问题,kibana无法打开,直接报错:

{"statusCode":429,"error":"Too Many Requests","message":"all shards failed: [es_rejected_execution_exception] rejected execution of org.elasticsearch.common.util.concurrent.TimedRunnable@655525d8 on QueueResizingEsThreadPoolExecutor[name = -e1EQiE/search, queue capacity = 1000, min queue capacity = 1000, max queue capacity = 1000, frame size = 2000, targeted response rate = 1s, task execution EWMA = 287.3ms, adjustment amount = 50, org.elasticsearch.common.util.concurrent.QueueResizingEsThreadPoolExecutor@82d5a16[Running, pool size = 25, active threads = 25, queued tasks = 1009, completed tasks = 1383751000]]"}

通过这个报错可以知道,线程池已经打满了,且队列也已经打满了。

2.3 登录服务器使用命令查看

2.3.1 GET _cat/tasks?v

curl -u elastic:****** -XGET http://******.elasticsearch.aliyuncs.com:9200/_cat/tasks?v

事实上,当ES集群负载特别大的时候,特别是队列打满的时候,会拒绝所有的请求

但是此时集群是正常运行的,kibana可能完全无法使用,命令行总有时候可以使用

下面是task的部分结果:

action task_id parent_task_id type start_time timestamp running_time ip nodeindices:data/read/search E63Y3Ku8SHOaJPsTb4PYng:1838483389 - transport 1618810188514 05:29:48 39s 172.17.67.161 E63Y3Kuindices:data/read/search[phase/fetch/id] 7FH0hL6USj2ASP7-OgvZ_A:1692804805 E63Y3Ku8SHOaJPsTb4PYng:1838483389 netty 1618810219234 05:30:19 8.2s 172.30.203.243 7FH0hL6indices:data/read/search[phase/fetch/id] 7FH0hL6USj2ASP7-OgvZ_A:1692804806 E63Y3Ku8SHOaJPsTb4PYng:1838483389 netty 1618810219250 05:30:19 8.2s 172.30.203.243 7FH0hL6indices:data/read/search E63Y3Ku8SHOaJPsTb4PYng:1838484455 - transport 1618810192300 05:29:52 35.2s 172.17.67.161 E63Y3Kuindices:data/read/search[phase/fetch/id] -e1EQiE2SXeZ6iOrXEC8EQ:1827326450 E63Y3Ku8SHOaJPsTb4PYng:1838484455 netty 1618810222326 05:30:22 5.1s 172.17.63.127 -e1EQiEindices:data/read/search E63Y3Ku8SHOaJPsTb4PYng:1838484678 - transport 1618810192925 05:29:52 34.5s 172.17.67.161 E63Y3Kuindices:data/read/search[phase/fetch/id] 3FS5VBXzT3GoxBGQLJrZSg:1742222986 E63Y3Ku8SHOaJPsTb4PYng:1838484678 netty 1618810222435 05:30:22 5s 172.30.203.242 3FS5VBXindices:data/read/search -e1EQiE2SXeZ6iOrXEC8EQ:1827319070 - transport 1618810195495 05:29:55 32s 172.17.63.127 -e1EQiEindices:data/read/search[phase/fetch/id] 7FH0hL6USj2ASP7-OgvZ_A:1692804279 -e1EQiE2SXeZ6iOrXEC8EQ:1827319070 netty 1618810217253 05:30:17 10.2s 172.30.203.243 7FH0hL6indices:data/read/search 7FH0hL6USj2ASP7-OgvZ_A:1692798599 - transport 1618810196684 05:29:56 30.8s 172.30.203.243 7FH0hL6indices:data/read/search[phase/fetch/id] -e1EQiE2SXeZ6iOrXEC8EQ:1827326119 7FH0hL6USj2ASP7-OgvZ_A:1692798599 netty 1618810221253 05:30:21 6.2s 172.17.63.127 -e1EQiEindices:data/read/search 3FS5VBXzT3GoxBGQLJrZSg:1742216171 - transport 1618810199986 05:29:59 27.5s 172.30.203.242 3FS5VBXindices:data/read/search[phase/fetch/id] 7FH0hL6USj2ASP7-OgvZ_A:1692804227 3FS5VBXzT3GoxBGQLJrZSg:1742216171 netty 1618810216997 05:30:16 10.5s 172.30.203.243 7FH0hL6indices:data/read/search -e1EQiE2SXeZ6iOrXEC8EQ:1827320186 - transport 1618810200068 05:30:00 27.4s 172.17.63.127 -e1EQiEindices:data/read/search[phase/fetch/id] 7FH0hL6USj2ASP7-OgvZ_A:1692804235 -e1EQiE2SXeZ6iOrXEC8EQ:1827320186 netty 1618810217038 05:30:17 10.4s 172.30.203.243 7FH0hL6indices:data/read/search 7FH0hL6USj2ASP7-OgvZ_A:1692799668 - transport 1618810200427 05:30:00 27s 172.30.203.243 7FH0hL6indices:data/read/search[phase/fetch/id] 7FH0hL6USj2ASP7-OgvZ_A:1692804482 7FH0hL6USj2ASP7-OgvZ_A:1692799668 direct 1618810217875 05:30:17 9.6s 172.30.203.243 7FH0hL6indices:data/read/search -e1EQiE2SXeZ6iOrXEC8EQ:1827320293 -

可以发现的是,查询时间最长的是30多秒,大部分都是在秒级别。

但是总共有5000多个task,这也印证了登录kibana的时候的报错(队列打满)

2.3.2 GET _tasks?detailed

查看一下task详情,但是其实因为task太多了,基本无法分辨具体是什么查询导致了CPU的打高。

还有其他的查询,有时候会用得到:

GET _nodes/hot_threadsGET /_cat/thread_pool?v

2.4 查看慢日志

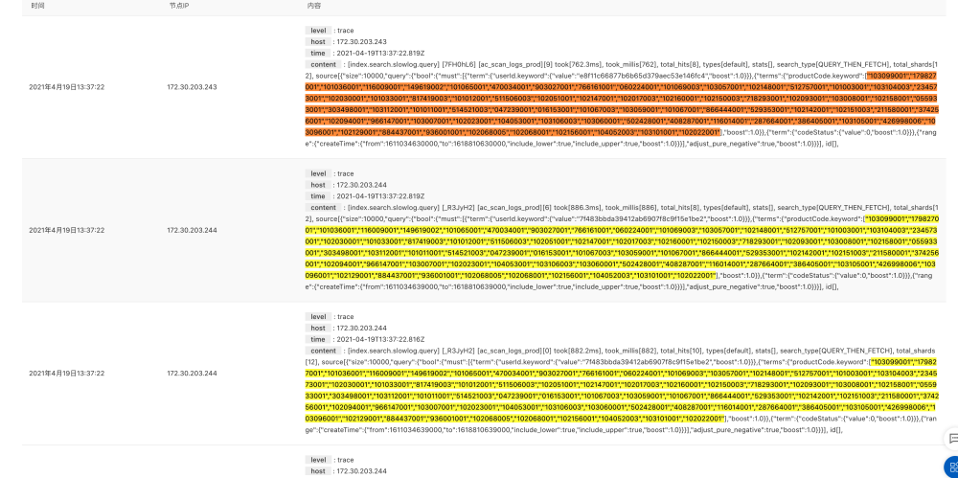

4月18日 11:50到12:00之间的慢日志是19页,4月19日 11:50到11:52之间的慢日志是大于500页,所以明显可以看到今天的慢SQL增多且异常。

翻看慢日志,翻看很多页,大量的是下面这个查询

level : tracehost : 172.17.67.161time : 2021-04-19T11:52:00.921Zcontent : [index.search.slowlog.query] [E63Y3Ku] [aaaaaa_scan_logs_prod][11] took[1.4s], took_millis[1470], total_hits[9], types[default], stats[], search_type[QUERY_THEN_FETCH], total_shards[12], source[{"size":10000,"query":{"bool":{"must":[{"term":{"userId.keyword":{"value":"baff70a0efa5120dd5e20f07618815f0","boost":1.0}}},{"terms":{"productCode.keyword":["103099001","179827001","101036001","116009001","149619002","101065001","470034001","903027001","766161001","060224001","101069003","103057001","102148001","512757001","101003001","103104003","234573001","102030001","101033001","817419003","101012001","511506003","102051001","102147001","102017003","102160001","102150003","718293001","102093001","103008001","102158001","055933001","303498001","103112001","101011001","514521003","047239001","016153001","101067003","103059001","101067001","866444001","529353001","102142001","102151003","211580001","374256001","102094001","966147001","103007001","102023001","104053001","103106003","103060001","502428001","408287001","116014001","287664001","386405001","103105001","426998006","103096001","102129001","884437001","936001001","102068005","102068001","102156001","104052003","103101001","102022001"],"boost":1.0}},{"term":{"codeStatus":{"value":0,"boost":1.0}}},{"range":{"createTime":{"from":1611028314000,"to":1618804314000,"include_lower":true,"include_upper":true,"boost":1.0}}}],"adjust_pure_negative":true,"boost":1.0}}}], id[],

对比task detailed,里面也大量有这个查询,且这个慢查询昨天没有出现,基本可以判断,是这个慢查询并发较大,导致了集群CPU打满。

2.5 和业务沟通

和业务方沟通,给出查询内容和异常情况说明。经过排查,的确是一个之前未曾打开的接口,被前端主业务流程调用,即每个人扫码的时候都会调用一次这个查询。这样这个查询调用就特别多了。

紧急改版禁止这个查询接口,发布后,ES集群负载下降到正常。

三、小结

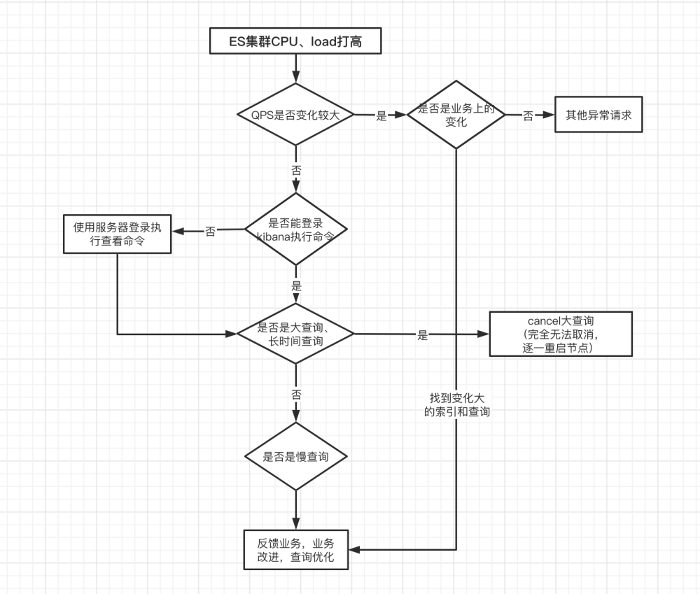

3.1 ES集群CPU打高处理思路

对于ES集群CPU打高,如果判断是查询或者写入导致,大致可以按照下面的思路去处理

3.2 和其他方协同处理

有时候发现了问题,但是在自己这侧是无法解决的,要联系其他人员协助解决。

排查工具不限于kibana,排查方向不限于ES本身,也包括SLB、业务情况等等。