Integrating Spark with Ranger

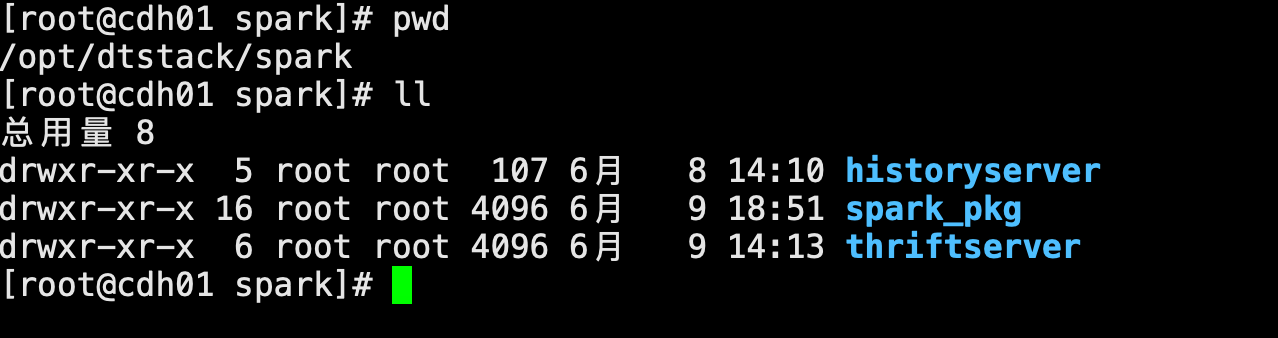

1. Package (as shown in the figure above), available at:

https://dtstack-download.oss-cn-hangzhou.aliyuncs.com/insight/insight-4em/release/hadoop/spark/2.4.8_ranger2.2/Spark_2.4.8-dt_centos7_x86_64.tar

2. Modify the configuration files

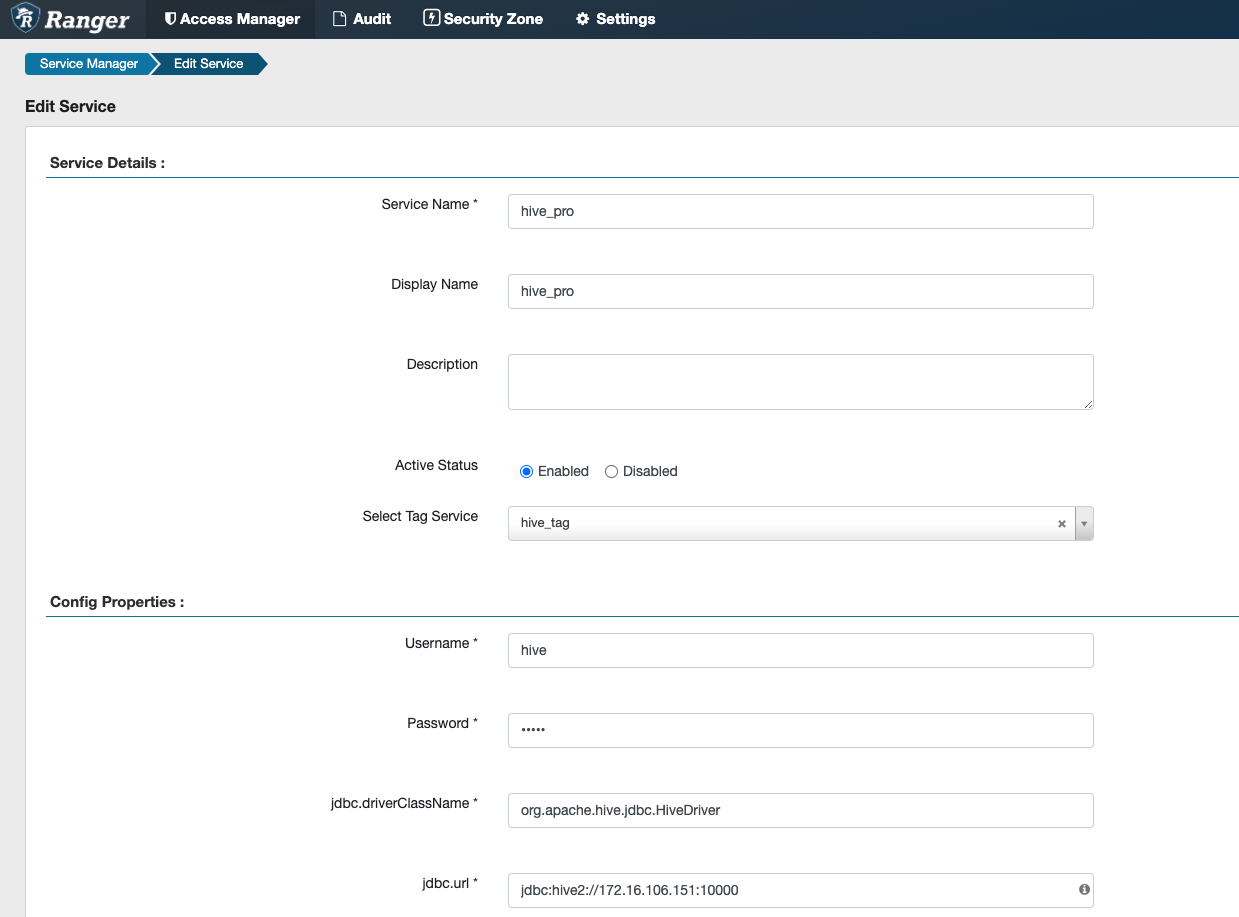

ranger-spark-security.xml

[root@cdh01 conf]# vim ranger-spark-security.xml
<configuration>
  <property>
    <name>ranger.plugin.spark.policy.rest.url</name>
    <value>http://172.16.106.151:6080</value>
  </property>
  <property>
    <name>ranger.plugin.spark.service.name</name>
    <!-- This name must match the service name configured on the Ranger Admin page -->
    <value>hive_pro</value>
  </property>
  <property>
    <name>ranger.plugin.spark.policy.cache.dir</name>
    <value>/etc/ranger/spark/policycache</value>
  </property>
  <property>
    <name>ranger.plugin.spark.policy.pollIntervalMs</name>
    <value>5000</value>
  </property>
  <property>
    <name>ranger.plugin.spark.policy.source.impl</name>
    <value>org.apache.ranger.admin.client.RangerAdminRESTClient</value>
  </property>
</configuration>
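To catch typos before restarting Spark, the file can be sanity-checked with a short script. This is a minimal sketch, assuming Python 3 with only the standard library. `load_properties` and `check_security_conf` are hypothetical helpers, and the key list simply mirrors the file above rather than any official list of required Ranger settings.

```python
import xml.etree.ElementTree as ET

# Keys used in the ranger-spark-security.xml example (not an official list).
REQUIRED_KEYS = (
    "ranger.plugin.spark.policy.rest.url",
    "ranger.plugin.spark.service.name",
    "ranger.plugin.spark.policy.cache.dir",
    "ranger.plugin.spark.policy.pollIntervalMs",
    "ranger.plugin.spark.policy.source.impl",
)


def load_properties(xml_text):
    """Parse <configuration><property><name/><value/></property>... into a dict.

    XML comments are dropped by ElementTree's default parser.
    """
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        value = (prop.findtext("value") or "").strip()
        if name:
            props[name] = value
    return props


def check_security_conf(props, expected_service):
    """Return a list of problems; an empty list means every check passed."""
    problems = [f"missing {key}" for key in REQUIRED_KEYS if key not in props]
    service = props.get("ranger.plugin.spark.service.name")
    if service is not None and service != expected_service:
        problems.append(f"service.name is {service!r}, Ranger Admin has {expected_service!r}")
    url = props.get("ranger.plugin.spark.policy.rest.url", "")
    if url and not url.startswith(("http://", "https://")):
        problems.append(f"policy.rest.url {url!r} is not an http(s) URL")
    interval = props.get("ranger.plugin.spark.policy.pollIntervalMs", "")
    if interval and not interval.isdigit():
        problems.append(f"pollIntervalMs {interval!r} is not an integer")
    return problems
```

For example, `check_security_conf(load_properties(open("ranger-spark-security.xml").read()), "hive_pro")` should return an empty list for the file above; a non-empty list names the property to fix.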

ranger-spark-audit.xml

[root@cdh01 conf]# vim ranger-spark-audit.xml
<configuration>
  <property>
    <name>xasecure.audit.is.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>xasecure.audit.destination.db</name>
    <value>false</value>
  </property>
  <property>
    <name>xasecure.audit.destination.db.jdbc.driver</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>xasecure.audit.destination.db.jdbc.url</name>
    <value>jdbc:mysql://172.16.106.151:3306/ranger</value>
  </property>
  <property>
    <name>xasecure.audit.destination.db.password</name>
    <value>123456</value>
  </property>
  <property>
    <name>xasecure.audit.destination.db.user</name>
    <value>ranger</value>
  </property>
</configuration>
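Note that `xasecure.audit.destination.db` is `false` here, so as written the JDBC driver, URL, user and password entries are not used by a DB audit destination. The sketch below (Python 3, standard library only; `load_properties` and `check_audit_conf` are hypothetical helpers) reports that kind of mismatch: it returns errors when the DB destination is enabled but a JDBC setting is missing, and notes when JDBC settings are present but unused.

```python
import xml.etree.ElementTree as ET

# JDBC settings that only matter when the DB audit destination is enabled.
DB_KEYS = (
    "xasecure.audit.destination.db.jdbc.driver",
    "xasecure.audit.destination.db.jdbc.url",
    "xasecure.audit.destination.db.user",
    "xasecure.audit.destination.db.password",
)


def load_properties(xml_text):
    """Parse a Hadoop-style <configuration> document into {name: value}."""
    root = ET.fromstring(xml_text)
    return {
        (p.findtext("name") or "").strip(): (p.findtext("value") or "").strip()
        for p in root.iter("property")
    }


def is_true(value):
    return value.strip().lower() == "true"


def check_audit_conf(props):
    """Return (errors, notes) for the audit settings.

    A missing boolean key is treated as "false" (an assumption of this sketch).
    """
    errors, notes = [], []
    if not is_true(props.get("xasecure.audit.is.enabled", "false")):
        notes.append("auditing is disabled")
        return errors, notes
    if is_true(props.get("xasecure.audit.destination.db", "false")):
        errors += [f"missing {key}" for key in DB_KEYS if not props.get(key)]
        url = props.get("xasecure.audit.destination.db.jdbc.url", "")
        if url and not url.startswith("jdbc:"):
            errors.append(f"jdbc.url {url!r} does not start with 'jdbc:'")
    else:
        unused = [key for key in DB_KEYS if key in props]
        if unused:
            notes.append("DB destination disabled; unused keys: " + ", ".join(unused))
    return errors, notes
```

On the file above it returns no errors and a single note listing the four unused JDBC keys.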

spark-defaults.conf

[root@cdh01 conf]# vim spark-defaults.conf
spark.eventLog.enabled false
spark.scheduler.mode FAIR
spark.scheduler.allocation.file /opt/dtstack/spark/spark_pkg/conf/fairscheduler.xml
#spark.shuffle.service.enabled true
# NOTE: "export" is shell syntax and Spark ignores this line in spark-defaults.conf; set SPARK_LOCAL_DIRS in conf/spark-env.sh instead.
export SPARK_LOCAL_DIRS=/data/spark_tmp/data
# Event logs (this later value overrides "spark.eventLog.enabled false" above)
spark.eventLog.enabled=true
spark.eventLog.compress=true
# Store on HDFS
spark.eventLog.dir=hdfs://nameservice1/tmp/spark-yarn-logs
spark.history.fs.logDirectory=hdfs://nameservice1/tmp/spark-yarn-logs
#spark.yarn.historyServer.address={{.historyserver_ip}}:18080
# Store locally
# spark.eventLog.dir=file:///usr/local/hadoop-2.7.3/logs/
# spark.history.fs.logDirectory=file:///usr/local/hadoop-2.7.3/logs/
# Enable periodic log cleanup
spark.history.fs.cleaner.enabled=true
# Log retention period
spark.history.fs.cleaner.maxAge={{.history_cleaner_maxAge}}
# Interval between cleanup checks
spark.history.fs.cleaner.interval={{.history_cleaner_interval}}
# Add the following settings at the bottom of the file:
# Set the Hive metastore version to 2.1.1
spark.sql.hive.metastore.version=2.1.1
# Use the Hive 2.1.1 jars
spark.sql.hive.metastore.jars=/opt/cloudera/parcels/CDH/lib/hive/lib/*

##开启ranger权限校验

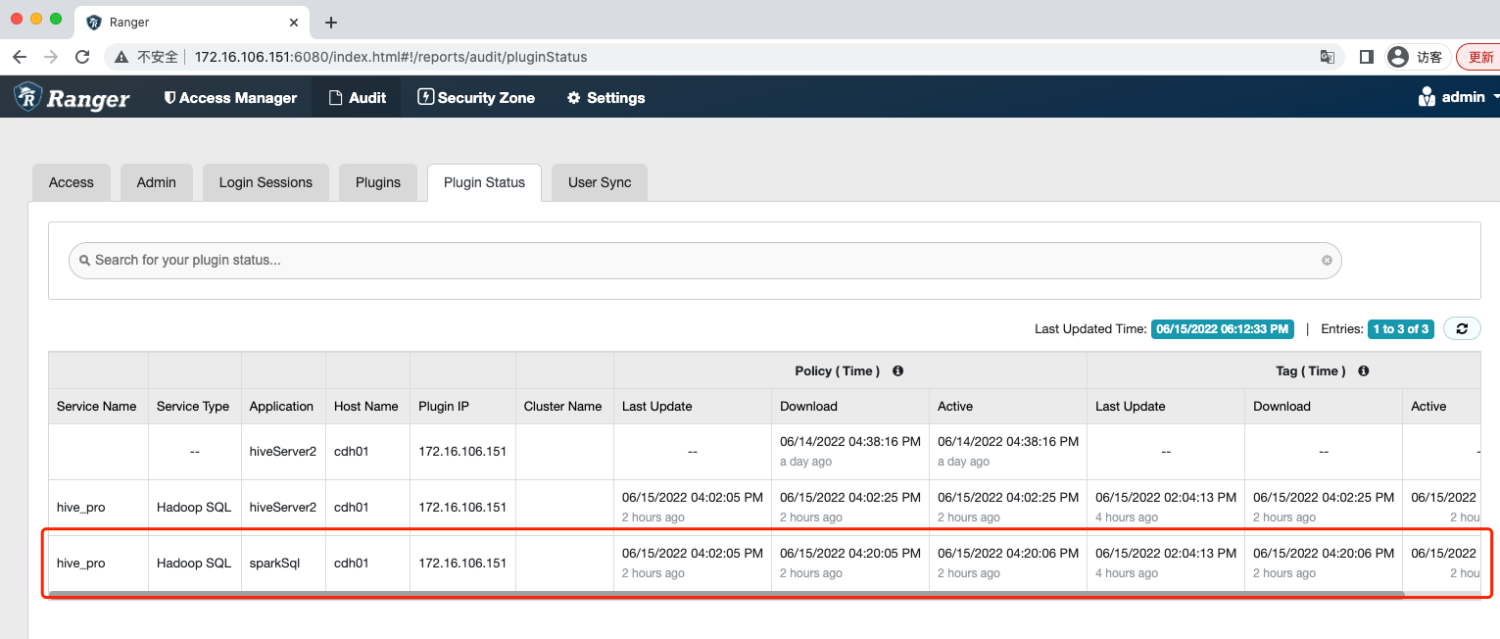

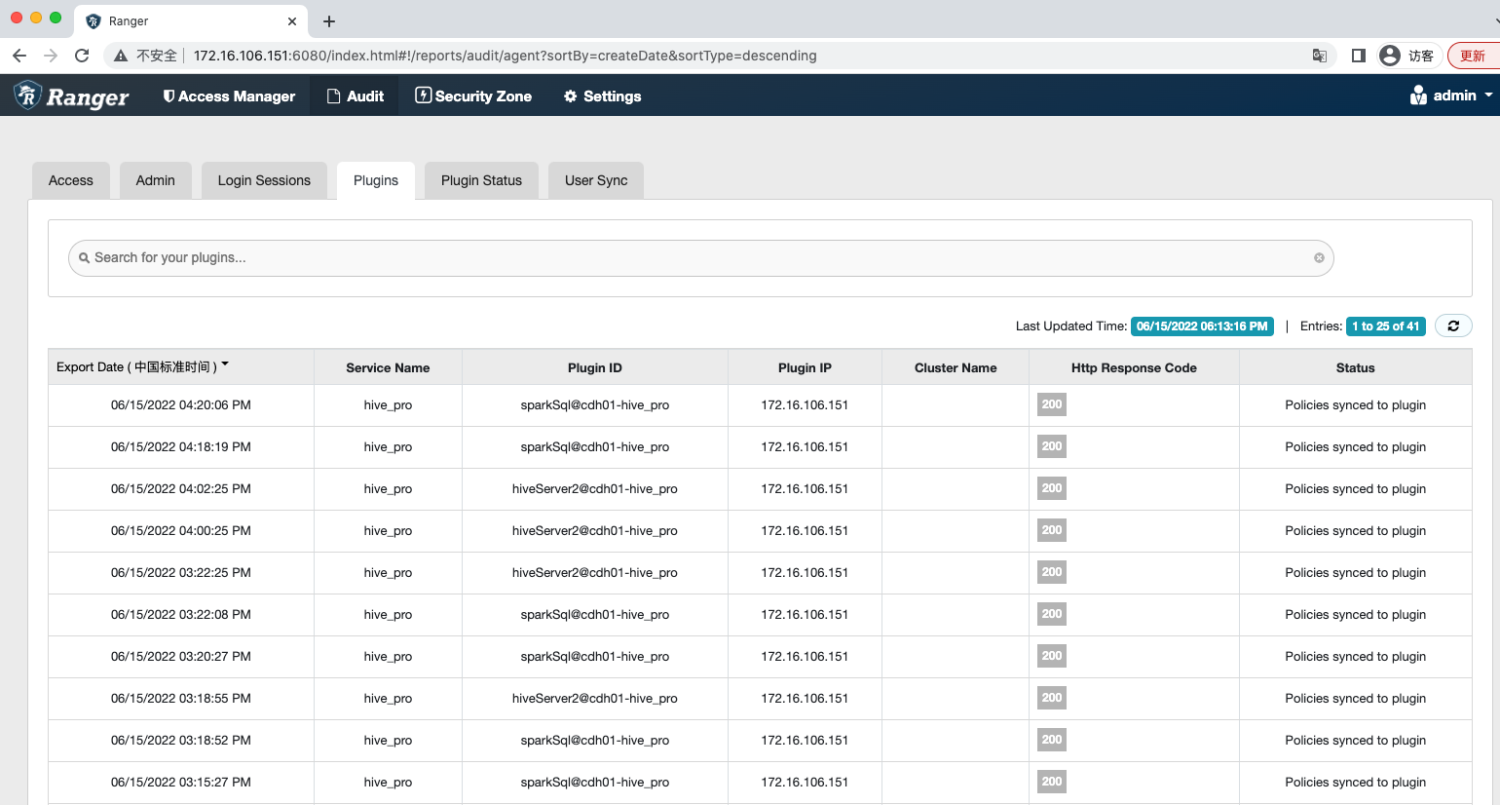

spark.sql.extensions=org.apache.spark.ranger.security.api.RangerSparkSQLExtension3、spark sql插件验证

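Spark and Hive share the same Ranger permissions, and the service configured above uses Hive's JDBC connection, so HiveServer2 must be deployed first.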
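Before running test queries, a quick pre-flight check can catch two easy mistakes in this setup: the Ranger extension not actually being active in spark-defaults.conf, and Ranger Admin or HiveServer2 not being reachable. The sketch below is an illustration under stated assumptions, in Python 3 with the standard library only. `effective_spark_conf` approximates (it does not reimplement) how Spark loads spark-defaults.conf through java.util.Properties: later duplicates win and non-`spark.*` keys are dropped. `port_open` is a plain TCP connect. `preflight`, `ENDPOINTS` and the HiveServer2 host are hypothetical; 10000 is only HiveServer2's default Thrift port.

```python
import re
import socket

# Class name taken from the spark.sql.extensions line above.
RANGER_EXTENSION = "org.apache.spark.ranger.security.api.RangerSparkSQLExtension"

# Hypothetical endpoints: Ranger Admin comes from ranger-spark-security.xml;
# the HiveServer2 host is a placeholder, 10000 is HiveServer2's default port.
ENDPOINTS = {
    "Ranger Admin": ("172.16.106.151", 6080),
    "HiveServer2": ("hiveserver2.example.com", 10000),
}

# Key, optional "=" or ":" separator, value (a simplification of Properties syntax).
_LINE_RE = re.compile(r"^([^=:\s]+)\s*[=:]?\s*(.*)$")


def effective_spark_conf(text):
    """Approximate how spark-defaults.conf is resolved.

    Returns (conf, ignored): conf maps spark.* keys to trimmed values, with later
    duplicates overwriting earlier ones; ignored lists non-spark.* keys.
    Backslash line continuations and escapes are not handled.
    """
    conf, ignored = {}, []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue  # blank line or comment
        match = _LINE_RE.match(line)
        if match is None:
            continue
        key, value = match.group(1), match.group(2).strip()
        if key.startswith("spark."):
            conf[key] = value
        else:
            ignored.append(key)
    return conf, ignored


def preflight(conf_text):
    """Return human-readable problems found in a spark-defaults.conf text."""
    conf, ignored = effective_spark_conf(conf_text)
    problems = [f"non-Spark line ignored: {key}" for key in ignored]
    problems += [f"unrendered template in {k}" for k, v in conf.items() if "{{" in v]
    # Split on commas in case several extension classes are listed.
    extensions = [e.strip() for e in conf.get("spark.sql.extensions", "").split(",")]
    if RANGER_EXTENSION not in extensions:
        problems.append("Ranger extension missing from spark.sql.extensions")
    return problems


def port_open(host, port, timeout=3.0):
    """Plain TCP connect check; True if something accepts connections."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Run `preflight` on the spark-defaults.conf edited above, and call `port_open` for each entry in `ENDPOINTS` from a node that will run Spark SQL. On that file the sketch would report the ignored `export` line and the two `{{...}}` history-cleaner placeholders that the deployment template is expected to fill in, while `effective_spark_conf` resolves `spark.eventLog.enabled` to `true` because the later line wins.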