Hive 3 on Spark integration

Prerequisites

A working Hadoop YARN environment

Oracle JDK 1.8
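A quick pre-flight check for the two prerequisites can be sketched in bash. The function names are hypothetical. `java -version` prints its version line to stderr, and `yarn node -list` asks the ResourceManager for its NodeManagers. The check only looks at the version number, not the vendor, so it cannot tell Oracle JDK from OpenJDK.

```shell
# Hypothetical helper: extract the Java major version from a version string
# such as "1.8.0_251" (old scheme) or "11.0.7" (new scheme).
java_major_version() {
  local v="$1"
  case "$v" in
    1.*) v="${v#1.}" ;;   # "1.8.0_251" -> "8.0_251"
  esac
  echo "${v%%[._]*}"      # keep everything before the first '.' or '_'
}

# Hypothetical helper: read the quoted version string printed by
# `java -version` (on stderr), e.g.: java version "1.8.0_251"
installed_java_version() {
  java -version 2>&1 | awk -F '"' '/version/ {print $2; exit}'
}

# Hypothetical pre-flight check for both prerequisites.
check_prereqs() {
  local major
  major="$(java_major_version "$(installed_java_version)")"
  if [ "$major" != "8" ]; then
    echo "expected JDK 1.8, found major version '$major'" >&2
    return 1
  fi
  # A non-zero exit here usually means the ResourceManager is unreachable.
  yarn node -list >/dev/null || { echo "YARN ResourceManager not reachable" >&2; return 1; }
  echo "prerequisites OK"
}
```

Run `check_prereqs` on the HiveServer2 host before continuing.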

1. Download Spark 2

https://archive.apache.org/dist/spark/spark-2.4.5/spark-2.4.5-bin-without-hadoop.tgz

Extract the archive into /opt.
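Downloading and extracting can be scripted roughly as below. This is a sketch assuming `curl` and `tar` are installed and that the caller may write to `/opt`; `spark_tgz_url` and `install_spark` are hypothetical names, and the URL pattern simply mirrors the link above.

```shell
# Hypothetical helper: archive URL of a Spark "without-hadoop" build,
# following the naming used on archive.apache.org.
spark_tgz_url() {
  local ver="$1"
  echo "https://archive.apache.org/dist/spark/spark-${ver}/spark-${ver}-bin-without-hadoop.tgz"
}

# Hypothetical helper: download the archive to a temp dir and extract it
# under a target directory (default /opt, which usually needs root).
install_spark() {
  local ver="${1:-2.4.5}" dest="${2:-/opt}"
  local url tgz
  url="$(spark_tgz_url "$ver")"
  tgz="$(mktemp -d)/$(basename "$url")"
  curl -fL -o "$tgz" "$url" || return 1
  tar -xzf "$tgz" -C "$dest" || return 1
  # Print the resulting install directory.
  echo "$dest/spark-${ver}-bin-without-hadoop"
}
```

`install_spark 2.4.5 /opt` should end by printing `/opt/spark-2.4.5-bin-without-hadoop`, the directory used as `SPARK_HOME` in step 2.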

2. Hive 3 environment configuration

export SPARK_HOME=/opt/spark-2.4.5-bin-without-hadoop
export SPARK_CONF_DIR=/opt/spark-2.4.5-bin-without-hadoop/conf
export SPARK_DIST_CLASSPATH=$HADOOP_HOME/etc/hadoop:$HADOOP_HOME/share/hadoop/common/lib/*:$HADOOP_HOME/share/hadoop/common/*:$HADOOP_HOME/share/hadoop/hdfs/*:$HADOOP_HOME/share/hadoop/hdfs/lib/*:$HADOOP_HOME/share/hadoop/yarn/lib/*:$HADOOP_HOME/share/hadoop/yarn/*:$HADOOP_HOME/share/hadoop/mapreduce/lib/*:$HADOOP_HOME/share/hadoop/mapreduce/*:$HADOOP_HOME/share/hadoop/tools/lib/*
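A hand-maintained `SPARK_DIST_CLASSPATH` is easy to get wrong, because a mistyped directory is silently ignored. The hypothetical bash helper below prints every entry whose directory does not exist, after stripping a trailing `/*` (a Java classpath wildcard, which only matches `.jar` files). As an alternative, Spark's documentation for "Hadoop free" builds suggests `export SPARK_DIST_CLASSPATH=$(hadoop classpath)`, which lets Hadoop compute the list.

```shell
# Hypothetical helper: print each classpath entry whose directory is missing.
# Entries are split on ':' with `read`, so the '*' wildcards are never
# glob-expanded by the shell.
missing_classpath_dirs() {
  local entry dir
  local -a entries
  IFS=':' read -r -a entries <<< "$1"
  for entry in "${entries[@]}"; do
    dir="${entry%/\*}"          # drop a trailing "/*" wildcard
    [ -d "$dir" ] || echo "$dir"
  done
}
```

`missing_classpath_dirs "$SPARK_DIST_CLASSPATH"` prints nothing when every directory exists.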

<configuration>

<!-- Location of the Spark 2 jars: jobs running on YARN look up their dependency jars in HDFS -->

<property>

<name>spark.yarn.jars</name>

<value>${fs.defaultFS}/spark-jars/*</value>

</property>

<!-- Hive 3 / Spark 2 connection timeout (time the remote Spark driver has to connect back to the Hive client) -->

<property>

<name>hive.spark.client.connect.timeout</name>

<value>30000ms</value>

</property>

</configuration>
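To double-check what Hive will actually read, a small lookup helper is enough. The sketch assumes the properties live in `$HIVE_HOME/conf/hive-site.xml` (the text does not name the file) and that `<name>` and `<value>` sit on their own lines, as above. `hive_site_value` is a hypothetical name, and it is an `awk` line matcher, not an XML parser. `${fs.defaultFS}` is expanded by Hadoop's configuration loader at runtime, so the helper prints it literally.

```shell
# Hypothetical helper: print the <value> of the property named $2 in file $1.
# Remembers the most recent <name> and prints the next <value> if it matches.
hive_site_value() {
  local file="$1" name="$2"
  awk -v want="$name" '
    /<name>/  { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n) }
    /<value>/ {
      if (n == want) {
        v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
        print v; exit
      }
    }
  ' "$file"
}
```

For example, `hive_site_value "$HIVE_HOME/conf/hive-site.xml" spark.yarn.jars`, followed by `hdfs dfs -ls /spark-jars` to confirm that the directory it points to has been populated in step 3.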

<property>

<name>spark.executor.cores</name>

<value>1</value>

</property>

<property>

<name>spark.executor.memory</name>

<value>1g</value>

</property>

<property>

<name>spark.driver.memory</name>

<value>1g</value>

</property>

<property>

<name>spark.yarn.driver.memoryOverhead</name>

<value>102</value>

</property>

<property>

<name>spark.shuffle.service.enabled</name>

<value>true</value>

</property>

<property>

<name>spark.eventLog.enabled</name>

<value>true</value>

</property>

spark.master=yarn
spark.eventLog.dir=hdfs:///user/spark/applicationHistory
spark.eventLog.enabled=true
spark.executor.memory=1g
spark.driver.memory=1g
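The `key=value` lines above follow the `spark-defaults.conf` format. The sketch below writes them into a directory passed as an argument (assumption: which directory the file must live in depends on the installation; `$SPARK_CONF_DIR` or `$HIVE_HOME/conf` are common choices) and creates the HDFS event-log directory, because with `spark.eventLog.enabled=true` Spark 2.x fails to start the application when `spark.eventLog.dir` does not exist. Both function names are hypothetical.

```shell
# Hypothetical helper: write the spark-defaults.conf entries listed above
# into directory $1, creating the directory if needed.
write_spark_defaults() {
  local dir="$1"
  mkdir -p "$dir"
  cat > "$dir/spark-defaults.conf" <<'EOF'
spark.master=yarn
spark.eventLog.dir=hdfs:///user/spark/applicationHistory
spark.eventLog.enabled=true
spark.executor.memory=1g
spark.driver.memory=1g
EOF
}

# Hypothetical helper: create the event-log directory on HDFS so that
# applications with event logging enabled can start.
create_event_log_dir() {
  hdfs dfs -mkdir -p /user/spark/applicationHistory
}
```

For example: `write_spark_defaults "$SPARK_CONF_DIR" && create_event_log_dir`.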

3. Spark dependency jars

cd /opt/spark-2.4.5-bin-without-hadoop/jars
# Disable the nohive ORC jar by renaming it
mv orc-core-1.5.5-nohive.jar orc-core-1.5.5-nohive.jar.bak
# Upload the jars to HDFS
hdfs dfs -rm -r -f /spark-jars
hdfs dfs -mkdir /spark-jars
cd /opt/spark-2.4.5-bin-without-hadoop/jars
hdfs dfs -put * /spark-jars
hdfs dfs -ls /spark-jars
# Copy the jars into Hive's lib directory
cp scala-compiler-2.11.12.jar scala-library-2.11.12.jar scala-reflect-2.11.12.jar spark-core_2.11-2.4.5.jar spark-network-common_2.11-2.4.5.jar spark-unsafe_2.11-2.4.5.jar spark-yarn_2.11-2.4.5.jar /opt/hive/lib/
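Because the jar names in the `cp` command are tied to Spark 2.4.5 and Scala 2.11, a pre-flight check avoids copying a partial set. The bash sketch below lists the seven jars from that command and copies them only if all are present; `HIVE_SPARK_JARS`, `missing_jars` and `copy_jars_to_hive` are hypothetical names.

```shell
# The jars copied into Hive's lib directory above (names exactly as in the
# text; they change with the Spark and Scala versions).
HIVE_SPARK_JARS=(
  scala-compiler-2.11.12.jar
  scala-library-2.11.12.jar
  scala-reflect-2.11.12.jar
  spark-core_2.11-2.4.5.jar
  spark-network-common_2.11-2.4.5.jar
  spark-unsafe_2.11-2.4.5.jar
  spark-yarn_2.11-2.4.5.jar
)

# Hypothetical helper: print each required jar missing from directory $1.
missing_jars() {
  local dir="$1" jar
  for jar in "${HIVE_SPARK_JARS[@]}"; do
    [ -f "$dir/$jar" ] || echo "$jar"
  done
}

# Hypothetical helper: copy the jars from $1 to $2 only when all are present.
copy_jars_to_hive() {
  local src="$1" dest="$2" missing jar
  missing="$(missing_jars "$src")"
  if [ -n "$missing" ]; then
    echo "missing in $src:" >&2
    echo "$missing" >&2
    return 1
  fi
  for jar in "${HIVE_SPARK_JARS[@]}"; do
    cp "$src/$jar" "$dest/" || return 1
  done
}
```

For example: `copy_jars_to_hive /opt/spark-2.4.5-bin-without-hadoop/jars /opt/hive/lib`.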

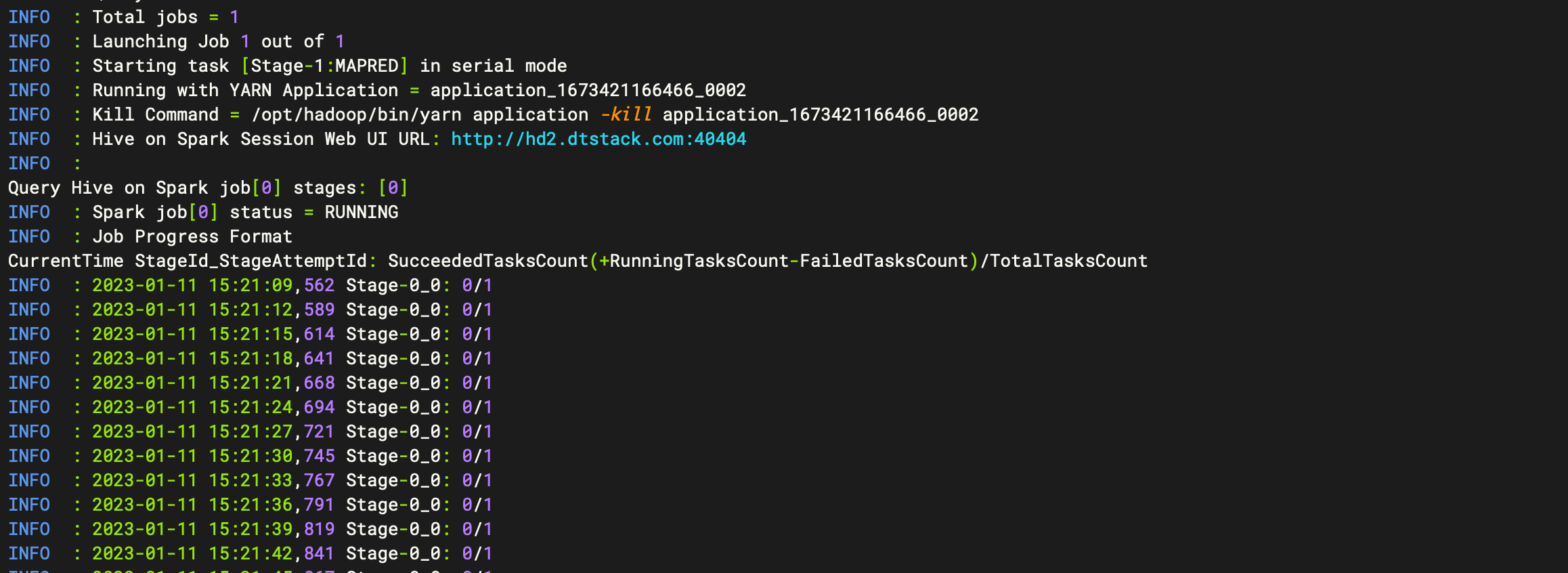

4. Test with Beeline

Detailed logs are written to /tmp/hive/*.log.

cd $HIVE_HOME
./bin/beeline -u 'jdbc:hive2://hd1:10000/default;principal=hive/hd1.dtstack.com@DTSTACK.COM'
# then, at the Beeline prompt:
set hive.execution.engine=spark;
insert into test1 values(1);
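When the `insert` fails, the log mentioned above is usually the first place to look. A minimal sketch, assuming the logs are `*.log` files under `/tmp/hive` as stated: the hypothetical `recent_hive_errors` prints the last lines containing `ERROR` or `Exception` from the newest log file.

```shell
# Hypothetical helper: print the last N (default 20) lines containing
# "ERROR" or "Exception" from the most recently modified *.log in a directory.
recent_hive_errors() {
  local dir="${1:-/tmp/hive}" n="${2:-20}" latest
  latest="$(ls -t "$dir"/*.log 2>/dev/null | head -n 1)"
  [ -n "$latest" ] || { echo "no .log files in $dir" >&2; return 1; }
  grep -E 'ERROR|Exception' "$latest" | tail -n "$n"
}
```

For a non-interactive run, Beeline's `-e` option can execute the same two statements, after which `recent_hive_errors` shows what went wrong, if anything.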