Detailed Guide to Common Solr APIs

1. Overseer Status and Statistics (OVERSEERSTATUS)

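Returns the current status of the Overseer, performance statistics for the various Overseer APIs, and the 10 most recent failures for each operation type.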

/admin/collections?action=OVERSEERSTATUS

Run it directly in the browser's address bar:

http://cm-server.open.hadoop:8983/solr/admin/collections?action=OVERSEERSTATUS

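Or run it from a terminal (enclose the URL after curl in single or double quotes so that the shell does not interpret characters such as &):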

curl 'http://cm-server.open.hadoop:8983/solr/admin/collections?action=OVERSEERSTATUS'
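The same request can also be built programmatically. Below is a minimal Python sketch using only the standard library; the helper name `collections_url` is hypothetical, and the base URL is simply the host used throughout this article. It only builds the URL and sends nothing, and because `urlencode` escapes every parameter, no shell quoting is involved.

```python
from urllib.parse import urlencode

# Base URL of the Solr node used in this article; adjust it for your cluster.
SOLR_BASE = "http://cm-server.open.hadoop:8983/solr"


def collections_url(action, base=SOLR_BASE, **params):
    """Build a Collections API URL (hypothetical helper; sends no request)."""
    query = {"action": action, **params}
    # urlencode percent-escapes each value and joins the pairs with '&',
    # so the '&' problem described above never reaches a shell.
    return f"{base}/admin/collections?{urlencode(query)}"


if __name__ == "__main__":
    print(collections_url("OVERSEERSTATUS"))
    print(collections_url("DELETE", name="testCollection"))
```

To actually send a request, the URL can be passed to `urllib.request.urlopen` and the body decoded with `json.load`.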

Output:

{

"responseHeader":{

"status":0,

"QTime":30},

"leader":"32865649576706044-cm-agent2.open.hadoop:8983_solr",

"overseer_queue_size":0,

"overseer_work_queue_size":0,

"overseer_collection_queue_size":2,

"overseer_operations":[

"leader",{

"requests":6,

"errors":0,

"avgRequestsPerSecond":2.57440842620532E-5,

"5minRateRequestsPerSecond":1.4821969375E-313,

"15minRateRequestsPerSecond":3.4667899914971236E-113,

"avgTimePerRequest":0.0,

"medianRequestTime":0.0,

"75thPcRequestTime":0.0,

"95thPcRequestTime":0.0,

"99thPcRequestTime":0.0,

"999thPcRequestTime":0.0},

"am_i_leader",{

"requests":77675,

"errors":0,

"avgRequestsPerSecond":0.3332758672775468,

"5minRateRequestsPerSecond":0.332219159070725,

"15minRateRequestsPerSecond":0.3329514668799583,

"avgTimePerRequest":0.7559298242068595,

"medianRequestTime":0.751021,

"75thPcRequestTime":0.792274,

"95thPcRequestTime":0.868521,

"99thPcRequestTime":1.798176,

"999thPcRequestTime":1.798176},

"update_state",{

"requests":16,

"errors":0,

"avgRequestsPerSecond":6.865037365844071E-5,

"5minRateRequestsPerSecond":1.4821969375E-313,

"15minRateRequestsPerSecond":5.54073328332591E-113,

"avgTimePerRequest":0.0,

"medianRequestTime":0.0,

"75thPcRequestTime":0.0,

"95thPcRequestTime":0.0,

"99thPcRequestTime":0.0,

"999thPcRequestTime":0.0},

"state",{

"requests":9,

"errors":0,

"avgRequestsPerSecond":3.861647140071751E-5,

"5minRateRequestsPerSecond":1.4821969375E-313,

"15minRateRequestsPerSecond":4.855821033878966E-113,

"avgTimePerRequest":0.0,

"medianRequestTime":0.0,

"75thPcRequestTime":0.0,

"95thPcRequestTime":0.0,

"99thPcRequestTime":0.0,

"999thPcRequestTime":0.0},

"downnode",{

"requests":4,

"errors":0,

"avgRequestsPerSecond":1.7162589499571203E-5,

"5minRateRequestsPerSecond":1.4821969375E-313,

"15minRateRequestsPerSecond":2.754997131637382E-113,

"avgTimePerRequest":0.0,

"medianRequestTime":0.0,

"75thPcRequestTime":0.0,

"95thPcRequestTime":0.0,

"99thPcRequestTime":0.0,

"999thPcRequestTime":0.0}],

"collection_operations":[

"am_i_leader",{

"requests":116481,

"errors":0,

"avgRequestsPerSecond":0.4997787271209085,

"5minRateRequestsPerSecond":0.49895200792489797,

"15minRateRequestsPerSecond":0.4994161420804401,

"avgTimePerRequest":0.7589474647429486,

"medianRequestTime":0.717739,

"75thPcRequestTime":0.804441,

"95thPcRequestTime":0.959584,

"99thPcRequestTime":1.050389,

"999thPcRequestTime":4.35169},

"overseerstatus",{

"requests":1,

"errors":0,

"avgRequestsPerSecond":0.019957698731501362,

"5minRateRequestsPerSecond":0.17214159528501158,

"15minRateRequestsPerSecond":0.1902458849001428,

"avgTimePerRequest":26.280649,

"medianRequestTime":26.280649,

"75thPcRequestTime":26.280649,

"95thPcRequestTime":26.280649,

"99thPcRequestTime":26.280649,

"999thPcRequestTime":26.280649}],

"overseer_queue":[],

"overseer_internal_queue":[

"peek",{

"avgRequestsPerSecond":4.290645974413372E-6,

"5minRateRequestsPerSecond":1.4821969375E-313,

"15minRateRequestsPerSecond":6.887492829093455E-114,

"avgTimePerRequest":0.0,

"medianRequestTime":0.0,

"75thPcRequestTime":0.0,

"95thPcRequestTime":0.0,

"99thPcRequestTime":0.0,

"999thPcRequestTime":0.0}],

"collection_queue":[

"remove_event",{

"avgRequestsPerSecond":0.03995121354042804,

"5minRateRequestsPerSecond":0.34428319057002316,

"15minRateRequestsPerSecond":0.3804917698002856,

"avgTimePerRequest":7.591156612692196,

"medianRequestTime":4.710148,

"75thPcRequestTime":10.559905,

"95thPcRequestTime":10.559905,

"99thPcRequestTime":10.559905,

"999thPcRequestTime":10.559905},

"peektopn_wait2000",{

"avgRequestsPerSecond":0.4997702434939512,

"5minRateRequestsPerSecond":0.4988200507736645,

"15minRateRequestsPerSecond":0.4993743057741281,

"avgTimePerRequest":1941.3253837646682,

"medianRequestTime":2000.119068,

"75thPcRequestTime":2000.138274,

"95thPcRequestTime":2000.172174,

"99thPcRequestTime":2001.102665,

"999thPcRequestTime":2001.102665}]}

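In the output above, `overseer_operations` and `collection_operations` are flat lists that alternate an operation name with its statistics object (this appears to be how Solr's default JSON writer serializes named lists). The hypothetical Python sketch below turns such a list into a dictionary, reports any operation with a non-zero `errors` count, and extracts the Overseer node from the `leader` field, assuming that field always has the `<session id>-<node_name>` shape seen here. The sample is a trimmed excerpt of the output above.

```python
import json


def pairs_to_dict(flat):
    """Convert Solr's flat ["name", {...}, "name2", {...}] list into a dict."""
    return dict(zip(flat[0::2], flat[1::2]))


def operations_with_errors(status):
    """Return {"section.operation": errors} for operations whose error count is > 0."""
    failing = {}
    for section in ("overseer_operations", "collection_operations"):
        for op, stats in pairs_to_dict(status.get(section, [])).items():
            if stats.get("errors", 0) > 0:
                failing[f"{section}.{op}"] = stats["errors"]
    return failing


def overseer_node(status):
    """Extract the node name from a leader value like "<session id>-<node_name>"."""
    # Assumption based on the output above: the session id itself contains no '-'.
    return status["leader"].split("-", 1)[1]


# Trimmed excerpt of the OVERSEERSTATUS output shown above.
SAMPLE = json.loads("""
{"leader": "32865649576706044-cm-agent2.open.hadoop:8983_solr",
 "overseer_operations": ["leader", {"requests": 6, "errors": 0},
                         "am_i_leader", {"requests": 77675, "errors": 0}],
 "collection_operations": ["overseerstatus", {"requests": 1, "errors": 0}]}
""")

if __name__ == "__main__":
    ops = pairs_to_dict(SAMPLE["overseer_operations"])
    print(ops["am_i_leader"]["requests"])   # 77675
    print(operations_with_errors(SAMPLE))   # {}
    print(overseer_node(SAMPLE))            # cm-agent2.open.hadoop:8983_solr
```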
2. Checking Cluster Status (CLUSTERSTATUS)

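The CLUSTERSTATUS action returns the status of the cluster, including collections, shards, replicas, configuration names, collection aliases, and cluster properties.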

/admin/collections?action=CLUSTERSTATUS

Run it directly in the browser's address bar:

http://cm-server.open.hadoop:8983/solr/admin/collections?action=CLUSTERSTATUS

Or run it from a terminal (enclose the URL after curl in single or double quotes):

curl 'http://cm-server.open.hadoop:8983/solr/admin/collections?action=CLUSTERSTATUS'

Output:

{

"responseHeader":{

"status":0,

"QTime":3},

"cluster":{

"collections":{

"ranger_audits":{

"pullReplicas":"0",

"replicationFactor":"2",

"shards":{

"shard1":{

"range":"80000000-d554ffff",

"state":"active",

"replicas":{

"core_node3":{

"core":"ranger_audits_shard1_replica_n1",

"base_url":"http://cm-server.open.hadoop:8983/solr",

"node_name":"cm-server.open.hadoop:8983_solr",

"state":"active",

"type":"NRT",

"force_set_state":"false",

"leader":"true"},

"core_node5":{

"core":"ranger_audits_shard1_replica_n2",

"base_url":"http://cm-agent2.open.hadoop:8983/solr",

"node_name":"cm-agent2.open.hadoop:8983_solr",

"state":"active",

"type":"NRT",

"force_set_state":"false"}}},

"shard2":{

"range":"d5550000-2aa9ffff",

"state":"active",

"replicas":{

"core_node7":{

"core":"ranger_audits_shard2_replica_n4",

"base_url":"http://cm-agent1.open.hadoop:8983/solr",

"node_name":"cm-agent1.open.hadoop:8983_solr",

"state":"active",

"type":"NRT",

"force_set_state":"false"},

"core_node9":{

"core":"ranger_audits_shard2_replica_n6",

"base_url":"http://cm-server.open.hadoop:8983/solr",

"node_name":"cm-server.open.hadoop:8983_solr",

"state":"active",

"type":"NRT",

"force_set_state":"false",

"leader":"true"}}},

"shard3":{

"range":"2aaa0000-7fffffff",

"state":"active",

"replicas":{

"core_node11":{

"core":"ranger_audits_shard3_replica_n8",

"base_url":"http://cm-agent2.open.hadoop:8983/solr",

"node_name":"cm-agent2.open.hadoop:8983_solr",

"state":"active",

"type":"NRT",

"force_set_state":"false",

"leader":"true"},

"core_node12":{

"core":"ranger_audits_shard3_replica_n10",

"base_url":"http://cm-agent1.open.hadoop:8983/solr",

"node_name":"cm-agent1.open.hadoop:8983_solr",

"state":"active",

"type":"NRT",

"force_set_state":"false"}}}},

"router":{"name":"compositeId"},

"maxShardsPerNode":"3",

"autoAddReplicas":"false",

"nrtReplicas":"1",

"tlogReplicas":"0",

"znodeVersion":56,

"configName":"ranger_audits"}},

"properties":{"urlScheme":"http"},

"live_nodes":["cm-agent1.open.hadoop:8983_solr",

"cm-agent2.open.hadoop:8983_solr",

"cm-server.open.hadoop:8983_solr"]}}

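The output shows each shard's hash range and state, the state and type of every replica, and which replica is the shard leader.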
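A hypothetical Python sketch of reading this output: for each shard of a collection it collects the hash range, the shard state, the leader core, and any unhealthy replicas. Treating a replica as unhealthy when its state is not `active` or its node is missing from `live_nodes` is a client-side choice made for this sketch, not a Solr rule. The sample is a trimmed excerpt (shard1 only) of the output above.

```python
def shard_summary(cluster_status, collection):
    """Return {shard: (hash range, shard state, leader core, unhealthy cores)}."""
    cluster = cluster_status["cluster"]
    live = set(cluster["live_nodes"])
    summary = {}
    for shard_name, shard in cluster["collections"][collection]["shards"].items():
        replicas = list(shard["replicas"].values())
        # In the output above "leader" is the string "true" and is absent on followers.
        leader = next((r["core"] for r in replicas if r.get("leader") == "true"), None)
        unhealthy = [r["core"] for r in replicas
                     if r["state"] != "active" or r["node_name"] not in live]
        summary[shard_name] = (shard["range"], shard["state"], leader, unhealthy)
    return summary


# Trimmed excerpt of the CLUSTERSTATUS output shown above.
SAMPLE = {"cluster": {
    "collections": {"ranger_audits": {"shards": {
        "shard1": {"range": "80000000-d554ffff", "state": "active", "replicas": {
            "core_node3": {"core": "ranger_audits_shard1_replica_n1",
                           "node_name": "cm-server.open.hadoop:8983_solr",
                           "state": "active", "leader": "true"},
            "core_node5": {"core": "ranger_audits_shard1_replica_n2",
                           "node_name": "cm-agent2.open.hadoop:8983_solr",
                           "state": "active"}}}}}},
    "live_nodes": ["cm-agent1.open.hadoop:8983_solr",
                   "cm-agent2.open.hadoop:8983_solr",
                   "cm-server.open.hadoop:8983_solr"]}}

if __name__ == "__main__":
    for shard, info in shard_summary(SAMPLE, "ranger_audits").items():
        print(shard, info)
```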

3. Listing Collections (LIST)

Returns the names of the collections in the cluster.

/admin/collections?action=LIST

Run it directly in the browser's address bar:

http://cm-server.open.hadoop:8983/solr/admin/collections?action=LIST

Or run it from a terminal (enclose the URL after curl in single or double quotes):

curl 'http://cm-server.open.hadoop:8983/solr/admin/collections?action=LIST'

Output:

{

"responseHeader":{

"status":0,

"QTime":0},

"collections":["ranger_audits"]}

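One practical use of LIST is checking whether a collection exists before creating or deleting it. A minimal Python sketch, where `list_collections` is a hypothetical helper that parses the response body shown above:

```python
import json


def list_collections(response_text):
    """Parse a LIST response body and return the collection names."""
    data = json.loads(response_text)
    status = data["responseHeader"]["status"]
    if status != 0:
        raise RuntimeError(f"LIST failed with status {status}")
    return data.get("collections", [])


# The LIST response shown above.
SAMPLE = '{"responseHeader":{"status":0,"QTime":0},"collections":["ranger_audits"]}'

if __name__ == "__main__":
    names = list_collections(SAMPLE)
    print(names)                       # ['ranger_audits']
    print("testCollection" in names)   # False
```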
4. Deleting a Collection (DELETE)

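Run it directly in the browser's address bar:

http://cm-server.open.hadoop:8983/solr/admin/collections?action=DELETE&name=testCollection

Or run it from a terminal (enclose the URL after curl in single or double quotes; otherwise the shell treats & as a background operator):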

curl 'http://cm-server.open.hadoop:8983/solr/admin/collections?action=DELETE&name=testCollection'

Output:

{

"responseHeader":{

"status":0,

"QTime":825},

"success":{

"cm-agent2.open.hadoop:8983_solr":{

"responseHeader":{

"status":0,

"QTime":20}},

"cm-server.open.hadoop:8983_solr":{

"responseHeader":{

"status":0,

"QTime":37}},

"cm-agent1.open.hadoop:8983_solr":{

"responseHeader":{

"status":0,

"QTime":49}},

"cm-agent1.open.hadoop:8983_solr":{

"responseHeader":{

"status":0,

"QTime":50}}}}

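The response reports one entry per node under `success`. The hypothetical Python sketch below treats the delete as successful only if the top-level status and every per-node status are 0; it also assumes (not confirmed by the output above) that per-node errors would be reported under a `failure` key. Note that the output contains the key `cm-agent1.open.hadoop:8983_solr` twice; Python's `json` module keeps only the last value for a duplicate key, so one of those entries is silently dropped.

```python
import json


def delete_succeeded(resp):
    """Return True if the top-level and all per-node statuses are 0."""
    header_ok = resp.get("responseHeader", {}).get("status") == 0
    # Assumption: per-node errors would appear under a "failure" key.
    has_failure = bool(resp.get("failure"))
    nodes_ok = all(
        node.get("responseHeader", {}).get("status") == 0
        for node in resp.get("success", {}).values()
    )
    return header_ok and not has_failure and nodes_ok


# Trimmed excerpt of the DELETE output above, including the duplicated key.
SAMPLE = json.loads("""
{"responseHeader": {"status": 0, "QTime": 825},
 "success": {
   "cm-agent2.open.hadoop:8983_solr": {"responseHeader": {"status": 0, "QTime": 20}},
   "cm-agent1.open.hadoop:8983_solr": {"responseHeader": {"status": 0, "QTime": 49}},
   "cm-agent1.open.hadoop:8983_solr": {"responseHeader": {"status": 0, "QTime": 50}}}}
""")

if __name__ == "__main__":
    print(delete_succeeded(SAMPLE))   # True
    print(len(SAMPLE["success"]))     # 2: the duplicated key collapsed into one entry
```

If every per-node entry matters, `json.loads` accepts an `object_pairs_hook` that receives all key/value pairs, duplicates included.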
5. Reloading a Collection (RELOAD)

The Collections API has no LOAD action.

Cores that have been unloaded are not brought back by RELOAD.

curl 'http://localhost:8080/solr/admin/collections?action=RELOAD&name=collection1&indent=true'

Parameter: name: the name of the collection to reload. Required.
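RELOAD is commonly needed after a configuration change, often for several collections at once. A hypothetical Python sketch that builds one RELOAD URL per collection name (for example, the names returned by LIST); it sends nothing, and the host is the one used in the example above.

```python
from urllib.parse import urlencode


def reload_urls(base_url, names):
    """Return one RELOAD URL per collection name (hypothetical helper; sends nothing)."""
    return [
        f"{base_url}/admin/collections?" + urlencode({"action": "RELOAD", "name": name})
        for name in names
    ]


if __name__ == "__main__":
    for url in reload_urls("http://localhost:8080/solr", ["collection1"]):
        print(url)
```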

6. Creating a Shard (CREATESHARD)

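(1) This API can only create shards for collections that use the implicit router (that is, collections created with router.name=implicit). It adds a new shard with the given name to an existing implicit collection.

(2) For collections created with the compositeId router (router.name=compositeId), use SPLITSHARD instead.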

/admin/collections?action=CREATESHARD&shard=shardName&collection=name

Example:

Create a shard named test-add-shard in the testCollection collection (which must use the implicit router):

curl 'http://cm-server.open.hadoop:8983/solr/admin/collections?action=CREATESHARD&collection=testCollection&shard=test-add-shard'

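Parameters:

collection: the name of the collection in which to create the shard. Required.

shard: the name of the shard to create. Required.

createNodeSet: defines the nodes across which the new shard's replicas are spread. If omitted, CREATESHARD creates the shard's replicas across all live Solr nodes. The format is a comma-separated list of node_name values, for example localhost:8983_solr,localhost:8984_solr,localhost:8985_solr.

property.name=value: sets the core property name to value. For the supported properties and values, see the core.properties section of the Solr Reference Guide.

Response: the output contains the status of the request. If the status is anything other than "success", an error message explains why the request failed.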
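The optional parameters are easy to mis-quote by hand, since node names contain `:` and the node list uses `,`. A hypothetical Python sketch that assembles a CREATESHARD URL with `createNodeSet` and `property.*` parameters; the helper name and the `dataDir` path in the usage are illustrative assumptions.

```python
from urllib.parse import urlencode


def createshard_url(base_url, collection, shard, nodes=None, properties=None):
    """Build a CREATESHARD URL (hypothetical helper; sends no request)."""
    params = {"action": "CREATESHARD", "collection": collection, "shard": shard}
    if nodes:
        # createNodeSet is a comma-separated list of node_name values.
        params["createNodeSet"] = ",".join(nodes)
    for key, value in (properties or {}).items():
        # Each core property is passed as property.<name>=<value>.
        params[f"property.{key}"] = value
    return f"{base_url}/admin/collections?{urlencode(params)}"


if __name__ == "__main__":
    print(createshard_url(
        "http://cm-server.open.hadoop:8983/solr", "testCollection", "test-add-shard",
        nodes=["cm-agent1.open.hadoop:8983_solr", "cm-agent2.open.hadoop:8983_solr"],
        properties={"dataDir": "/data/solr/test-add-shard"},  # hypothetical path
    ))
```

`urlencode` percent-escapes `:` as `%3A` and `,` as `%2C`; Solr decodes them back when it reads the parameters.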

7. Splitting a Shard (SPLITSHARD)

(1) Command

curl 'http://cm-server.open.hadoop:8983/solr/admin/collections?action=SPLITSHARD&collection=testCollection&shard=shard1&indent=true'

Parameters:

collection: the name of the collection. Required.

shard: the ID of the shard to split. The shard must exist in the collection.

Output:

{

"responseHeader":{

"status":0,

"QTime":6079},

"success":{

"cm-server.open.hadoop:8983_solr":{

"responseHeader":{

"status":0,

"QTime":623},

"core":"testCollection_shard1_0_replica_n9"},

"cm-server.open.hadoop:8983_solr":{

"responseHeader":{

"status":0,

"QTime":499},

"core":"testCollection_shard1_1_replica_n10"},

"cm-server.open.hadoop:8983_solr":{

"responseHeader":{

"status":0,

"QTime":1}},

"cm-server.open.hadoop:8983_solr":{

"responseHeader":{

"status":0,

"QTime":1001}},

"cm-server.open.hadoop:8983_solr":{

"responseHeader":{

"status":0,

"QTime":113}},

"cm-server.open.hadoop:8983_solr":{

"responseHeader":{

"status":0,

"QTime":0},

"core":"testCollection_shard1_0_replica_n9",

"status":"EMPTY_BUFFER"},

"cm-server.open.hadoop:8983_solr":{

"responseHeader":{

"status":0,

"QTime":0},

"core":"testCollection_shard1_1_replica_n10",

"status":"EMPTY_BUFFER"},

"cm-agent1.open.hadoop:8983_solr":{

"responseHeader":{

"status":0,

"QTime":522},

"core":"testCollection_shard1_0_replica0"},

"cm-agent2.open.hadoop:8983_solr":{

"responseHeader":{

"status":0,

"QTime":502},

"core":"testCollection_shard1_1_replica0"},

"http://cm-server.open.hadoop:8983/solr/testCollection_shard1_replica_n1/":{

"responseHeader":{

"status":0,

"QTime":19}}}}

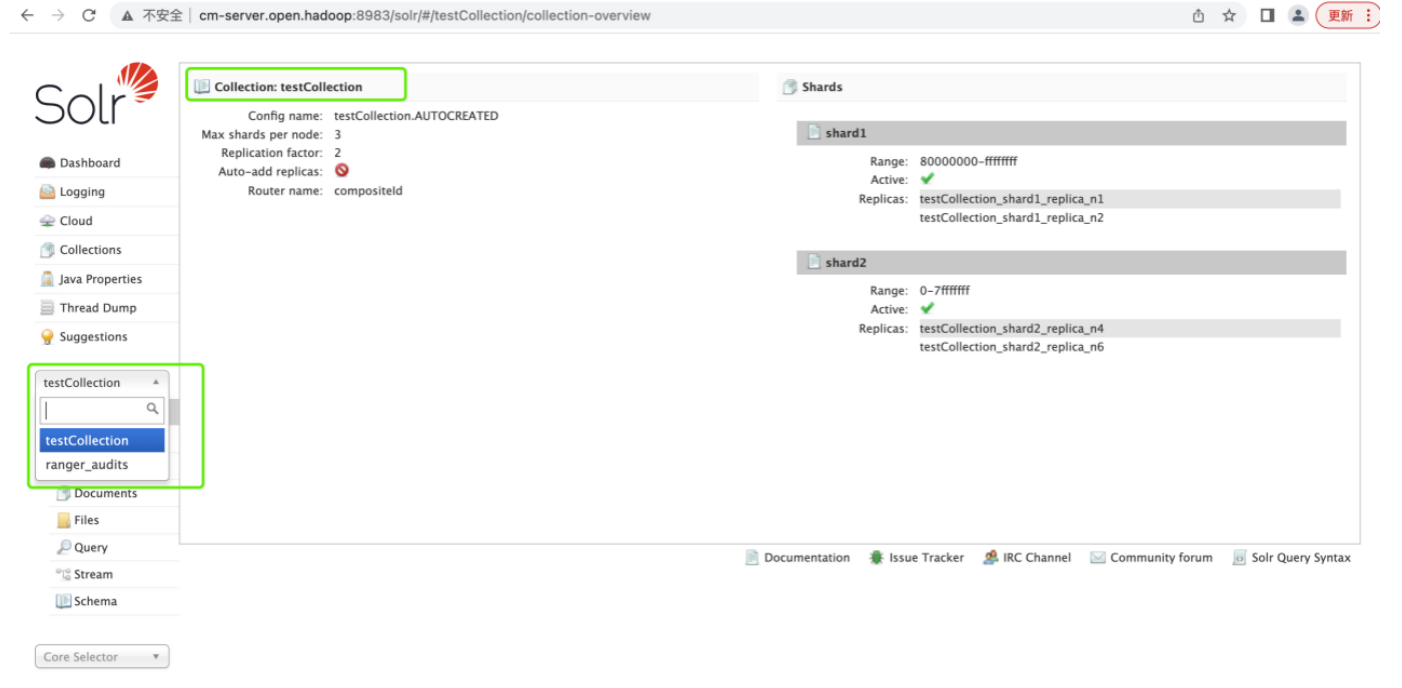

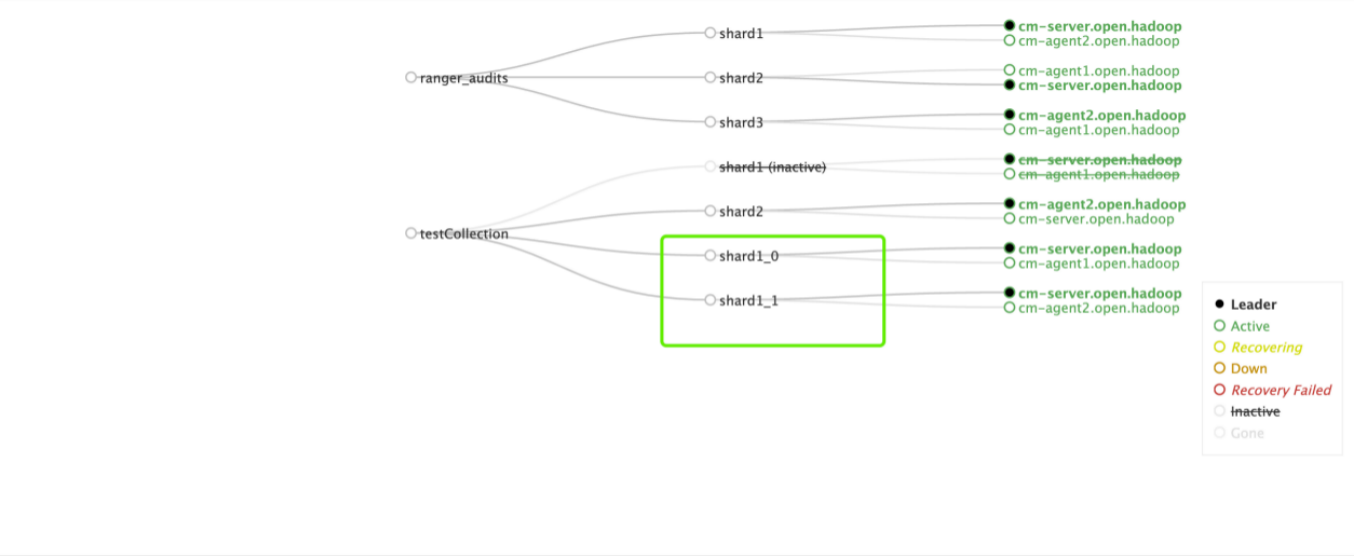

结果如图:

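(2) How it works

1) SPLITSHARD cannot be used on clusters with custom hashing, because such clusters do not have an explicit hash range. It is intended only for collections that use the plain or compositeId router.

2) The command splits the specified shard into two new shards by dividing its hash range in two, and distributes the documents of the parent shard (the shard being split) between them according to the new shards' hash ranges.

3) The new shards are named shardX_0 and shardX_1, indicating that they were split from shardX.

4) Once the new shards have been created successfully, they are activated immediately and the parent shard becomes inactive: new read and write requests are no longer sent to the parent shard but are routed to the new sub-shards instead.

5) This allows seamless splitting with no downtime: the parent shard's data is not deleted, and the parent shard keeps serving read and write requests until the split completes.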
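To make point 2) concrete, here is a simplified Python sketch that halves a shard's hash range, using the shard1 range from the CLUSTERSTATUS output above. Solr stores range bounds as signed 32-bit integers printed in hex (so 80000000 is the minimum value and ranges such as d5550000-2aa9ffff wrap through zero). This is a plain midpoint split for illustration only, not Solr's own partitioning code, which may round sub-range boundaries differently; check the real ranges with CLUSTERSTATUS after a split.

```python
def to_signed32(value):
    """Interpret an unsigned 32-bit value as a signed int, as Solr's hash ranges do."""
    return value - (1 << 32) if value >= (1 << 31) else value


def to_hex32(value):
    """Format a signed 32-bit int the way Solr prints ranges (8 lowercase hex digits)."""
    return format(value & 0xFFFFFFFF, "08x")


def split_shard_range(shard, range_str):
    """Simplified midpoint split of a hash range into shard_0 and shard_1."""
    lo_hex, hi_hex = range_str.split("-")
    lo, hi = to_signed32(int(lo_hex, 16)), to_signed32(int(hi_hex, 16))
    if hi <= lo:
        raise ValueError(f"range {range_str} cannot be split")
    mid = lo + (hi - lo) // 2
    return {
        f"{shard}_0": f"{to_hex32(lo)}-{to_hex32(mid)}",
        f"{shard}_1": f"{to_hex32(mid + 1)}-{to_hex32(hi)}",
    }


if __name__ == "__main__":
    print(split_shard_range("shard1", "80000000-d554ffff"))
    # {'shard1_0': '80000000-aaaa7fff', 'shard1_1': 'aaaa8000-d554ffff'}
```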

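(3) Notes

1) After the split, delete the original (now inactive) shard with the DELETESHARD command so that the data is not stored twice; alternatively, unload the original shard's cores with the UNLOAD command.

2) The core.properties file in the original shard's directory is then renamed to core.properties.unloaded (an "unloaded" marker), and the index directory under data, which held the index, is deleted. This indirectly confirms that the data has been split and moved.

3) SolrCloud does not dynamically migrate data that has already been indexed into other shards. Splitting shards is one way to scale out SolrCloud, but it only redistributes the data whose routing hash range belongs to the shard being split; the other shards are unaffected.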

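8. Deleting All Replicas on a Node (DELETENODE)

Deletes every replica of every collection hosted on the node. Note that the node itself remains a live node after this operation.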

/admin/collections?action=DELETENODE&node=nodeName

curl 'http://cm-server.open.hadoop:8983/solr/admin/collections?action=DELETENODE&node=nodeName'

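DELETENODE parameters:

node: the node whose replicas are deleted, given as a node_name such as cm-agent1.open.hadoop:8983_solr. Required.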
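Because DELETENODE removes every replica on the node, it is worth checking the CLUSTERSTATUS output first. The hypothetical Python sketch below lists the replicas hosted on a node and refuses to build the DELETENODE URL if the node is not in `live_nodes` (a guard against typos) or if the node holds every replica of some shard, since that shard would lose all its data. Both checks are client-side choices made for this sketch, not documented Solr behavior. The sample is a trimmed excerpt of the CLUSTERSTATUS output shown earlier.

```python
from urllib.parse import urlencode


def replicas_on_node(cluster_status, node):
    """List (collection, shard, core, is_only_copy) for every replica hosted on node."""
    found = []
    for coll_name, coll in cluster_status["cluster"]["collections"].items():
        for shard_name, shard in coll["shards"].items():
            replicas = list(shard["replicas"].values())
            on_node = [r for r in replicas if r["node_name"] == node]
            for r in on_node:
                # True when every replica of this shard lives on the node being cleared.
                found.append((coll_name, shard_name, r["core"], len(on_node) == len(replicas)))
    return found


def deletenode_url(base_url, cluster_status, node):
    """Build a DELETENODE URL after the client-side checks described above."""
    if node not in cluster_status["cluster"]["live_nodes"]:
        raise ValueError(f"{node} is not listed in live_nodes")
    only_copies = [r for r in replicas_on_node(cluster_status, node) if r[3]]
    if only_copies:
        raise ValueError(f"would remove the only replica(s): {only_copies}")
    return f"{base_url}/admin/collections?" + urlencode({"action": "DELETENODE", "node": node})


# Trimmed excerpt of the CLUSTERSTATUS output shown earlier.
SAMPLE = {"cluster": {
    "collections": {"ranger_audits": {"shards": {
        "shard1": {"replicas": {
            "core_node3": {"core": "ranger_audits_shard1_replica_n1",
                           "node_name": "cm-server.open.hadoop:8983_solr"},
            "core_node5": {"core": "ranger_audits_shard1_replica_n2",
                           "node_name": "cm-agent2.open.hadoop:8983_solr"}}}}}},
    "live_nodes": ["cm-agent1.open.hadoop:8983_solr",
                   "cm-agent2.open.hadoop:8983_solr",
                   "cm-server.open.hadoop:8983_solr"]}}

if __name__ == "__main__":
    node = "cm-agent2.open.hadoop:8983_solr"
    print(replicas_on_node(SAMPLE, node))
    print(deletenode_url("http://cm-server.open.hadoop:8983/solr", SAMPLE, node))
```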