hive元数据迁移

一、在新集群中创建hive数据库,作为新集群中的元数据库。

注意点:创建hive数据库时注意用户和用户的权限及使用的编码格式一致。

查看旧集群中角色权限和编码格式,在新的hive元数据库中设置相同的角色权限和编码格式。

use mysql; select * from user where user='root'; show grants for root@localhost; show variables like 'character%'

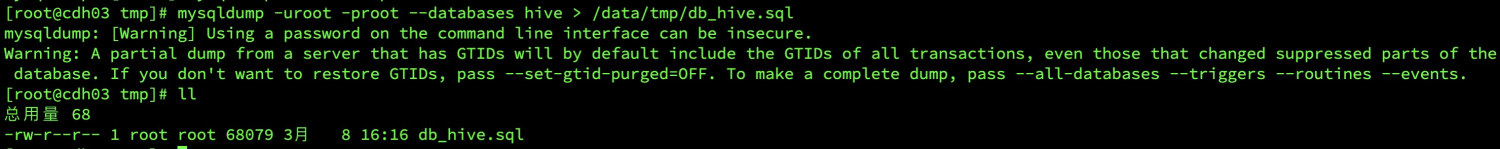

二、旧集群中获取hive元数据库,使用mysql命令将其导出为一个sql文件。

mysqldump -uroot -proot --databases hive > /data/tmp/db_hive.sql

三、将获取到的sql文件复制到新集群中,在新的hive元数据库中执行。

mysql> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | amon | | dataxweb | | hive | | hue | | metastore | | mutong | | mysql | | nav | | navms | | oozie | | performance_schema | | rman | | rmon | | scm | | sentry | | sys | +--------------------+ 17 rows in set (0.00 sec) mysql> use hive; Database changed mysql> show tables; Empty set (0.00 sec) mysql> source /data/tmp/db_hive.sql

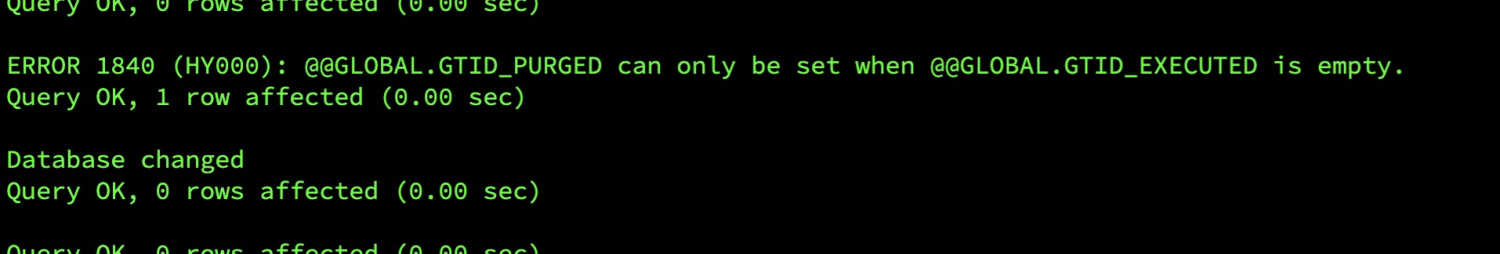

出现错误:ERROR 1840 (HY000): @@GLOBAL.GTID_PURGED can only be set when @@GLOBAL.GTID_EXECUTED is empty.

因为两个集群中都有gtid的信息,导致数据在迁移过程中报错。

解决方法:在dump生成文件的时候,使用--set-gtid-purged=off参数,忽略gtid信息。

mysqldump -uroot -proot --set-gtid-purged=off --databases hive > /data/tmp/db_hive.sql

上面的步骤重新进行一遍。运行成功。

四、修改cdh新集群中涉及到hdfs路径的信息。

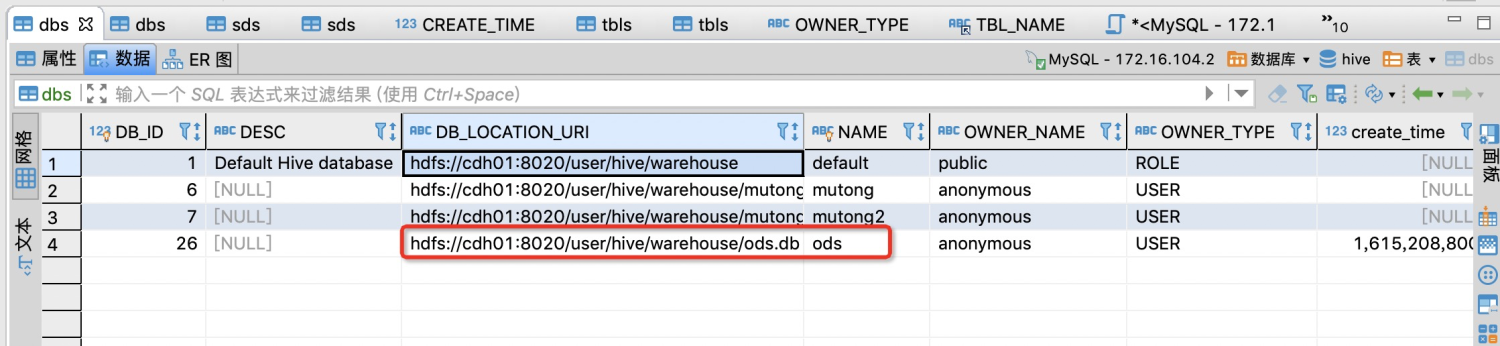

涉及到location字段hive数据库和hive数据表dbs/sds的更新。

use hive; select * from dbs d2 select * from sds s ------备份好元数据-------- update metastore.dbs set DB_LOCATION_URI = REPLACE(DB_LOCATION_URI,"hadoop:9000","cdh01:8020") ; update metastore.sds set LOCATION = REPLACE(LOCATION,"hadoop:9000","cdh01:8020") ;

五、在cdh页面配置中修改成自己刚创建的hive元数据库。

注意点:cdh元数据库涉及到cdh的表,需要添加到刚创建的hive元数据库中,否则会报错。然后重启cdh集群。

use hive CREATE TABLE `cdh_version` ( `VER_ID` bigint(20) NOT NULL, `SCHEMA_VERSION` varchar(127) NOT NULL, `VERSION_COMMENT` varchar(255) DEFAULT NULL, PRIMARY KEY (`VER_ID`) ) ENGINE=InnoDB DEFAULT CHARSET=latin1; CREATE TABLE `metastore_db_properties` ( `PROPERTY_KEY` varchar(255) NOT NULL, `PROPERTY_VALUE` varchar(1000) NOT NULL, `DESCRIPTION` varchar(1000) DEFAULT NULL, PRIMARY KEY (`PROPERTY_KEY`) ) ENGINE=InnoDB DEFAULT CHARSET=latin1;

需要导入对应的版本信息:

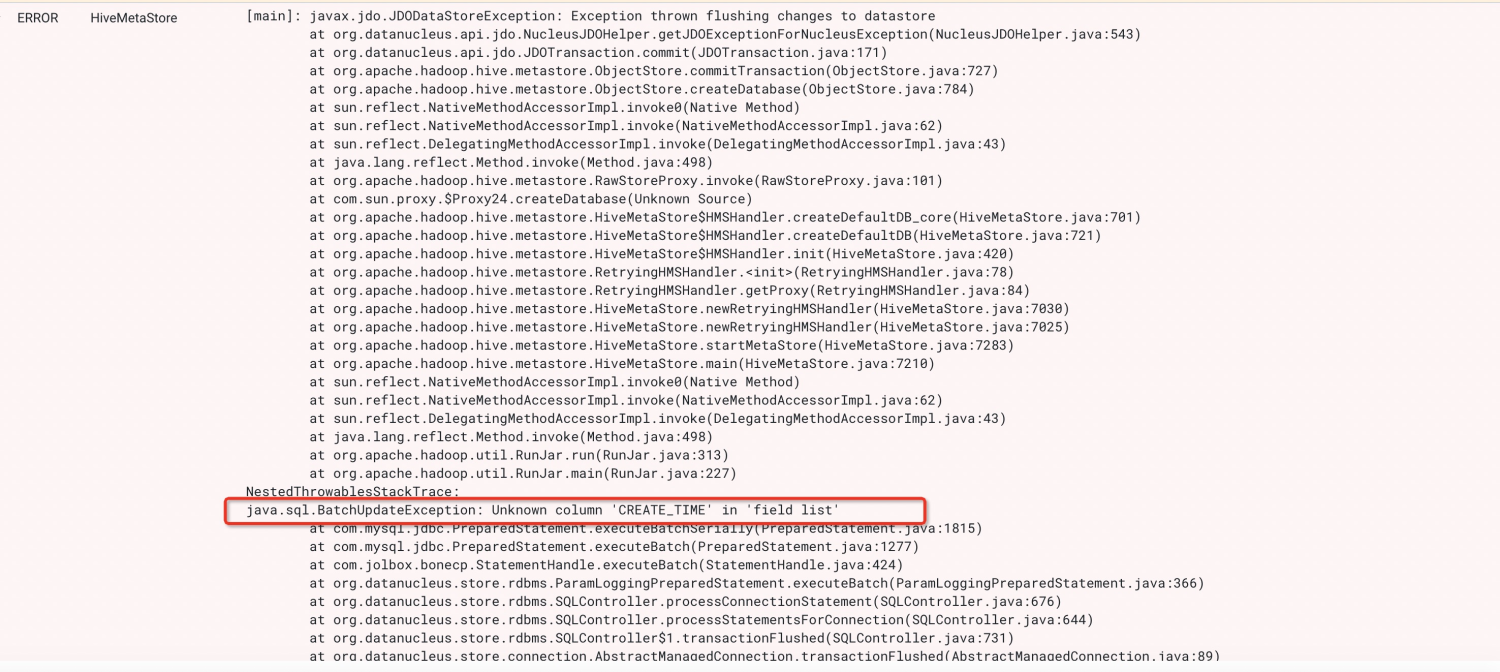

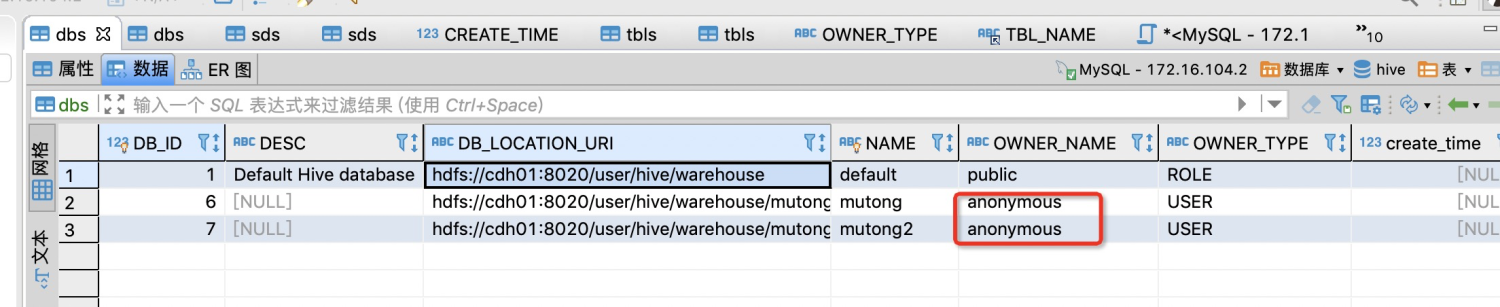

新建的hive元数据库dbs表中缺少CREATE_TIME字段,tbls中缺少owner_type

ALTER TABLE hive.dbs ADD create_time INT(11) NULL; ALTER TABLE hive.tbls ADD owner_type varchar(10) null after owner;

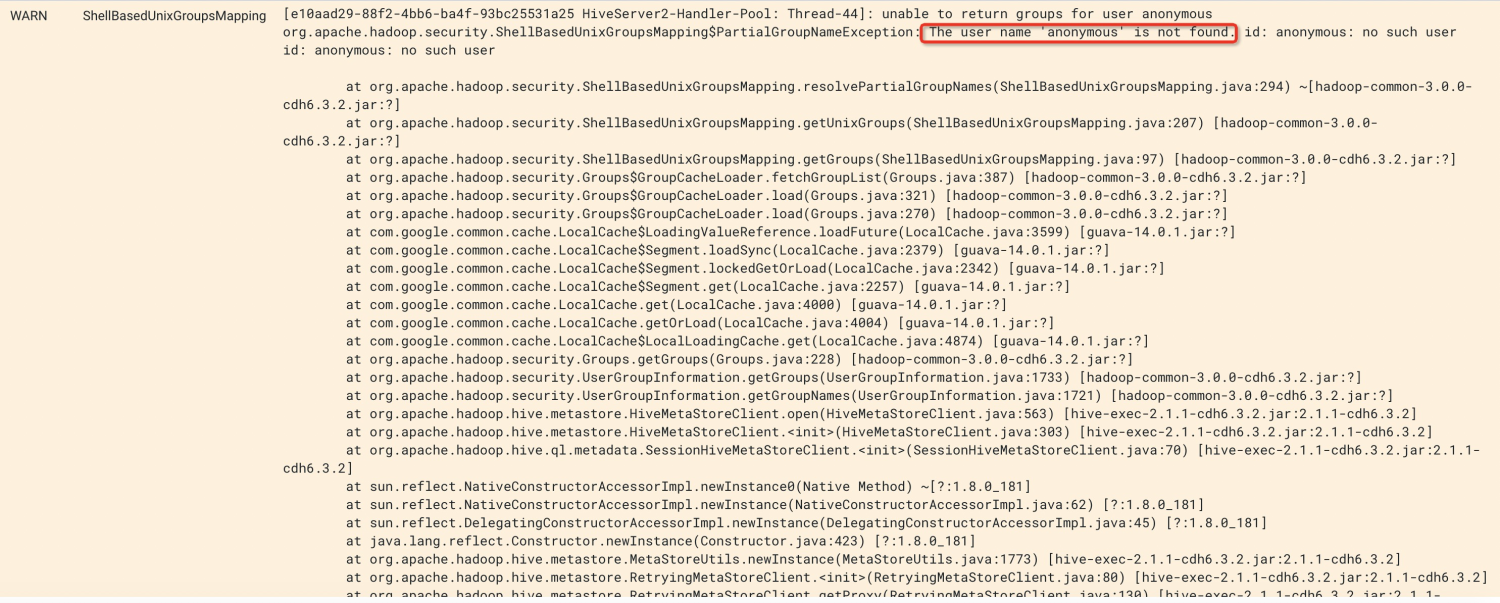

有warn信息报错:

主要是mysqldump中获取的dbs表owner_name字段中anonymous用户未找到,暂不影响使用。

使用beeline连接hive进行测试;

beeline> !connect jdbc:hive2://172.16.104.2:10000 Connecting to jdbc:hive2://172.16.104.2:10000 Enter username for jdbc:hive2://172.16.104.2:10000: Enter password for jdbc:hive2://172.16.104.2:10000: Connected to: Apache Hive (version 2.1.1-cdh6.3.2) Driver: Hive JDBC (version 2.1.1-cdh6.3.2) Transaction isolation: TRANSACTION_REPEATABLE_READ 0: jdbc:hive2://172.16.104.2:10000> show databases; INFO : Compiling command(queryId=hive_20210308210532_3326f98f-a2c7-4531-9762-d59638d3767e): show databases INFO : Semantic Analysis Completed INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:database_name, type:string, comment:from deserializer)], properties:null) INFO : Completed compiling command(queryId=hive_20210308210532_3326f98f-a2c7-4531-9762-d59638d3767e); Time taken: 0.235 seconds INFO : Executing command(queryId=hive_20210308210532_3326f98f-a2c7-4531-9762-d59638d3767e): show databases INFO : Starting task [Stage-0:DDL] in serial mode INFO : Completed executing command(queryId=hive_20210308210532_3326f98f-a2c7-4531-9762-d59638d3767e); Time taken: 0.012 seconds INFO : OK +----------------+ | database_name | +----------------+ | default | | mutong | | mutong2 | +----------------+ 3 rows selected (0.418 seconds) 0: jdbc:hive2://172.16.104.2:10000> create database ods; INFO : Compiling command(queryId=hive_20210308210640_18e24adc-24bb-495d-ae2f-a84c4c8ff2c9): create database ods INFO : Semantic Analysis Completed INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null) INFO : Completed compiling command(queryId=hive_20210308210640_18e24adc-24bb-495d-ae2f-a84c4c8ff2c9); Time taken: 0.033 seconds INFO : Executing command(queryId=hive_20210308210640_18e24adc-24bb-495d-ae2f-a84c4c8ff2c9): create database ods INFO : Starting task [Stage-0:DDL] in serial mode INFO : Completed executing command(queryId=hive_20210308210640_18e24adc-24bb-495d-ae2f-a84c4c8ff2c9); Time taken: 0.121 seconds INFO : OK No rows affected (0.197 seconds) 0: jdbc:hive2://172.16.104.2:10000> show databases; INFO : Compiling command(queryId=hive_20210308210645_5862b5c3-31fb-4950-9b23-d81401cbf9a7): show databases INFO : Semantic Analysis Completed INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:database_name, type:string, comment:from deserializer)], properties:null) INFO : Completed compiling command(queryId=hive_20210308210645_5862b5c3-31fb-4950-9b23-d81401cbf9a7); Time taken: 0.028 seconds INFO : Executing command(queryId=hive_20210308210645_5862b5c3-31fb-4950-9b23-d81401cbf9a7): show databases INFO : Starting task [Stage-0:DDL] in serial mode INFO : Completed executing command(queryId=hive_20210308210645_5862b5c3-31fb-4950-9b23-d81401cbf9a7); Time taken: 0.009 seconds INFO : OK +----------------+ | database_name | +----------------+ | default | | mutong | | mutong2 | | ods | +----------------+ 4 rows selected (0.088 seconds)

查看新建的hive元数据表,已经生成了新建的ods库,cdh中元数据库切换成功。