Greenplum数据库建立外部表加载HDFS文件实践指导

环境概述

(1) Greenplum数据库版本号

Greenplum Database 4.3.0.0POC3 build 45206(基于PostgreSQL 8.2.15)

GP软件包为:greenplum-db-4.3.0.0-SuSE10-x86_64.zip

(2) Huawei Hadoop版本号为:

root@hadoop1:~> hadoop version

Hadoop 2.2

Greenplum节点配置

(1) 要求所有Greenplum DB数据库节点都部署Hadoop客户端

方法一:可以使用IDeploy工具在GPDB节点都安装Hadoop

方法二:可以将hadoop客户端节点上面已经存在的Hadoop路径拷贝到GPDB节点上面

A. 查看Hadoop的安装路径

root@hadoopcli1:~/hadoop> pwd

/opt/hadoop/ -----Hadoop安装路径

B. 执行远程拷贝命令,将/home/hadoop/hadoop整个目录拷贝到GPDB所有节点指定目录下面(所有GP节点Hadoop目录相同):

scp -r /home/hadoop/ gpadmin@xxx.xxx.xxx.xxx:/home/gpadmin/

注:实际上GPDB使用GPHDFS协议加载Hadoop文件系统中数据文件时会用到Hadoop的一些jar包,而这些jar包基本上都位于/opt/hadoop/share/hadoop目录下面,包括HDFS、mapreduce、yarn等jar包。

(2) 要求所有Greenplum DB数据库节点都部署JDK(JDK版本号与Hadoop使用的一致)

在部署Hadoop时,已经部署了JDK

比如路径为:

root@hadoop1:~> echo $JAVA_HOME

/opt/java

可以将Hadoop环境上面已经存在的JDK安装路径拷贝到GPDB节点上面

这里,我只举例说明方法二(建议这种方法,比较省事方便):

scp -r /opt/java gpadmin@xxx.xxx.xxx.xxx:/opt/gpadmin/

(3) 配置GPDB加载Hadoop的jar包的环境变量文件(所有GPDB节点都修改)

编辑配置文件/usr/local/greenplum-db/lib/hadoop/hadoop_env.sh

将如下引入jar包脚本内容加入到hadoop_env.sh文件中:

for f in $HADOOP_HOME/share/hadoop/bdi/*.jar; do

CLASSPATH=${CLASSPATH}:$f;

done

for f in $HADOOP_HOME/share/hadoop/mapreduce/*.jar; do

CLASSPATH=${CLASSPATH}:$f;

done

for f in $HADOOP_HOME/share/hadoop/mapreduce/lib/*.jar; do

CLASSPATH=${CLASSPATH}:$f;

done

for f in $HADOOP_HOME/share/hadoop/yarn/*.jar; do

CLASSPATH=${CLASSPATH}:$f;

done

for f in $HADOOP_HOME/share/hadoop/yarn/lib/*.jar; do

CLASSPATH=${CLASSPATH}:$f;

done

for f in $HADOOP_HOME/share/hadoop/hdfs/*.jar; do

CLASSPATH=${CLASSPATH}:$f;

done

for f in $HADOOP_HOME/share/hadoop/hdfs/lib/*.jar; do

CLASSPATH=${CLASSPATH}:$f;

done

for f in $HADOOP_HOME/share/hadoop/tools/lib/*.jar; do

CLASSPATH=${CLASSPATH}:$f;

done

for f in $HADOOP_HOME/share/hadoop/common/*.jar; do

CLASSPATH=${CLASSPATH}:$f;

done

for f in $HADOOP_HOME/share/hadoop/common/lib/*.jar; do

CLASSPATH=${CLASSPATH}:$f;

done

for f in $HADOOP_HOME/hadoop/common/*.jar; do

CLASSPATH=${CLASSPATH}:$f;

done

另外将文件中的如下内容:

JAVA_PLATFORM=`CLASSPATH=${CLASSPATH} ${JAVA} -Xmx32m ${HADOOP_JAVA_PLATFORM_OPTS} org.apache.hadoop.util.PlatformName | sed -e "s/ /_/g"`

修改为:

JAVA_PLATFORM=`CLASSPATH=${CLASSPATH} ${JAVA} -Xmx32m -classpath /usr/local/greenplum-db/lib/hadoop/hadoop-auth-*.jar org.apache.hadoop.util.PlatformName | sed -e "s/ /_/g"`

(4) 添加GPDB所有节点配置环境变量

修改安装Greenplum DB数据库用户家目录下面的环境变量文件.bashrc,添加如下内容:

export JAVA_HOME=/home/gpadmin/jdk*

export CLASSPATH=$JAVA_HOME/lib/tools.jar

export HADOOP_HOME=/opt/hadoop

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

(5) 修改Master节点的/data/master/gpseg-1/postgresql.conf配置文件,添加如下内容:

gp_hadoop_home='/opt/dbadmin/hadoop/'

gp_hadoop_target_version='gphd-2.0'

(6) 重启GPDB数据库

gpstop -r

测试创建外部表

(1) Hadoop的HDFS里面准备测试数据

查看准备的全部数据文件,以及每个文件大小(共32个文件):

hadoop fs -ls -R -h /test_file/test_file_gb_1.dat-*

查看文件内容总行数:

hadoop fs -cat /test_file/test_file_gb_1.dat-* | wc -l

4174417

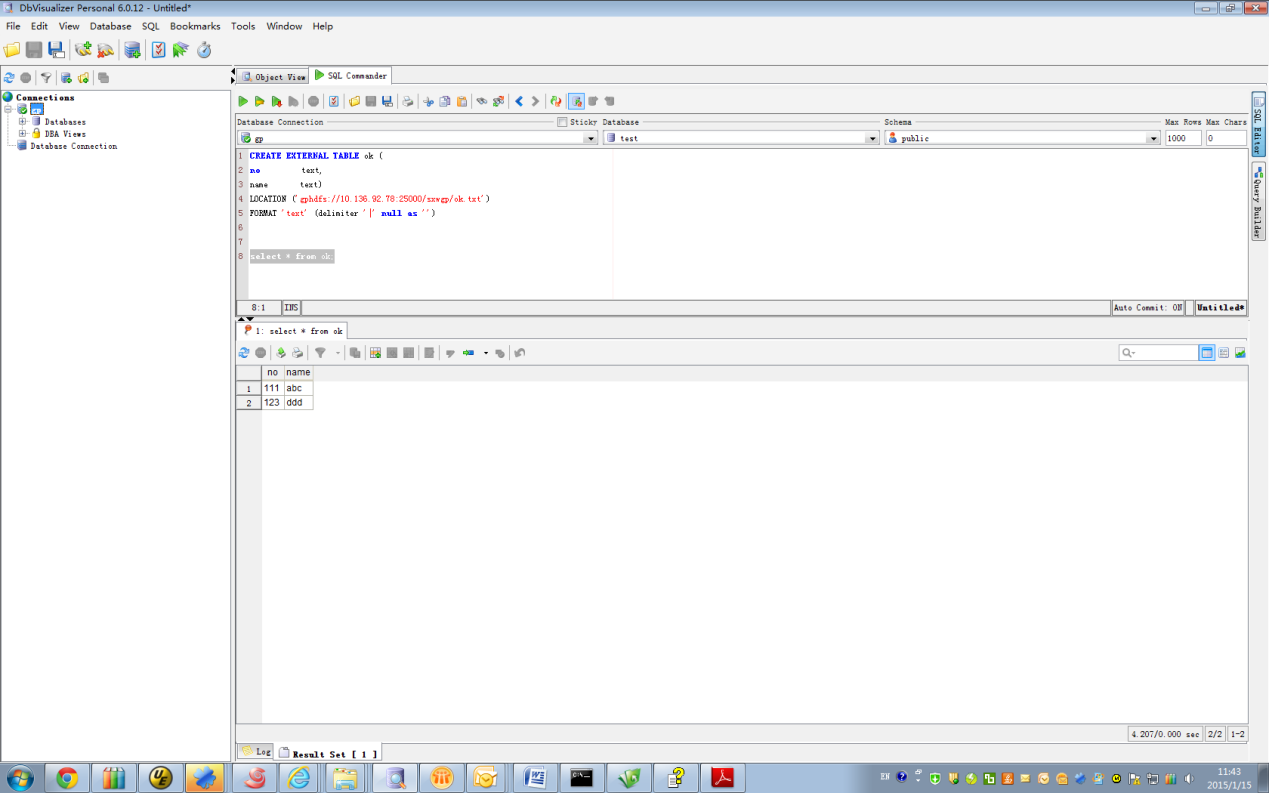

(2) 连接GPDB数据库,创建外部表

CREATE EXTERNAL TABLE test

(

cell_name text,

pro_name text,

region_name text,

city_name text,

city_id text

)

LOCATION ( 'gphdfs://[ip]:[port]/test_file/test_file_gb_1.dat-*')

FORMAT 'text' (delimiter '|')

ENCODING 'UTF8'

LOG ERRORS INTO wide_table_error SEGMENT REJECT LIMIT 1000000 ROWS ;

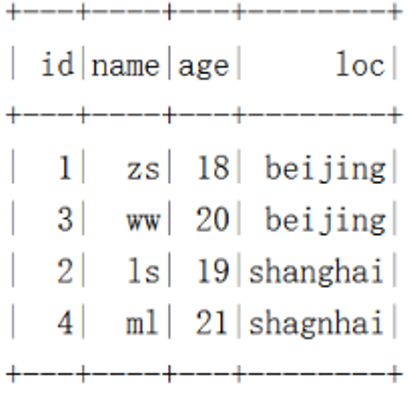

(3) 查看外部表加载的文件内容行数

select count(1) from test;

NOTICE: Found 4 data formatting errors (4 or more input rows). Rejected related input data.

count

---------

4174417 ----通过外部表可以查看到所有文件中的内容,没有丢弃数据

(1 row)

外部表数据加入到事实表

(1) 创建表

CREATE TABLE fact_test

(

cell_name text,

pro_name text,

region_name text,

city_name text

city_id text

) distributed by (city_name,city_id);

(2) 通过外部表,插入数据

noas=# insert into fact_wide_table select * from fact_test;

NOTICE: Found 4 data formatting errors (4 or more input rows). Rejected related input data.

INSERT 0 4174417 ------数据全部加载到事实表

(3) 查询

可以查询事实表的前10行看数据是否存在:

select * from fact_test limit 10;

GP外部表访问FI上的HDFS文件:

1、 安装GP(master,segment),gpadmin/gpadmin,家目录:/opt/gpadmin

2、 在GP的master,segment上安装FI的client,安装目录如:/opt/gpadmin/hadoopcli

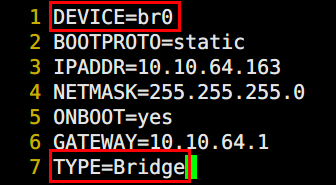

3、GP的master节点,配置:

在/opt/greenplum/master/gpseg-1目录下打开:postgresql.conf,去掉注释:

gp_external_enable_exec = on # enable external tables with EXECUTE.

gp_external_grant_privileges = on #enable create http/gpfdist for non su's

新增:

gp_hadoop_home='/opt/gpadmin/hadoopcli/HDFS/hadoop'

gp_hadoop_target_version='gphd-2.0'

4、GP的Master,segment节点,gpadmin的家目录下,修改.bashr,并source生效:

export LC_ALL=en_US.utf-8

export.utf-8

source /usr/local/greenplum-db/greenplum_path.sh

export MASTER_DATA_DIRECTORY=/opt/greenplum/master/gpseg-1

export PGPORT=5432

export PGUSER=gpadmin

export HADOOP_HOME=/opt/gpadmin/hadoopcli/HDFS/hadoop

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

export JAVA_HOME=/opt/java

export CLASSPATH=$JAVA_HOME/lib/tools.jar

#以下配置安全集群填写

export LD_LIBRARY_PATH=/opt/kerberos/lib:${LD_LIBRARY_PATH}

5、GP的master,segment节点上安装的FI客户端,修改如下目录的文件:/opt/gpadmin/hadoopcli/HDFS/hadoop/etc/hadoop/hdfs-site.xml,新增如下配置,其中hdfs.keytab是从hadoop服务端上获取并放置在该客户端目录/opt/gpadmin/hadoopcli下

<property>

<name>com.emc.greenplum.gpdb.hdfsconnector.security.user.name</name>

<value>hdfs/hadoop@HADOOP.COM</value>

</property>

<property>

<name>com.emc.greenplum.gpdb.hdfsconnector.security.user.keytab.file</name>

<value>/opt/gpadmin/hdfs.keytab</value>

</property>

6、在GP的master,segment节点上,把hadoop节点下的/etc/krb5.conf,拷贝覆盖操作系统的/etc下的krb5.conf

注意:GP上所有配置生效后,重启GP。

经过以上6步骤,可以在GP上建立外部表,并在GP上可以查询到:

FAQ1,未设置正确环境变量或未安装好FI客户端(参考上面1~4配置):

14:02:16 [SELECT - 0 row(s), 0.000 secs] [Error Code: 0, SQL State: 38000] ERROR: external table gphdfs protocol command ended with error. 15/01/14 14:02:36 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable (seg5 slice1 linux4390:40005 pid=23929)

Detail:

Exception in thread "main" org.apache.hadoop.security.AccessControlException: SIMPLE authentication is not enabled. Available:[TOKEN, KERBEROS]

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:39)

at sun.reflect.DelegatingConstructorAcc

Command: 'gphdfs://xxx.xxx.xxx.xxx:port/gp/ok.txt'

External table ok, file gphdfs://xxx.xxx.xxx.xxx:port/gp/ok.txt

... 1 statement(s) executed, 0 row(s) affected, exec/fetch time: 0.000/0.000 sec [0 successful, 0 warnings, 1 errors]

FAQ2,GP侧的FI客户端要配置读取keytab的用户和对应文件目录(参考上面第5步),否则:

... Physical database connection acquired for: gp

15:17:56 [SELECT - 0 row(s), 0.000 secs] [Error Code: 0, SQL State: 38000] ERROR: external table gphdfs protocol command ended with error. 15/01/14 15:17:54 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable (seg0 slice1 linux4390:40000 pid=8466)

Detail:

Exception in thread "main" java.io.IOException: Running in secure mode, but config doesn't have a keytab

at org.apache.hadoop.security.SecurityUtil.login(SecurityUtil.java:235)

at org.apache.hadoop.security.SecurityUtil.login(SecurityUtil.java:206)

at com.emc.greenplum.gpdb.hdfsconnector.ConnectorUtil.loginSecureHadoop(ConnectorUtil.java:84)

at com.e

Command: 'gphdfs://xxx.xxx.xxx.xxx:port/gp/ok.txt'

External table ok, file gphdfs://xxx.xxx.xxx.xxx:port/gp/ok.txt

... 1 statement(s) executed, 0 row(s) affected, exec/fetch time: 0.000/0.000 sec [0 successful, 0 warnings, 1 errors]

FAQ3,需要把hadoop节点的krb5.conf拷贝覆盖greenplum操作系统/etc目录下的krb5.conf(参考:6),否则:

... Physical database connection acquired for: gp

15:38:15 [SELECT - 0 row(s), 0.000 secs] [Error Code: 0, SQL State: 38000] ERROR: external table gphdfs protocol command ended with error. Exception in thread "main" java.lang.IllegalArgumentException: Can't get Kerberos realm (seg1 slice1 linux4390:40001 pid=40524)

Detail:

at org.apache.hadoop.security.HadoopKerberosName.setConfiguration(HadoopKerberosName.java:65)

at org.apache.hadoop.security.UserGroupInformation.initialize(UserGroupInformation.java:248)

at org.apache.hadoop.security.UserGroupInformation.ensureInitialized(UserGroupInformation.java:233)

at org.apache.hadoop.security.UserGroupInformation.isAuthenticationMethodEnabled(UserGroupInformation.java:310)

at org.apach

Command: 'gphdfs://xxx.xxx.xxx.xxx:port/gp/ok.txt'

External table ok, file gphdfs://xxx.xxx.xxx.xxx:port/gp/ok.txt

... 1 statement(s) executed, 0 row(s) affected, exec/fetch time: 0.000/0.000 sec [0 successful, 0 warnings, 1 errors]