Integrating Kerberos with Hadoop

(1) Create the directory that holds the keytab files

mkdir /etc/security/keytab/
chown -R root:hadoop /etc/security/keytab/
chmod 770 /etc/security/keytab/

After creating the directory, distribute it to every other node, making sure the owner and permissions are preserved.

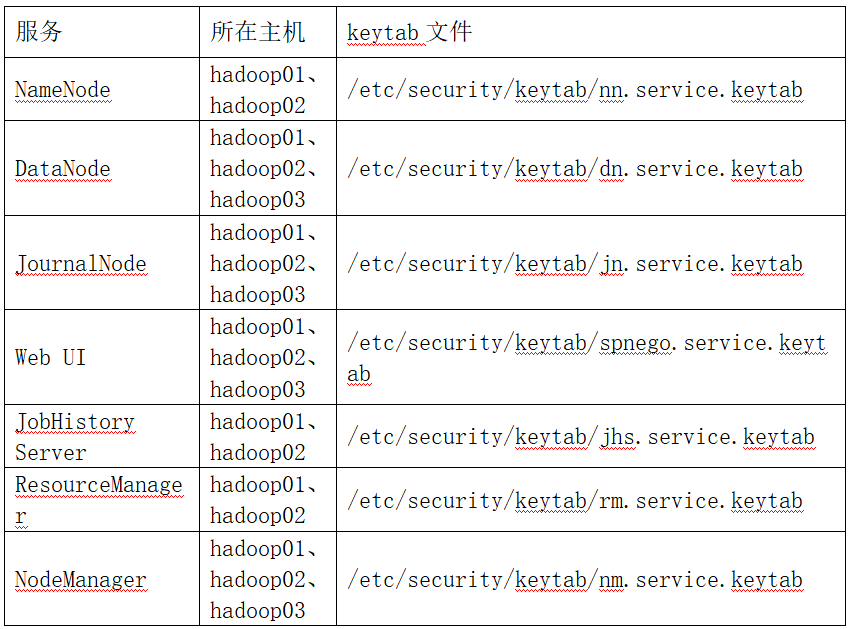

(2) Create a principal and a matching keytab file for each service

Create the principal (run inside the kadmin shell, for example kadmin.local):

addprinc -randkey nn/hadoop01@HADOOP.COM

Create the keytab file for that principal:

xst -k /etc/security/keytab/nn.service.keytab nn/hadoop01@HADOOP.COM

chown -R root:hadoop /etc/security/keytab/
chmod 660 /etc/security/keytab/*
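Repeating the two kadmin commands for every service on every host is easy to get wrong, so a small generator can help. The sketch below only prints the commands and runs nothing. The helper name `gen_keytab_cmds` is hypothetical; the service short names and keytab file names follow the principals used elsewhere in this guide (with HTTP stored in spnego.service.keytab). Which services run on which host depends on your own cluster layout.

```shell
#!/bin/sh
# Hypothetical helper: print the kadmin commands needed for one host.
# REALM and KEYTAB_DIR follow the values used in this guide.
REALM="HADOOP.COM"
KEYTAB_DIR="/etc/security/keytab"

gen_keytab_cmds() {
    host="$1"; shift
    for svc in "$@"; do
        # The HTTP (SPNEGO) principal is kept in spnego.service.keytab.
        if [ "$svc" = "HTTP" ]; then
            file="spnego.service.keytab"
        else
            file="$svc.service.keytab"
        fi
        echo "addprinc -randkey $svc/$host@$REALM"
        echo "xst -k $KEYTAB_DIR/$file $svc/$host@$REALM"
    done
}

# Example: commands for a NameNode host that also serves a web UI.
gen_keytab_cmds hadoop01 nn HTTP
```

Each printed line can be pasted into kadmin.local. Afterwards, `klist -kt <keytab file>` lists the entries stored in a keytab, which is a quick way to confirm the export worked.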

Add the following to core-site.xml:

<!-- Mechanism for mapping Kerberos principals to system users -->
<property>
  <name>hadoop.security.auth_to_local.mechanism</name>
  <value>MIT</value>
</property>

<!-- Concrete rules for mapping Kerberos principals to system users -->
<property>
  <name>hadoop.security.auth_to_local</name>
  <value>
    RULE:[2:$1/$2@$0]([ndj]n\/.*@HADOOP\.COM)s/.*/hdfs/
    RULE:[2:$1/$2@$0]([rn]m\/.*@HADOOP\.COM)s/.*/yarn/
    RULE:[2:$1/$2@$0](jhs\/.*@HADOOP\.COM)s/.*/mapred/
    DEFAULT
  </value>
</property>

<!-- Enable Kerberos authentication for the Hadoop cluster -->
<property>
  <name>hadoop.security.authentication</name>
  <value>kerberos</value>
</property>

<!-- Enable service-level authorization for the Hadoop cluster -->
<property>
  <name>hadoop.security.authorization</name>
  <value>true</value>
</property>

<!-- Set RPC between Hadoop cluster nodes to authentication-only mode -->
<property>
  <name>hadoop.rpc.protection</name>
  <value>authentication</value>
</property>
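To see what the three RULE lines do, it can help to mirror their effect in a few lines of shell. This is only an illustration, not how Hadoop evaluates the rules internally: the function name `map_principal` is hypothetical, and the fallback branch simplifies DEFAULT to "take the first component" without checking the realm.

```shell
#!/bin/sh
# Illustrative mirror of the auth_to_local rules above for principals
# of the form service/host@HADOOP.COM. Not Hadoop's own implementation.
map_principal() {
    case "$1" in
        [ndj]n/*@HADOOP.COM) echo hdfs ;;    # nn/, dn/, jn/  -> hdfs
        [rn]m/*@HADOOP.COM)  echo yarn ;;    # rm/, nm/       -> yarn
        jhs/*@HADOOP.COM)    echo mapred ;;  # jhs/           -> mapred
        # Simplified stand-in for DEFAULT: keep only the first component.
        *) echo "${1%%[/@]*}" ;;
    esac
}

for p in nn/hadoop01@HADOOP.COM nm/hadoop02@HADOOP.COM jhs/hadoop01@HADOOP.COM; do
    echo "$p -> $(map_principal "$p")"
done
```

On a configured node, `hadoop kerbname <principal>` applies the real rules from core-site.xml and prints the resulting user name, so it is the authoritative check.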

Add the following to hdfs-site.xml:

<property>

<name>dfs.datanode.data.dir.perm</name>

<value>750</value>

</property>

<!-- Configure the NameNode web UI to use the HTTPS protocol -->

<property>

<name>dfs.http.policy</name>

<value>HTTP_AND_HTTPS</value>

</property>

<property>

<name>dfs.encrypt.data.transfer</name>

<value>false</value>

</property>

<property>

<name>dfs.block.access.token.enable</name>

<value>true</value>

</property>

<property>

<name>dfs.namenode.kerberos.principal</name>

<value>nn/_HOST@HADOOP.COM</value>

</property>

<property>

<name>dfs.namenode.keytab.file</name>

<value>/etc/security/keytab/nn.service.keytab</value>

</property>

<property>

<name>dfs.namenode.kerberos.internal.spnego.principal</name>

<value>${dfs.web.authentication.kerberos.principal}</value>

</property>

<property>

<name>dfs.journalnode.kerberos.principal</name>

<value>jn/_HOST@HADOOP.COM</value>

</property>

<property>

<name>dfs.journalnode.keytab.file</name>

<value>/etc/security/keytab/jn.service.keytab</value>

</property>

<property>

<name>dfs.journalnode.kerberos.internal.spnego.principal</name>

<value>${dfs.web.authentication.kerberos.principal}</value>

</property>

<property>

<name>dfs.datanode.kerberos.principal</name>

<value>dn/_HOST@HADOOP.COM</value>

</property>

<property>

<name>dfs.datanode.keytab.file</name>

<value>/etc/security/keytab/dn.service.keytab</value>

</property>

<property>

<name>dfs.web.authentication.kerberos.principal</name>

<value>HTTP/_HOST@HADOOP.COM</value>

</property>

<property>

<name>dfs.web.authentication.kerberos.keytab</name>

<value>/etc/security/keytab/spnego.service.keytab</value>

</property>

<property>

<name>dfs.data.transfer.protection</name>

<value>authentication</value>

</property>

Add the following to yarn-site.xml:

<!-- Have the NodeManager manage containers with LinuxContainerExecutor -->
<property>
  <name>yarn.nodemanager.container-executor.class</name>
  <value>org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor</value>
</property>

<!-- Group of the user that starts the NodeManager -->
<property>
  <name>yarn.nodemanager.linux-container-executor.group</name>
  <value>hadoop</value>
</property>

<!-- Path to the LinuxContainerExecutor executable -->
<property>
  <name>yarn.nodemanager.linux-container-executor.path</name>
  <value>/opt/software/hadoop-3.2.1/bin/container-executor</value>
</property>

<!-- Kerberos principal of the ResourceManager service -->
<property>
  <name>yarn.resourcemanager.principal</name>
  <value>rm/_HOST@HADOOP.COM</value>
</property>

<!-- Kerberos keytab file of the ResourceManager service -->
<property>
  <name>yarn.resourcemanager.keytab</name>
  <value>/etc/security/keytab/rm.service.keytab</value>
</property>

<!-- Kerberos principal of the NodeManager service -->
<property>
  <name>yarn.nodemanager.principal</name>
  <value>nm/_HOST@HADOOP.COM</value>
</property>

<!-- Kerberos keytab file of the NodeManager service -->
<property>
  <name>yarn.nodemanager.keytab</name>
  <value>/etc/security/keytab/nm.service.keytab</value>
</property>

Add the following to mapred-site.xml:

<!-- Kerberos keytab file of the JobHistory server -->
<property>
  <name>mapreduce.jobhistory.keytab</name>
  <value>/etc/security/keytab/jhs.service.keytab</value>
</property>

<!-- Kerberos principal of the JobHistory server -->
<property>
  <name>mapreduce.jobhistory.principal</name>
  <value>jhs/_HOST@HADOOP.COM</value>
</property>
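The principals in these files use the `_HOST` placeholder, which each daemon replaces with its own fully qualified host name at startup, so the same configuration file works on every node. The sketch below imitates that substitution to show which principal a given host ends up using. The function name `expand_principal` is hypothetical, and lower-casing the host name is included on the assumption that it matches Hadoop's behaviour.

```shell
#!/bin/sh
# Illustrative only: show the principal a daemon would use on a host.
# $1 = principal pattern, $2 = host name (defaults to this machine's FQDN)
expand_principal() {
    pattern="$1"
    host="${2:-$(hostname -f)}"
    # Assumption: the host name is lower-cased before substitution.
    host="$(printf '%s' "$host" | tr 'A-Z' 'a-z')"
    printf '%s\n' "$pattern" | sed "s/_HOST/$host/"
}

expand_principal "nn/_HOST@HADOOP.COM" hadoop01
expand_principal "jhs/_HOST@HADOOP.COM" hadoop01
```

The expanded name has to match a principal stored in the keytab on that host exactly; if the host name reported by the machine differs from the one used with addprinc, the daemon cannot log in.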

Distribute the modified configuration files to the other nodes:

remote_scp.sh hdfs-site.xml /opt/software/hadoop-3.2.1/etc/hadoop
remote_scp.sh mapred-site.xml /opt/software/hadoop-3.2.1/etc/hadoop
remote_scp.sh yarn-site.xml /opt/software/hadoop-3.2.1/etc/hadoop
remote_scp.sh core-site.xml /opt/software/hadoop-3.2.1/etc/hadoop
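The `remote_scp.sh` helper is a site-specific script that is not shown in this guide. For readers without one, a minimal stand-in might look like the sketch below. Everything in it is an assumption: the function name `distribute`, the `HOSTS` list (hadoop03 in particular is made up) and the `DRY_RUN` switch, which defaults to printing the scp commands instead of running them.

```shell
#!/bin/sh
# Hypothetical stand-in for remote_scp.sh: copy one file into the same
# directory on every other node. HOSTS is an assumed host list.
HOSTS="${HOSTS:-hadoop02 hadoop03}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the scp commands

distribute() {
    src="$1"
    dest_dir="$2"
    for h in $HOSTS; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "scp $src $h:$dest_dir/"
        else
            scp "$src" "$h:$dest_dir/" || return 1
        fi
    done
}

distribute core-site.xml /opt/software/hadoop-3.2.1/etc/hadoop
```

Setting `DRY_RUN=0` performs the copies; it assumes password-less SSH between the nodes.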

[hdfs@hadoop01 hadoop]# mkdir -p /opt/security/kerberos_https
[hdfs@hadoop01 hadoop]# cd /opt/security/kerberos_https

openssl req -new -x509 -keyout bd_ca_key -out bd_ca_cert -days 9999 -subj '/C=CN/ST=beijing/L=beijing/O=test/OU=test/CN=test'

[hdfs@hadoop01 kerberos_https]# openssl req -new -x509 -keyout bd_ca_key -out bd_ca_cert -days 9999 -subj '/C=CN/ST=beijing/L=beijing/O=test/OU=test/CN=test'
Generating a 2048 bit RSA private key
.....................................................................................................+++
.+++
writing new private key to 'bd_ca_key'
Enter PEM pass phrase:
Verifying - Enter PEM pass phrase:
-----
[hdfs@hadoop01 kerberos_https]# # (Enter 123456 as the pass phrase and its confirmation. On success the command produces two files, bd_ca_key and bd_ca_cert.)
[hdfs@hadoop01 kerberos_https]# ll
total 8
-rw-r--r-- 1 root root 1294 Mar 27 19:36 bd_ca_cert
-rw-r--r-- 1 root root 1834 Mar 27 19:36 bd_ca_key

scp -r /opt/security/kerberos_https root@hadoop02:/opt/security/

cd /opt/security/kerberos_https

# Enter 123456 wherever a password is requested (chosen for convenience; use your own value if you have password requirements)
# 1 Enter 123456 as the password and its confirmation; on success this command produces the keystore file
keytool -keystore keystore -alias localhost -validity 9999 -genkey -keyalg RSA -keysize 2048 -dname "CN=test, OU=test, O=test, L=beijing, ST=beijing, C=CN"
# 2 Enter 123456 as the password and its confirmation, and answer yes when asked whether to trust the certificate; on success this command produces the truststore file
keytool -keystore truststore -alias CARoot -import -file bd_ca_cert
# 3 Enter the password 123456; on success this command produces the cert file
keytool -certreq -alias localhost -keystore keystore -file cert
# 4 On success this command produces the cert_signed file
openssl x509 -req -CA bd_ca_cert -CAkey bd_ca_key -in cert -out cert_signed -days 9999 -CAcreateserial -passin pass:123456
# 5 Enter the password 123456 and answer yes when asked whether to trust the certificate; on success this command updates the keystore file
keytool -keystore keystore -alias CARoot -import -file bd_ca_cert
# 6 Enter the password 123456
keytool -keystore keystore -alias localhost -import -file cert_signed

The directory then contains:

-rw-r--r-- 1 root root 1294 Mar 27 19:36 bd_ca_cert
-rw-r--r-- 1 root root 17 Mar 27 19:43 bd_ca_cert.srl
-rw-r--r-- 1 root root 1834 Mar 27 19:36 bd_ca_key
-rw-r--r-- 1 root root 1081 Mar 27 19:43 cert
-rw-r--r-- 1 root root 1176 Mar 27 19:43 cert_signed
-rw-r--r-- 1 root root 4055 Mar 27 19:43 keystore
-rw-r--r-- 1 root root 978 Mar 27 19:42 truststore

Configure ssl-server.xml as follows:

<configuration>

<property>
  <name>ssl.server.truststore.location</name>
  <value>/opt/security/kerberos_https/truststore</value>
  <description>Truststore to be used by NN and DN. Must be specified.</description>
</property>

<property>
  <name>ssl.server.truststore.password</name>
  <value>123456</value>
  <description>Optional. Default value is "".</description>
</property>

<property>
  <name>ssl.server.truststore.type</name>
  <value>jks</value>
  <description>Optional. The keystore file format, default value is "jks".</description>
</property>

<property>
  <name>ssl.server.truststore.reload.interval</name>
  <value>10000</value>
  <description>Truststore reload check interval, in milliseconds. Default value is 10000 (10 seconds).</description>
</property>

<property>
  <name>ssl.server.keystore.location</name>
  <value>/opt/security/kerberos_https/keystore</value>
  <description>Keystore to be used by NN and DN. Must be specified.</description>
</property>

<property>
  <name>ssl.server.keystore.password</name>
  <value>123456</value>
  <description>Must be specified.</description>
</property>

<property>
  <name>ssl.server.keystore.keypassword</name>
  <value>123456</value>
  <description>Must be specified.</description>
</property>

<property>
  <name>ssl.server.keystore.type</name>
  <value>jks</value>
  <description>Optional. The keystore file format, default value is "jks".</description>
</property>

<property>
  <name>ssl.server.exclude.cipher.list</name>
  <value>TLS_ECDHE_RSA_WITH_RC4_128_SHA,SSL_DHE_RSA_EXPORT_WITH_DES40_CBC_SHA,
  SSL_RSA_WITH_DES_CBC_SHA,SSL_DHE_RSA_WITH_DES_CBC_SHA,
  SSL_RSA_EXPORT_WITH_RC4_40_MD5,SSL_RSA_EXPORT_WITH_DES40_CBC_SHA,
  SSL_RSA_WITH_RC4_128_MD5</value>
  <description>Optional. The weak security cipher suites that you want excluded from SSL communication.</description>
</property>

</configuration>

Configure ssl-client.xml as follows:

<configuration>

<property>
  <name>ssl.client.truststore.location</name>
  <value>/opt/security/kerberos_https/truststore</value>
  <description>Truststore to be used by clients like distcp. Must be specified.</description>
</property>

<property>
  <name>ssl.client.truststore.password</name>
  <value>123456</value>
  <description>Optional. Default value is "".</description>
</property>

<property>
  <name>ssl.client.truststore.type</name>
  <value>jks</value>
  <description>Optional. The keystore file format, default value is "jks".</description>
</property>

<property>
  <name>ssl.client.truststore.reload.interval</name>
  <value>10000</value>
  <description>Truststore reload check interval, in milliseconds. Default value is 10000 (10 seconds).</description>
</property>

<property>
  <name>ssl.client.keystore.location</name>
  <value>/opt/security/kerberos_https/keystore</value>
  <description>Keystore to be used by clients like distcp. Must be specified.</description>
</property>

<property>
  <name>ssl.client.keystore.password</name>
  <value>123456</value>
  <description>Optional. Default value is "".</description>
</property>

<property>
  <name>ssl.client.keystore.keypassword</name>
  <value>123456</value>
  <description>Optional. Default value is "".</description>
</property>

<property>
  <name>ssl.client.keystore.type</name>
  <value>jks</value>
  <description>Optional. The keystore file format, default value is "jks".</description>
</property>

</configuration>

(7) Enable HTTPS

Add the following configuration to hdfs-site.xml:

<property>
  <name>dfs.http.policy</name>
  <value>HTTPS_ONLY</value>
</property>

All enabled web pages then use HTTPS; the details are in ssl server and c

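The container-executor binary must be owned by root with group hadoop and have mode 6050. Run chown first, because changing the owner of an executable clears its setuid and setgid bits:

chown root:hadoop /opt/software/hadoop-3.2.1/bin/container-executor
chmod 6050 /opt/software/hadoop-3.2.1/bin/container-executor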
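Mode 6050 is easier to read digit by digit: the leading 6 is setuid (4) plus setgid (2), the owner has no permission bits, the group has read and execute (5), and others have nothing. A quick check after setting it can catch a silently dropped setuid bit. The helper name `check_mode` below is hypothetical, and the sketch assumes GNU `stat` as found on typical Linux distributions.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if a file has the expected octal mode.
# Assumes GNU stat (the -c option).
check_mode() {
    actual="$(stat -c '%a' "$1")" || return 2
    [ "$actual" = "$2" ]
}

# Usage on a cluster node (not run here):
#   check_mode /opt/software/hadoop-3.2.1/bin/container-executor 6050 && echo "mode ok"
#   check_mode /opt/software/hadoop-3.2.1/etc/hadoop/container-executor.cfg 400 && echo "cfg ok"
```

If the check for 6050 fails right after a chown, the special bits were most likely cleared and chmod needs to be run again.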

[root@hadoop01 ~]# chown root:hadoop /opt/software/hadoop-3.2.1/etc/hadoop/container-executor.cfg
[root@hadoop01 ~]# chown root:hadoop /opt/software/hadoop-3.2.1/etc/hadoop/
[root@hadoop01 ~]# chown root:hadoop /opt/software/hadoop-3.2.1/etc/
[root@hadoop01 ~]# chown root:hadoop /opt/software/hadoop-3.2.1
[root@hadoop01 ~]# chown root:hadoop /opt/software/
[root@hadoop01 ~]# chmod 400 /opt/software/hadoop-3.2.1/etc/hadoop/container-executor.cfg

[root@hadoop01 ~]# vim $HADOOP_HOME/etc/hadoop/container-executor.cfg

Set its content to:

yarn.nodemanager.linux-container-executor.group=hadoop
banned.users=hdfs,yarn,mapred
min.user.id=1000
allowed.system.users=
feature.tc.enabled=false

[root@hadoop01 ~]# vim $HADOOP_HOME/etc/hadoop/yarn-site.xml

Add the following:

<!-- Have the NodeManager manage containers with LinuxContainerExecutor -->
<property>
  <name>yarn.nodemanager.container-executor.class</name>
  <value>org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor</value>
</property>

<!-- Group of the user that starts the NodeManager -->
<property>
  <name>yarn.nodemanager.linux-container-executor.group</name>
  <value>hadoop</value>
</property>

<!-- Path to the LinuxContainerExecutor executable -->
<property>
  <name>yarn.nodemanager.linux-container-executor.path</name>
  <value>/opt/software/hadoop-3.2.1/bin/container-executor</value>
</property>

[root@hadoop01 ~]# remote_scp.sh $HADOOP_HOME/etc/hadoop/container-executor.cfg $HADOOP_HOME/etc/hadoop/
[root@hadoop01 ~]# remote_scp.sh $HADOOP_HOME/etc/hadoop/yarn-site.xml $HADOOP_HOME/etc/hadoop

HADOOP_LOG_DIR: this variable is set in hadoop-env.sh and defaults to ${HADOOP_HOME}/logs.

remote_op.sh "chown hdfs:hadoop /opt/software/hadoop-3.2.1/logs/"

remote_op.sh "chmod 775 /opt/software/hadoop-3.2.1/logs/"

dfs.namenode.name.dir: this parameter is set in hdfs-site.xml and defaults to file://${hadoop.tmp.dir}/dfs/name.

remote_op.sh "chown -R hdfs:hadoop /data/hadoop/dfs/name/"

remote_op.sh "chmod 700 /data/hadoop/dfs/name/"

dfs.datanode.data.dir: this parameter is set in hdfs-site.xml and defaults to file://${hadoop.tmp.dir}/dfs/data.

remote_op.sh "chown -R hdfs:hadoop /data/hadoop/dfs/data"

remote_op.sh "chmod 700 /data/hadoop/dfs/data/"

yarn.nodemanager.local-dirs: this parameter is set in yarn-site.xml and defaults to ${hadoop.tmp.dir}/nm-local-dir.

remote_op.sh "chown -R yarn:hadoop /data/hadoop/tmp/nm-local-dir/"

remote_op.sh "chmod -R 775 /data/hadoop/tmp/nm-local-dir/"

yarn.nodemanager.log-dirs: this parameter is set in yarn-site.xml and defaults to $HADOOP_LOG_DIR/userlogs.

remote_op.sh "chown yarn:hadoop /opt/software/hadoop-3.2.1/logs/userlogs/"
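The local directory requirements above are easier to review as one table of path, owner and mode. The sketch below keeps that table in a variable and prints the matching commands for inspection. The names `LOCAL_DIRS` and `print_perm_cmds` are hypothetical, the paths are the ones used in this guide, and the printed commands are deliberately non-recursive; add -R where the steps above use it.

```shell
#!/bin/sh
# Hypothetical summary table: directory|owner:group|mode
LOCAL_DIRS='
/opt/software/hadoop-3.2.1/logs|hdfs:hadoop|775
/data/hadoop/dfs/name|hdfs:hadoop|700
/data/hadoop/dfs/data|hdfs:hadoop|700
/data/hadoop/tmp/nm-local-dir|yarn:hadoop|775
'

# Print (do not run) one chown and one chmod per table row.
print_perm_cmds() {
    echo "$LOCAL_DIRS" | while IFS='|' read -r dir owner mode; do
        [ -n "$dir" ] || continue   # skip the blank first and last lines
        echo "chown $owner $dir"
        echo "chmod $mode $dir"
    done
}

print_perm_cmds
```

Keeping the expected state in one place makes it simpler to spot a mismatch, such as a mode passed to chown by mistake.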

[root@hadoop01 ~]# vim $HADOOP_HOME/sbin/start-dfs.sh

Add the following at the top:

HDFS_DATANODE_USER=hdfs
HDFS_NAMENODE_USER=hdfs
HDFS_SECONDARYNAMENODE_USER=hdfs

Then run the script as root to start HDFS.

Change the owner and permissions of the HDFS paths /, /tmp and /user:

hadoop fs -chown hdfs:hadoop / /tmp /user
hadoop fs -chmod 755 /
hadoop fs -chmod 1777 /tmp
hadoop fs -chmod 775 /user

The parameter yarn.nodemanager.remote-app-log-dir is set in yarn-site.xml and defaults to /tmp/logs:

hadoop fs -chown yarn:hadoop /tmp/logs
hadoop fs -chmod 1777 /tmp/logs

The parameter mapreduce.jobhistory.intermediate-done-dir is set in mapred-site.xml and defaults to /tmp/hadoop-yarn/staging/history/done_intermediate. Every parent directory of this path (except /tmp) must be owned by mapred, with group hadoop and mode 770:

hadoop fs -chown -R mapred:hadoop /tmp/hadoop-yarn/staging/history/done_intermediate
hadoop fs -chmod -R 1777 /tmp/hadoop-yarn/staging/history/done_intermediate
hadoop fs -chown mapred:hadoop /tmp/hadoop-yarn/staging/history/
hadoop fs -chown mapred:hadoop /tmp/hadoop-yarn/staging/
hadoop fs -chown mapred:hadoop /tmp/hadoop-yarn/
hadoop fs -chmod 770 /tmp/hadoop-yarn/staging/history/
hadoop fs -chmod 770 /tmp/hadoop-yarn/staging/
hadoop fs -chmod 770 /tmp/hadoop-yarn/

The parameter mapreduce.jobhistory.done-dir is set in mapred-site.xml and defaults to /tmp/hadoop-yarn/staging/history/done. Every parent directory of this path (except /tmp) must likewise be owned by mapred, with group hadoop and mode 770:

hadoop fs -chown -R mapred:hadoop /tmp/hadoop-yarn/staging/history/done
hadoop fs -chmod -R 750 /tmp/hadoop-yarn/staging/history/done
hadoop fs -chown mapred:hadoop /tmp/hadoop-yarn/staging/history/
hadoop fs -chown mapred:hadoop /tmp/hadoop-yarn/staging/
hadoop fs -chown mapred:hadoop /tmp/hadoop-yarn/
hadoop fs -chmod 770 /tmp/hadoop-yarn/staging/history/
hadoop fs -chmod 770 /tmp/hadoop-yarn/staging/
hadoop fs -chmod 770 /tmp/hadoop-yarn/
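The rule that every parent directory except /tmp needs the mapred owner and mode 770 can be derived from the path itself, which is useful when the history directories are configured somewhere other than the defaults. The sketch below walks up a path and prints the commands for each parent. The function names are hypothetical and nothing is executed against HDFS.

```shell
#!/bin/sh
# Print every parent directory of a path, stopping before /tmp and /.
parents_below_tmp() {
    p="$(dirname "$1")"
    while [ "$p" != "/" ] && [ "$p" != "/tmp" ] && [ "$p" != "." ]; do
        echo "$p"
        p="$(dirname "$p")"
    done
}

# Print (do not run) the chown and chmod commands for those parents.
print_parent_cmds() {
    for d in $(parents_below_tmp "$1"); do
        echo "hadoop fs -chown mapred:hadoop $d"
        echo "hadoop fs -chmod 770 $d"
    done
}

print_parent_cmds /tmp/hadoop-yarn/staging/history/done
```

For the default done-dir this yields the same three directories handled by hand above: /tmp/hadoop-yarn/staging/history, /tmp/hadoop-yarn/staging and /tmp/hadoop-yarn.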

[root@hadoop02 ~]# vim $HADOOP_HOME/sbin/start-yarn.sh

Add the following at the top:

YARN_RESOURCEMANAGER_USER=yarn
YARN_NODEMANAGER_USER=yarn