Deploying Trino with Helm and Connecting It to Hive (Part 1)

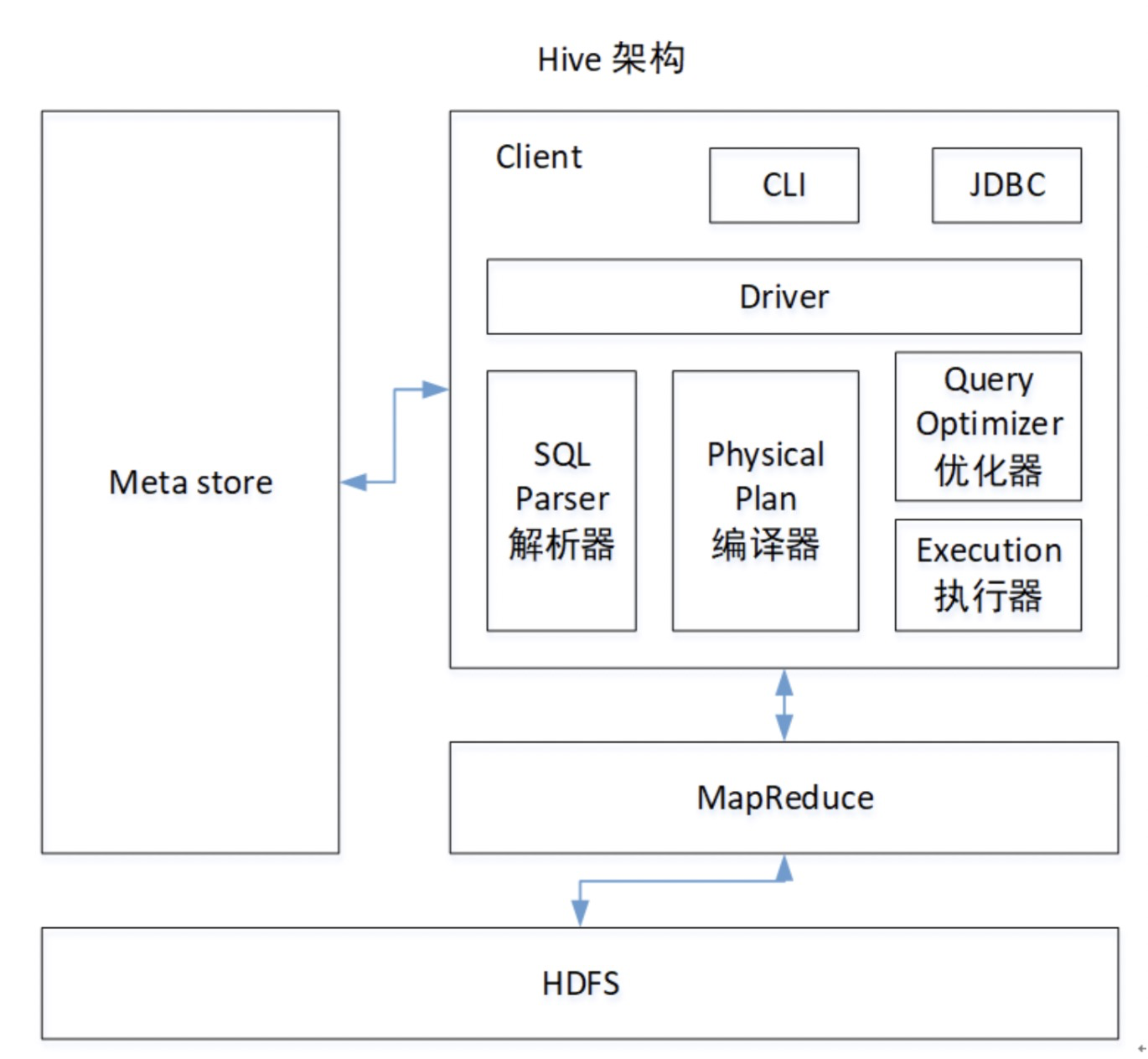

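Prerequisite: this article assumes Hive is already installed. It covers deploying Trino in containers and then connecting it to the existing Hive deployment.

Trino Helm chart: https://artifacthub.io/packages/helm/trino/trino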

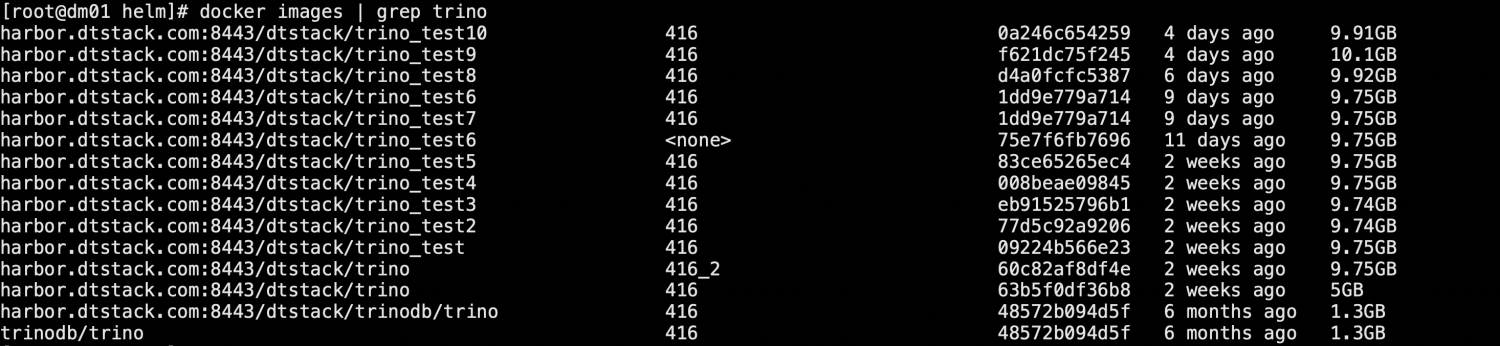

Build the Trino image

Preparation: download trino-server-416.tar.gz, zulu17.46.19-ca-jdk17.0.9-linux_x64.tar.gz, and trino-cli-416-executable.jar.
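The Dockerfile in the next step pulls all three files from the build context with ADD and COPY, so a missing archive only shows up partway through the build. A minimal sketch of a pre-build check follows; `missing_artifacts` is a hypothetical helper name, and the check assumes the files sit in the directory you build from.

```shell
#!/usr/bin/env bash
# Sketch: confirm the build context holds every archive the Dockerfile ADDs or COPYs.
# missing_artifacts is a hypothetical helper name, not part of Docker.

missing_artifacts() {
  # $1: build-context directory; prints each required file that is absent.
  local dir="$1" f
  for f in \
    trino-server-416.tar.gz \
    zulu17.46.19-ca-jdk17.0.9-linux_x64.tar.gz \
    trino-cli-416-executable.jar
  do
    [ -f "$dir/$f" ] || printf '%s\n' "$f"
  done
}

# Check the current directory, which is assumed to be the build context.
missing="$(missing_artifacts .)"
if [ -n "$missing" ]; then
  printf 'Missing from build context:\n%s\n' "$missing" >&2
else
  echo "All build artifacts are present."
fi
```

Run it from the directory that holds the Dockerfile before calling docker build.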

1. Write the Dockerfile

In later iterations, the FROM line can be changed to harbor.dtstack.com:8443/dtstack/trino:416 to speed up the build.

    FROM registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/centos:7.7.1908
    #FROM harbor.dtstack.com:8443/dtstack/trino:416

    # Set the time zone to Asia/Shanghai
    RUN rm -f /etc/localtime && ln -sv /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo "Asia/Shanghai" > /etc/timezone

    # Set a UTF-8 locale. The locale name is missing from the original line
    # ("RUN export.UTF-8"); zh_CN.UTF-8 is an assumption. ENV is used because
    # a variable exported in a RUN step does not persist in the image.
    ENV LANG=zh_CN.UTF-8

    # Create the user and group; the IDs must match securityContext
    # (runAsUser/runAsGroup: 1000) in values.yaml
    RUN groupadd --system --gid=1000 admin && useradd --system --home-dir /home/admin --uid=1000 --gid=admin admin

    # Install sudo
    RUN yum -y install sudo && chmod 640 /etc/sudoers

    # Grant admin passwordless sudo
    RUN echo "admin ALL=(ALL) NOPASSWD: ALL" >> /etc/sudoers

    RUN yum -y install net-tools telnet wget nc

    RUN mkdir /opt/apache/

    # Add and configure the JDK
    ADD zulu17.46.19-ca-jdk17.0.9-linux_x64.tar.gz /opt/apache/
    ENV JAVA_HOME=/opt/apache/zulu17.46.19-ca-jdk17.0.9-linux_x64
    ENV PATH=$JAVA_HOME/bin:$PATH

    # Add and configure the Trino server
    ENV TRINO_VERSION=416
    ADD trino-server-${TRINO_VERSION}.tar.gz /opt/apache/
    ENV TRINO_HOME=/opt/apache/trino-server-416
    ENV PATH=$TRINO_HOME/bin:$PATH

    # Create the config directory and the catalog directory for data sources
    RUN mkdir -p ${TRINO_HOME}/etc/catalog

    # Create directories for the other configuration files
    RUN mkdir -p /opt/apache/trino-hiveconf
    RUN mkdir -p /opt/apache/trino-resource
    RUN mkdir -p /opt/apache/trino-ldap

    # Add and configure the Trino CLI
    COPY trino-cli-416-executable.jar $TRINO_HOME/bin/trino-cli
    RUN chmod +x $TRINO_HOME/bin/trino-cli

    RUN chown -R admin:admin /opt/apache/

    WORKDIR $TRINO_HOME

    ENTRYPOINT $TRINO_HOME/bin/launcher run --verbose

2. Build the image

    docker build -t harbor.dtstack.com:8443/dtstack/trino_test5:416 .

3. Push to the private registry

    docker push harbor.dtstack.com:8443/dtstack/trino_test5:416

Set up the Helm chart

1. Add the Trino repo

    helm repo add trino https://trinodb.github.io/charts/

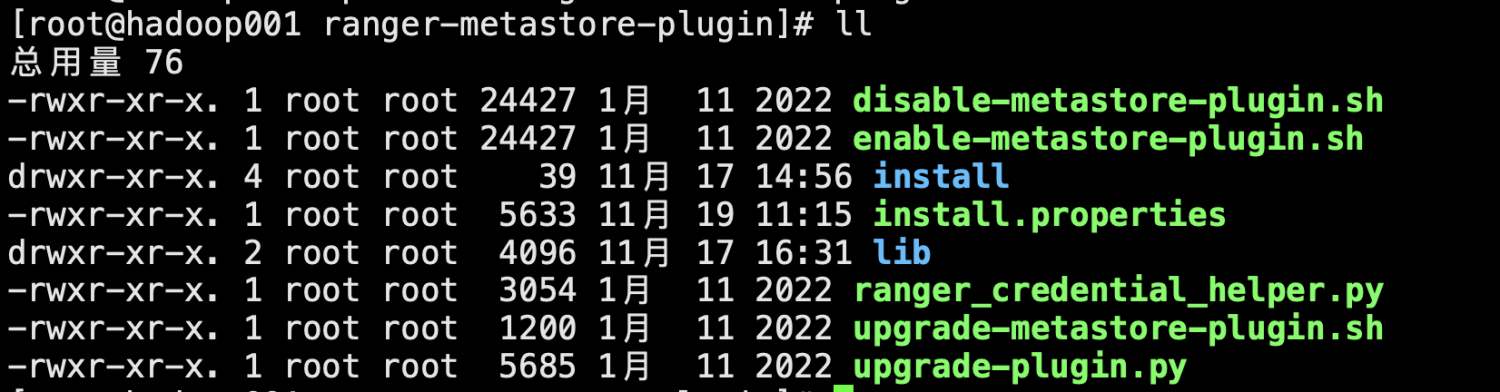

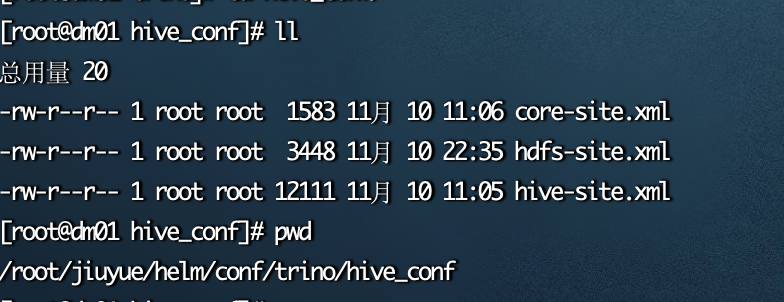

2. Create a ConfigMap from the cluster's Hive configuration files

    kubectl -n trino-test create cm hive-conf --from-file=hive_conf/
    kubectl get cm -n trino-test
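The Deployment templates shown later mount three keys from hive-conf: hdfs-site.xml, core-site.xml, and hive-site.xml. If one of them is missing from hive_conf/, the pods will likely fail to mount the volume. The sketch below checks for the files first and then creates or updates the ConfigMap so the command can be re-run. `apply_hive_conf` is a hypothetical helper name, and piping `--dry-run=client -o yaml` into `kubectl apply` is a common idiom swapped in here, not the command used above.

```shell
#!/usr/bin/env bash
# Sketch: create or update the hive-conf ConfigMap only if the directory holds
# the three files that the Deployment templates mount.
# apply_hive_conf is a hypothetical helper name.

apply_hive_conf() {
  # $1: directory with the Hive client configs; $2: namespace.
  local dir="$1" ns="$2" f
  for f in hdfs-site.xml core-site.xml hive-site.xml; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing $dir/$f" >&2
      return 1
    fi
  done
  # Render the ConfigMap client-side, then apply it, so that re-running
  # updates the object instead of failing because it already exists.
  kubectl -n "$ns" create configmap hive-conf --from-file="$dir" \
    --dry-run=client -o yaml | kubectl -n "$ns" apply -f -
}

# Example call (assumes kubectl already points at the target cluster):
# apply_hive_conf hive_conf trino-test
```

The function returns before touching the cluster when a file is absent, so it is safe to call repeatedly while assembling hive_conf/.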

3. Download the Trino Helm chart and edit values.yaml

    # Default values for trino.
    # This is a YAML-formatted file.
    # Declare variables to be passed into your templates.

    ## E.g.
    # ## imagePullSecrets:
    # ##   - myRegistryKeySecretName
    # ##
    # imagePullSecrets: []
    # storageClass: ""
    #
    image:
      repository: harbor.dtstack.com:8443/dtstack/trino_test5
      pullPolicy: IfNotPresent
      # Overrides the image tag whose default is the chart version.
      tag: 416
      #pullSecrets: []
      #debug: false

    imagePullSecrets:
      - name: registry-credentials

    server:
      workers: 2
      node:
        environment: production
        dataDir: /opt/apache/trino-server-416/data
        pluginDir: /opt/apache/trino-server-416/plugin
      log:
        trino:
          level: INFO
      config:
        path: /opt/apache/trino-server-416/etc
        http:
          port: 8080
        https:
          enabled: false
          port: 8443
          keystore:
            path: ""
        # Trino supports multiple authentication types: PASSWORD, CERTIFICATE, OAUTH2, JWT, KERBEROS
        # For more info: https://trino.io/docs/current/security/authentication-types.html
        authenticationType: ""
        query:
          maxMemory: "1GB"
          maxMemoryPerNode: "512MB"
        memory:
          heapHeadroomPerNode: "512MB"
      exchangeManager:
        name: "filesystem"
        baseDir: "/tmp/trino-local-file-system-exchange-manager"
      workerExtraConfig: ""
      coordinatorExtraConfig: ""
      autoscaling:
        enabled: false
        maxReplicas: 5
        targetCPUUtilizationPercentage: 50

    accessControl: {}
      # type: configmap
      # refreshPeriod: 60s
      # # Rules file is mounted to /etc/trino/access-control
      # configFile: "rules.json"
      # rules:
      #   rules.json: |-
      #     {
      #       "catalogs": [
      #         {
      #           "user": "admin",
      #           "catalog": "(mysql|system)",
      #           "allow": "all"
      #         },
      #         {
      #           "group": "finance|human_resources",
      #           "catalog": "postgres",
      #           "allow": true
      #         },
      #         {
      #           "catalog": "hive",
      #           "allow": "all"
      #         },
      #         {
      #           "user": "alice",
      #           "catalog": "postgresql",
      #           "allow": "read-only"
      #         },
      #         {
      #           "catalog": "system",
      #           "allow": "none"
      #         }
      #       ],
      #       "schemas": [
      #         {
      #           "user": "admin",
      #           "schema": ".*",
      #           "owner": true
      #         },
      #         {
      #           "user": "guest",
      #           "owner": false
      #         },
      #         {
      #           "catalog": "default",
      #           "schema": "default",
      #           "owner": true
      #         }
      #       ]
      #     }

    additionalNodeProperties: {}

    additionalConfigProperties: {}

    additionalLogProperties: {}

    additionalExchangeManagerProperties: {}

    eventListenerProperties: {}

    #additionalCatalogs: {}
    additionalCatalogs:
      mysql: |-
        connector.name=mysql
        connection-url=jdbc:mysql://10.68.102.107:3306
        connection-user=root
        connection-password=123456
      hive: |-
        connector.name=hive
        hive.metastore.uri=thrift://hadoop001:9083
        hive.config.resources=/opt/apache/trino-hiveconf/hdfs-site.xml,/opt/apache/trino-hiveconf/core-site.xml

    # Array of EnvVar (https://v1-18.docs.kubernetes.io/docs/reference/generated/kubernetes-api/v1.18/#envvar-v1-core)
    env: []

    initContainers: {}
      # coordinator:
      #   - name: init-coordinator
      #     image: busybox:1.28
      #     imagePullPolicy: IfNotPresent
      #     command: ['sh', '-c', "until nslookup myservice.$(cat /var/run/secrets/kubernetes.io/serviceaccount/namespace).svc.cluster.local; do echo waiting for myservice; sleep 2; done"]
      # worker:
      #   - name: init-worker
      #     image: busybox:1.28
      #     command: ['sh', '-c', 'echo The worker is running! && sleep 3600']

    securityContext:
      runAsUser: 1000
      runAsGroup: 1000

    service:
      type: NodePort
      port: 8080
      nodePort: 31880

    nodeSelector: {}

    tolerations: []

    affinity: {}

    auth: {}
      # Set username and password
      # https://trino.io/docs/current/security/password-file.html#file-format
      # passwordAuth: "username:encrypted-password-with-htpasswd"

    serviceAccount:
      # Specifies whether a service account should be created
      create: false
      # The name of the service account to use.
      # If not set and create is true, a name is generated using the fullname template
      name: ""
      # Annotations to add to the service account
      annotations: {}

    secretMounts: []

    coordinator:
      jvm:
        maxHeapSize: "2G"
        gcMethod:
          type: "UseG1GC"
          g1:
            heapRegionSize: "32M"

      config:
        memory:
          heapHeadroomPerNode: ""
        query:
          maxMemoryPerNode: "512MB"

      additionalJVMConfig: {}

      resources: {}
        # We usually recommend not to specify default resources and to leave this as a conscious
        # choice for the user. This also increases chances charts run on environments with little
        # resources, such as Minikube. If you do want to specify resources, uncomment the following
        # lines, adjust them as necessary, and remove the curly braces after 'resources:'.
        # limits:
        #   cpu: 100m
        #   memory: 128Mi
        # requests:
        #   cpu: 100m
        #   memory: 128Mi

      livenessProbe: {}
        # initialDelaySeconds: 20
        # periodSeconds: 10
        # timeoutSeconds: 5
        # failureThreshold: 6
        # successThreshold: 1
      readinessProbe: {}
        # initialDelaySeconds: 20
        # periodSeconds: 10
        # timeoutSeconds: 5
        # failureThreshold: 6
        # successThreshold: 1

      additionalExposedPorts: {}

    worker:
      jvm:
        maxHeapSize: "2G"
        gcMethod:
          type: "UseG1GC"
          g1:
            heapRegionSize: "32M"

      config:
        memory:
          heapHeadroomPerNode: ""
        query:
          maxMemoryPerNode: "512MB"

      additionalJVMConfig: {}

      resources: {}
        # We usually recommend not to specify default resources and to leave this as a conscious
        # choice for the user. This also increases chances charts run on environments with little
        # resources, such as Minikube. If you do want to specify resources, uncomment the following
        # lines, adjust them as necessary, and remove the curly braces after 'resources:'.
        # limits:
        #   cpu: 100m
        #   memory: 128Mi
        # requests:
        #   cpu: 100m
        #   memory: 128Mi

      livenessProbe: {}
      readinessProbe: {}

      additionalExposedPorts: {}

    #hive:
    #  mountPath: "/opt/apache"
    #volumes:
    #- name: trino-hive
    #  configMap:
    #    name: trino-hive
    #    items:
    ###    - key: key-qijing-file.txt
    #        path: key-qijing-file.txt

4. Mount the new ConfigMap

Edit deployment-coordinator.yaml and deployment-worker.yaml and add the following (mind the indentation):

    volumes:
      - name: hiveconf-volume
        configMap:
          name: hive-conf
          items:
            - key: hdfs-site.xml
              path: hdfs-site.xml
            - key: core-site.xml
              path: core-site.xml
            - key: hive-site.xml
              path: hive-site.xml

    volumeMounts:
      - mountPath: /opt/apache/trino-hiveconf
        name: hiveconf-volume

Complete deployment-coordinator.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: {{ template "trino.coordinator" . }}
      labels:
        app: {{ template "trino.name" . }}
        chart: {{ template "trino.chart" . }}
        release: {{ .Release.Name }}
        heritage: {{ .Release.Service }}
        component: coordinator
    spec:
      selector:
        matchLabels:
          app: {{ template "trino.name" . }}
          release: {{ .Release.Name }}
          component: coordinator
      template:
        metadata:
          labels:
            app: {{ template "trino.name" . }}
            release: {{ .Release.Name }}
            component: coordinator
        spec:
          serviceAccountName: {{ include "trino.serviceAccountName" . }}
          {{- with .Values.securityContext }}
          securityContext:
            runAsUser: {{ .runAsUser }}
            runAsGroup: {{ .runAsGroup }}
          {{- end }}
          volumes:
            - name: hiveconf-volume
              configMap:
                name: hive-conf
                items:
                  - key: hdfs-site.xml
                    path: hdfs-site.xml
                  - key: core-site.xml
                    path: core-site.xml
                  - key: hive-site.xml
                    path: hive-site.xml
            - name: config-volume
              configMap:
                name: {{ template "trino.coordinator" . }}
            - name: catalog-volume
              configMap:
                name: {{ template "trino.catalog" . }}
            {{- if .Values.accessControl }}{{- if eq .Values.accessControl.type "configmap" }}
            - name: access-control-volume
              configMap:
                name: trino-access-control-volume-coordinator
            {{- end }}{{- end }}
            {{- if eq .Values.server.config.authenticationType "PASSWORD" }}
            - name: password-volume
              secret:
                secretName: trino-password-authentication
            {{- end}}
            {{- range .Values.secretMounts }}
            - name: {{ .name }}
              secret:
                secretName: {{ .secretName }}
            {{- end }}
          {{- if .Values.initContainers.coordinator }}
          initContainers:
          {{- tpl (toYaml .Values.initContainers.coordinator) . | nindent 6 }}
          {{- end }}
          imagePullSecrets:
            {{- toYaml .Values.imagePullSecrets | nindent 8 }}
          containers:
            - name: {{ .Chart.Name }}-coordinator
              image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
              imagePullPolicy: {{ .Values.image.pullPolicy }}
              env:
                {{- toYaml .Values.env | nindent 12 }}
              volumeMounts:
                - mountPath: /opt/apache/trino-hiveconf
                  name: hiveconf-volume
                - mountPath: {{ .Values.server.config.path }}
                  name: config-volume
                - mountPath: {{ .Values.server.config.path }}/catalog
                  name: catalog-volume
                {{- if .Values.accessControl }}{{- if eq .Values.accessControl.type "configmap" }}
                - mountPath: {{ .Values.server.config.path }}/access-control
                  name: access-control-volume
                {{- end }}{{- end }}
                {{- range .Values.secretMounts }}
                - name: {{ .name }}
                  mountPath: {{ .path }}
                {{- end }}
                {{- if eq .Values.server.config.authenticationType "PASSWORD" }}
                - mountPath: {{ .Values.server.config.path }}/auth
                  name: password-volume
                {{- end }}
              ports:
                - name: http
                  containerPort: {{ .Values.service.port }}
                  protocol: TCP
              {{- range $key, $value := .Values.coordinator.additionalExposedPorts }}
                - name: {{ $value.name }}
                  containerPort: {{ $value.port }}
                  protocol: {{ $value.protocol }}
              {{- end }}
              livenessProbe:
                httpGet:
                  path: /v1/info
                  port: http
                initialDelaySeconds: {{ .Values.coordinator.livenessProbe.initialDelaySeconds | default 20 }}
                periodSeconds: {{ .Values.coordinator.livenessProbe.periodSeconds | default 10 }}
                timeoutSeconds: {{ .Values.coordinator.livenessProbe.timeoutSeconds | default 5 }}
                failureThreshold: {{ .Values.coordinator.livenessProbe.failureThreshold | default 6 }}
                successThreshold: {{ .Values.coordinator.livenessProbe.successThreshold | default 1 }}
              readinessProbe:
                httpGet:
                  path: /v1/info
                  port: http
                initialDelaySeconds: {{ .Values.coordinator.readinessProbe.initialDelaySeconds | default 20 }}
                periodSeconds: {{ .Values.coordinator.readinessProbe.periodSeconds | default 10 }}
                timeoutSeconds: {{ .Values.coordinator.readinessProbe.timeoutSeconds | default 5 }}
                failureThreshold: {{ .Values.coordinator.readinessProbe.failureThreshold | default 6 }}
                successThreshold: {{ .Values.coordinator.readinessProbe.successThreshold | default 1 }}
              resources:
                {{- toYaml .Values.coordinator.resources | nindent 12 }}
          {{- with .Values.coordinator.nodeSelector }}
          nodeSelector:
            {{- toYaml . | nindent 8 }}
          {{- end }}
          {{- with .Values.coordinator.affinity }}
          affinity:
            {{- toYaml . | nindent 8 }}
          {{- end }}
          {{- with .Values.coordinator.tolerations }}
          tolerations:
            {{- toYaml . | nindent 8 }}
          {{- end }}

Complete deployment-worker.yaml

    {{- if gt (int .Values.server.workers) 0 }}
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: {{ template "trino.worker" . }}
      labels:
        app: {{ template "trino.name" . }}
        chart: {{ template "trino.chart" . }}
        release: {{ .Release.Name }}
        heritage: {{ .Release.Service }}
        component: worker
    spec:
      replicas: {{ .Values.server.workers }}
      selector:
        matchLabels:
          app: {{ template "trino.name" . }}
          release: {{ .Release.Name }}
          component: worker
      template:
        metadata:
          labels:
            app: {{ template "trino.name" . }}
            release: {{ .Release.Name }}
            component: worker
        spec:
          serviceAccountName: {{ include "trino.serviceAccountName" . }}
          volumes:
            - name: hiveconf-volume
              configMap:
                name: hive-conf
                items:
                  - key: hdfs-site.xml
                    path: hdfs-site.xml
                  - key: core-site.xml
                    path: core-site.xml
                  - key: hive-site.xml
                    path: hive-site.xml
            - name: config-volume
              configMap:
                name: {{ template "trino.worker" . }}
            - name: catalog-volume
              configMap:
                name: {{ template "trino.catalog" . }}
          {{- if .Values.initContainers.worker }}
          initContainers:
          {{- tpl (toYaml .Values.initContainers.worker) . | nindent 6 }}
          {{- end }}
          imagePullSecrets:
            {{- toYaml .Values.imagePullSecrets | nindent 8 }}
          containers:
            - name: {{ .Chart.Name }}-worker
              image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
              imagePullPolicy: {{ .Values.image.pullPolicy }}
              env:
                {{- toYaml .Values.env | nindent 12 }}
              volumeMounts:
                - mountPath: /opt/apache/trino-hiveconf
                  name: hiveconf-volume
                - mountPath: {{ .Values.server.config.path }}
                  name: config-volume
                - mountPath: {{ .Values.server.config.path }}/catalog
                  name: catalog-volume
              ports:
                - name: http
                  containerPort: {{ .Values.service.port }}
                  protocol: TCP
              {{- range $key, $value := .Values.worker.additionalExposedPorts }}
                - name: {{ $value.name }}
                  containerPort: {{ $value.port }}
                  protocol: {{ $value.protocol }}
              {{- end }}
              livenessProbe:
                httpGet:
                  path: /v1/info
                  port: http
                initialDelaySeconds: {{ .Values.worker.livenessProbe.initialDelaySeconds | default 20 }}
                periodSeconds: {{ .Values.worker.livenessProbe.periodSeconds | default 10 }}
                timeoutSeconds: {{ .Values.worker.livenessProbe.timeoutSeconds | default 5 }}
                failureThreshold: {{ .Values.worker.livenessProbe.failureThreshold | default 6 }}
                successThreshold: {{ .Values.worker.livenessProbe.successThreshold | default 1 }}
              readinessProbe:
                httpGet:
                  path: /v1/info
                  port: http
                initialDelaySeconds: {{ .Values.worker.readinessProbe.initialDelaySeconds | default 20 }}
                periodSeconds: {{ .Values.worker.readinessProbe.periodSeconds | default 10 }}
                timeoutSeconds: {{ .Values.worker.readinessProbe.timeoutSeconds | default 5 }}
                failureThreshold: {{ .Values.worker.readinessProbe.failureThreshold | default 6 }}
                successThreshold: {{ .Values.worker.readinessProbe.successThreshold | default 1 }}
              resources:
                {{- toYaml .Values.worker.resources | nindent 12 }}
          {{- with .Values.worker.nodeSelector }}
          nodeSelector:
            {{- toYaml . | nindent 8 }}
          {{- end }}
          {{- with .Values.worker.affinity }}
          affinity:
            {{- toYaml . | nindent 8 }}
          {{- end }}
          {{- with .Values.worker.tolerations }}
          tolerations:
            {{- toYaml . | nindent 8 }}
          {{- end }}
    {{- end }}

5. Install

    helm install trino /root/jiuyue/helm/trino/ -n trino-test
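Because the Deployment templates were edited by hand, it can help to render the chart before installing and confirm that the new volume actually appears. The sketch below is one way to do that. `count_hiveconf_refs` is a hypothetical helper, and the chart path is the one used in the install command above. With both the coordinator and worker Deployments rendered, one would expect four references (one volume and one mount in each), though that number is an expectation to verify rather than a guarantee.

```shell
#!/usr/bin/env bash
# Sketch: lint the chart and count references to hiveconf-volume in the
# rendered manifests before running `helm install`.
# count_hiveconf_refs is a hypothetical helper; it reads YAML on stdin.

count_hiveconf_refs() {
  # grep -c exits non-zero when the count is 0, so mask the status.
  grep -c 'name: hiveconf-volume' || true
}

CHART_DIR="${CHART_DIR:-/root/jiuyue/helm/trino/}"

# Only runs when helm is installed and the chart directory exists.
if command -v helm >/dev/null 2>&1 && [ -d "$CHART_DIR" ]; then
  helm lint "$CHART_DIR"
  refs="$(helm template trino "$CHART_DIR" -n trino-test | count_hiveconf_refs)"
  echo "hiveconf-volume references in rendered manifests: $refs"
fi
```

A count of zero would suggest the edits did not land in the templates that Helm renders.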

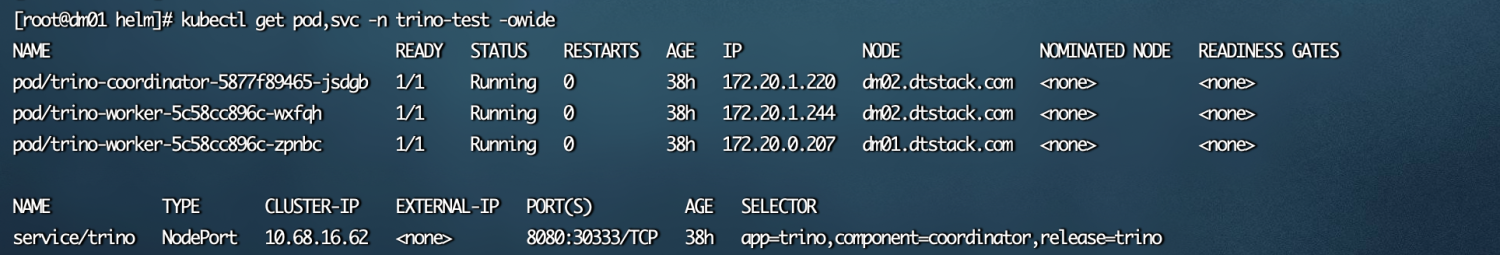

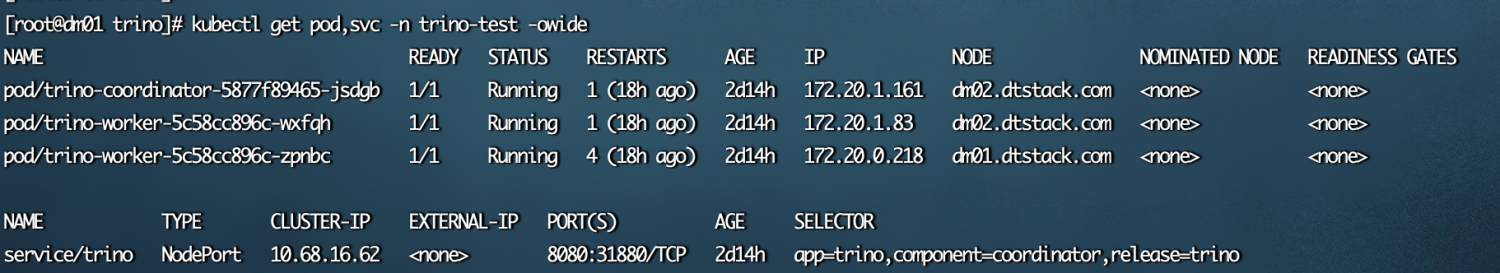

6. Check the created pods

    kubectl get pod,svc -n trino-test -owide

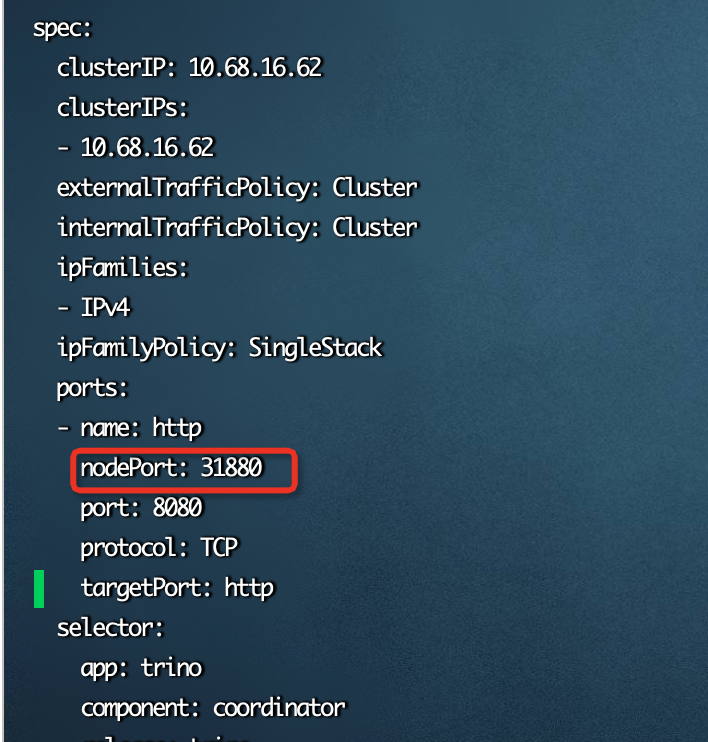

Change the web port

Run the following and set nodePort to 31880:

    kubectl edit service -n trino-test
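If you would rather not edit the Service interactively, the same change can be sketched with `kubectl patch` and a JSON Patch document. `nodeport_patch` is a hypothetical helper. The Service name `trino` and the port index 0 are assumptions, so check them against the `kubectl get svc` output first.

```shell
#!/usr/bin/env bash
# Sketch: set the NodePort non-interactively with a JSON patch.
# nodeport_patch is a hypothetical helper; the Service name "trino" and the
# port index 0 are assumptions to verify with `kubectl get svc -n trino-test`.

nodeport_patch() {
  # $1: desired nodePort; prints a JSON Patch document for the first port.
  printf '[{"op":"replace","path":"/spec/ports/0/nodePort","value":%d}]\n' "$1"
}

SERVICE="${SERVICE:-trino}"

# Only runs when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  kubectl -n trino-test patch service "$SERVICE" --type=json \
    -p "$(nodeport_patch 31880)"
fi
```

The `replace` operation expects the Service to already be of type NodePort, which matches the values.yaml shown earlier.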

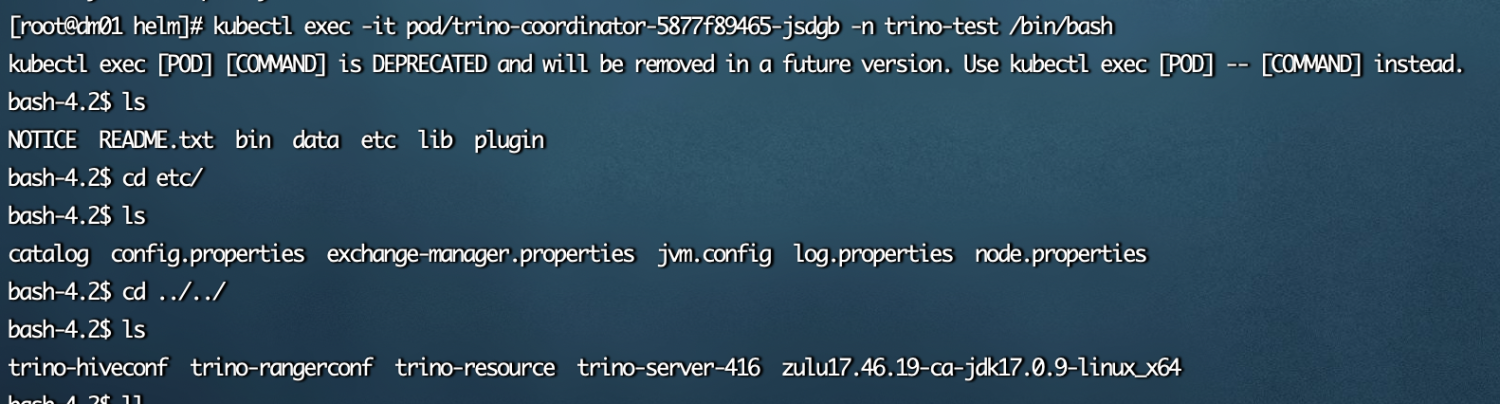
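Once the port is exposed, the /v1/info endpoint that the liveness and readiness probes call can also be queried from outside the cluster. The sketch below assumes the JSON response carries a boolean `starting` field, which is worth confirming against your Trino version. `is_started` and the `TRINO_URL` variable are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: readiness check against the same endpoint the probes use.
# is_started is a hypothetical helper; TRINO_URL is an assumed variable name.

is_started() {
  # Reads the /v1/info JSON body on stdin; succeeds once "starting" is false.
  grep -Eq '"starting"[[:space:]]*:[[:space:]]*false'
}

# Runs only when TRINO_URL is set, e.g. TRINO_URL=http://172.16.121.114:31880
if [ -n "${TRINO_URL:-}" ]; then
  if curl -fsS --max-time 5 "$TRINO_URL/v1/info" | is_started; then
    echo "coordinator is up"
  else
    echo "coordinator not ready (or unreachable)" >&2
  fi
fi
```

Nothing is contacted unless TRINO_URL is set, so the script can be kept next to the chart and run on demand.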

To look inside the pod:

    kubectl exec -it pod/trino-coordinator-5877f89465-jsdgb -n trino-test -- /bin/bash

7. Smoke test

Test the MySQL connection

    ./trino-cli-416-executable.jar --server http://172.16.121.114:31880 --user admin
    trino> show schemas;
           Schema
    --------------------
     dtstack
     information_schema
     metastore
     performance_schema
    (4 rows)

    Query 20231109_120504_00000_px53j, FINISHED, 3 nodes
    Splits: 36 total, 36 done (100.00%)
    1.51 [4 rows, 72B] [2 rows/s, 48B/s]

    trino> use metastore;
    trino:metastore> create schema trino_test;
    CREATE SCHEMA
    trino:metastore> create table trino_test.user(id int not null, username varchar(32) not null, password varchar(32) not null);
    CREATE TABLE
    trino:metastore> insert into trino_test.user values(1,'user1','pwd1');
    INSERT: 1 row

    Query 20231109_122925_00023_px53j, FINISHED, 3 nodes
    Splits: 52 total, 52 done (100.00%)
    1.70 [0 rows, 0B] [0 rows/s, 0B/s]

    trino:metastore> insert into trino_test.user values(2,'user2','pwd2');
    INSERT: 1 row

    Query 20231109_122938_00024_px53j, FINISHED, 3 nodes
    Splits: 52 total, 52 done (100.00%)
    1.01 [0 rows, 0B] [0 rows/s, 0B/s]

    trino:metastore> select * from trino_test.user;
     id | username | password
    ----+----------+----------
      1 | user1    | pwd1
      2 | user2    | pwd2
    (2 rows)

    Query 20231109_122944_00025_px53j, FINISHED, 1 node
    Splits: 1 total, 1 done (100.00%)
    0.29 [2 rows, 0B] [6 rows/s, 0B/s]

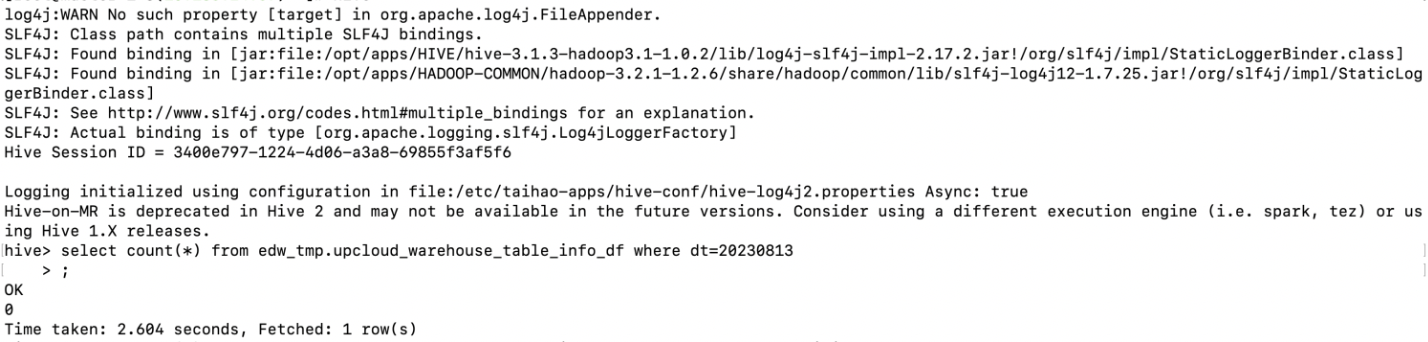

Test the Hive connection

    [root@dm01 trino]# ./trino-cli-416-executable.jar --server http://172.16.121.114:31880 --user admin --catalog=hive
    trino> use test;
    USE
    trino:test> select * from hive_student;
     s_no | s_name | s_sex | s_birth | s_class
    ------+--------+-------+---------+---------
    (0 rows)

    Query 20231110_144640_00002_p2fru, FINISHED, 1 node
    Splits: 1 total, 1 done (100.00%)
    2.06 [0 rows, 0B] [0 rows/s, 0B/s]

    trino:test>
    trino:test>
    trino:test> show schemas;
           Schema
    --------------------
     default
     information_schema
     test
    (3 rows)

    Query 20231110_144730_00004_p2fru, FINISHED, 3 nodes
    Splits: 36 total, 36 done (100.00%)
    0.36 [3 rows, 44B] [8 rows/s, 123B/s]

    trino:test> use default;
    USE
    trino:default> show tables;
     Table
    -------
     test
    (1 row)

    Query 20231110_144739_00008_p2fru, FINISHED, 3 nodes
    Splits: 36 total, 36 done (100.00%)
    0.66 [1 rows, 21B] [1 rows/s, 32B/s]

    trino:default> select * from test;
     id
    ----
    (0 rows)

    Query 20231110_144751_00009_p2fru, FINISHED, 1 node
    Splits: 1 total, 1 done (100.00%)
    0.12 [0 rows, 0B] [0 rows/s, 0B/s]
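The interactive session above can also be scripted, which is convenient for repeating the smoke test after each redeploy. The sketch below runs `show schemas` against the hive catalog with the CLI's `--execute` and `--output-format` options and checks that the `test` schema from the session is listed. `has_schema` is a hypothetical helper, and the CLI path and server URL are simply the ones used in this article.

```shell
#!/usr/bin/env bash
# Sketch: scripted smoke test for the hive catalog.
# has_schema is a hypothetical helper that checks TSV output
# (one schema name per line) read from stdin.

has_schema() {
  # $1: schema name; succeeds if stdin contains it as a whole line.
  grep -qx -- "$1"
}

CLI="${CLI:-./trino-cli-416-executable.jar}"
SERVER="${SERVER:-http://172.16.121.114:31880}"

# Only runs when the CLI jar is present and executable.
if [ -x "$CLI" ]; then
  if "$CLI" --server "$SERVER" --user admin --catalog=hive \
       --output-format=TSV --execute='show schemas' | has_schema test; then
    echo "hive catalog reachable: schema 'test' found"
  else
    echo "schema 'test' not found in hive catalog" >&2
  fi
fi
```

Matching on whole lines avoids a false positive from a schema whose name merely contains the word being searched for.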

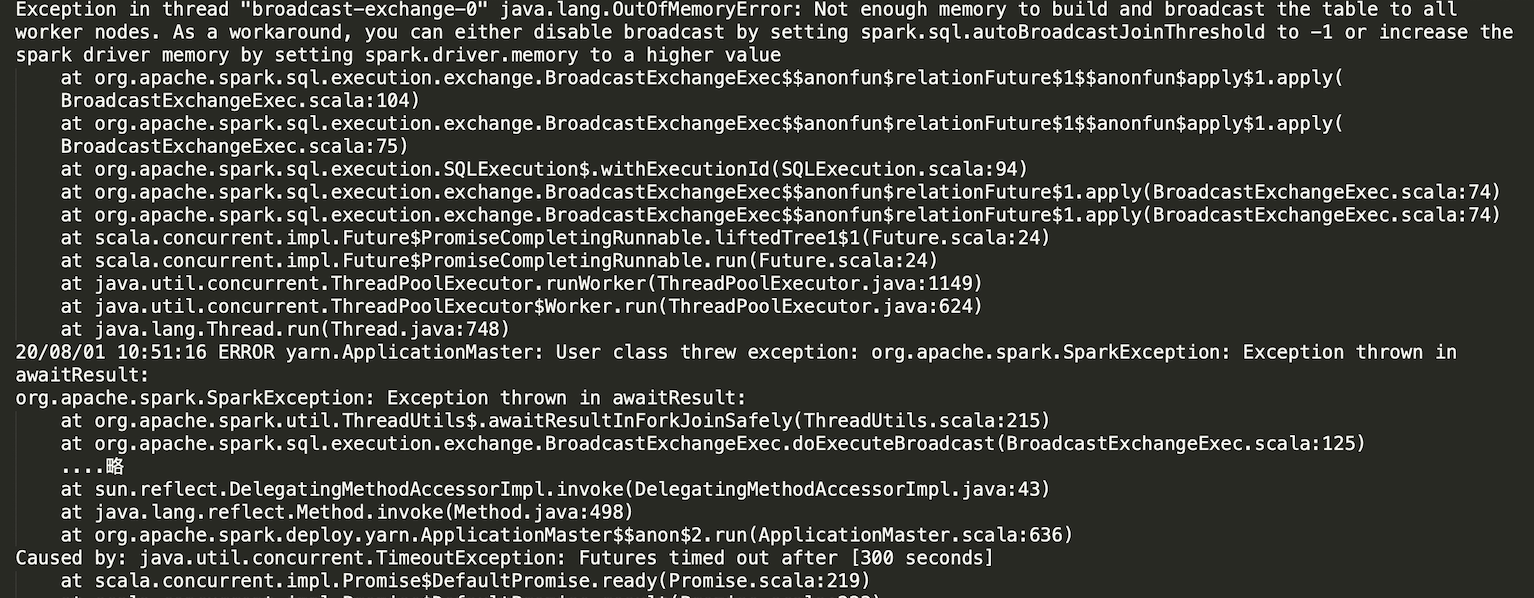

Test image export and import

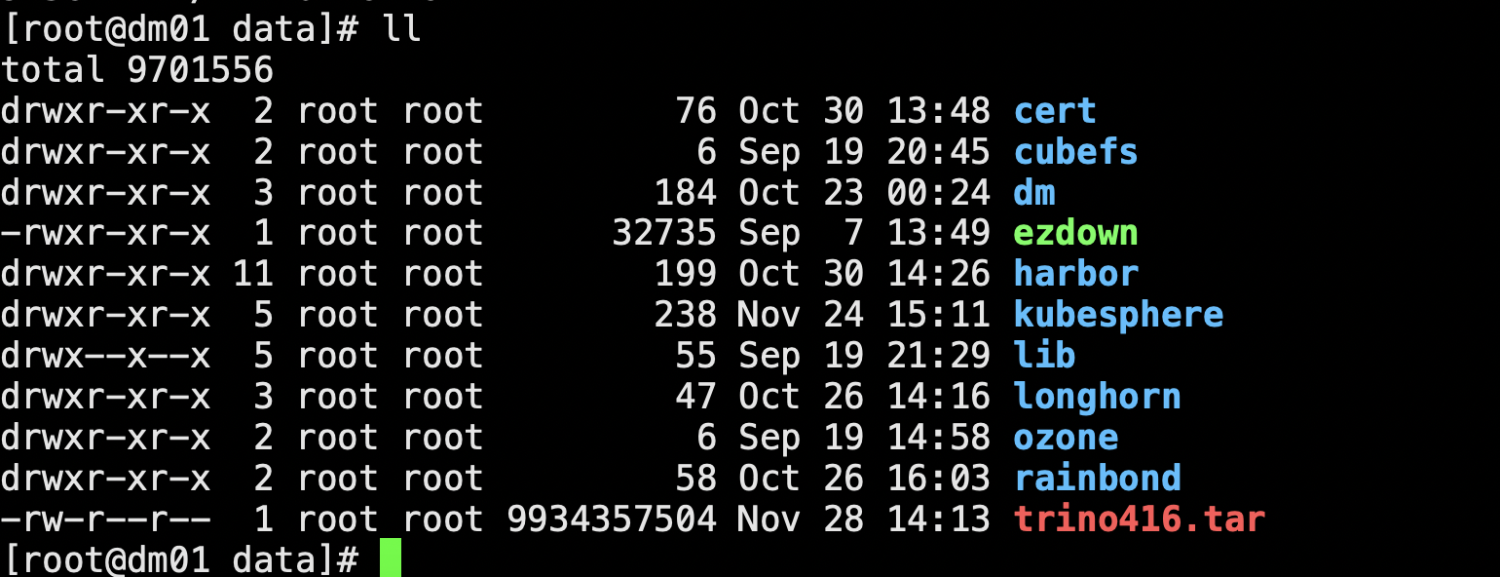

List the Trino images

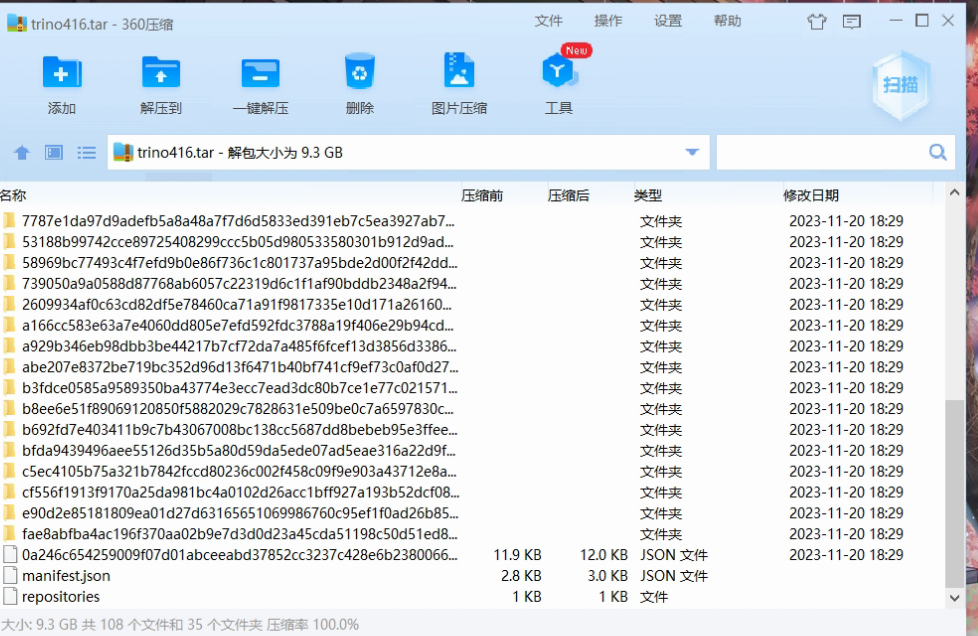

Export the image

    #docker save 0a246c654259 > /root/jiuyue/image/trino/trino:416.tar
    docker save harbor.dtstack.com:8443/dtstack/trino_test10:416 > trino416.tar

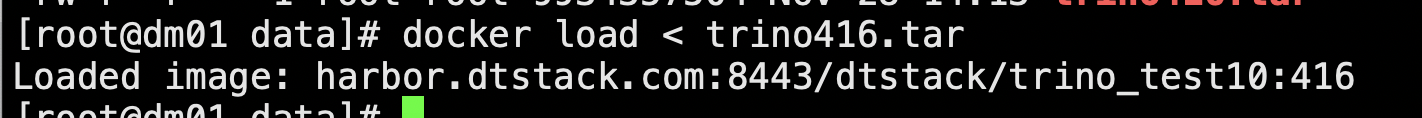

Import the image

    docker load < trino416.tar