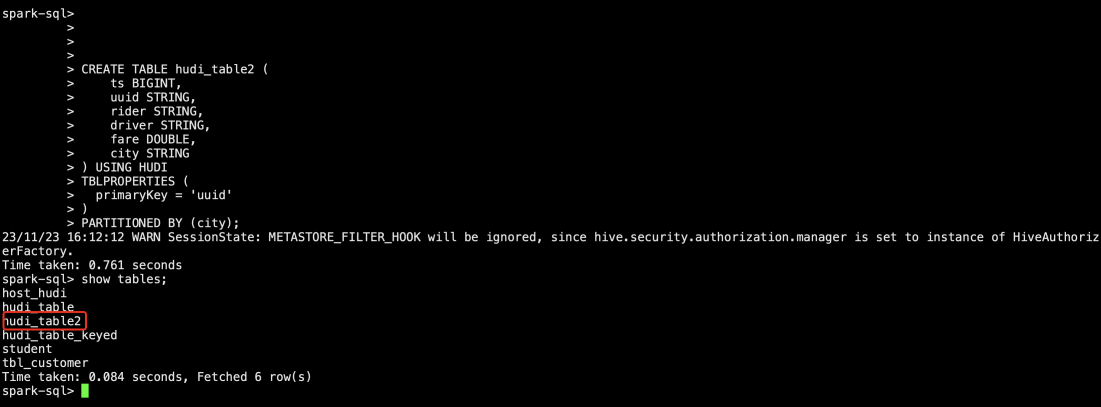

Integrating a Trino container with LDAP (Part 2)

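Prerequisites: this article assumes that the Trino container is already connected to Hive and that LDAP has already been deployed. For the prerequisite setup, see: Installing Trino with Helm and connecting it to Hive (Part 1)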

Installation and deployment

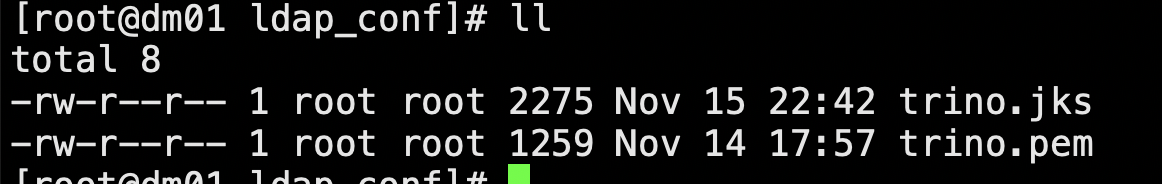

1. Set up the certificate

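Because it is not known in advance which node the coordinator will be scheduled on, the certificate must be valid for every Kubernetes node, so all node IP addresses and host names go into its Subject Alternative Name (SAN) list.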

Generate the keystore, then export the certificate in PEM format:

    keytool -genkeypair -validity 36500 \
      -ext SAN=IP:172.16.121.210,IP:172.16.121.72,IP:172.16.121.114,DNS:dm01.dtstack.com,DNS:dm02.dtstack.com,DNS:dm03.dtstack.com \
      -alias trino -keypass admin@123 -storepass admin@123 -keyalg RSA \
      -dname "CN=dm01.dtstack.com,OU=,O=,L=,ST=,C=" \
      -keystore trino.jks
    keytool -export -rfc -keystore trino.jks -alias trino -storepass admin@123 -file trino.pem
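To make sure no node is left out of the SAN list, the extension value can be generated from a node inventory instead of being typed by hand. A minimal Python sketch; build_san is a hypothetical helper (not part of keytool), and the node list is copied from the command above:

```python
import ipaddress
from typing import Iterable


def build_san(entries: Iterable[str]) -> str:
    """Build a keytool -ext value such as 'SAN=IP:10.0.0.1,DNS:node1'.

    Hypothetical helper: IP literals become IP: entries, everything else
    is treated as a DNS name.
    """
    parts = []
    for entry in entries:
        try:
            ipaddress.ip_address(entry)  # raises ValueError for host names
        except ValueError:
            parts.append(f"DNS:{entry}")
        else:
            parts.append(f"IP:{entry}")
    return "SAN=" + ",".join(parts)


if __name__ == "__main__":
    # Every Kubernetes node that could run the coordinator (from the command above)
    nodes = [
        "172.16.121.210", "172.16.121.72", "172.16.121.114",
        "dm01.dtstack.com", "dm02.dtstack.com", "dm03.dtstack.com",
    ]
    print(build_san(nodes))
```

Run as a script, it prints exactly the SAN=... value used in the keytool command, so the string can be regenerated whenever a node is added to the cluster.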

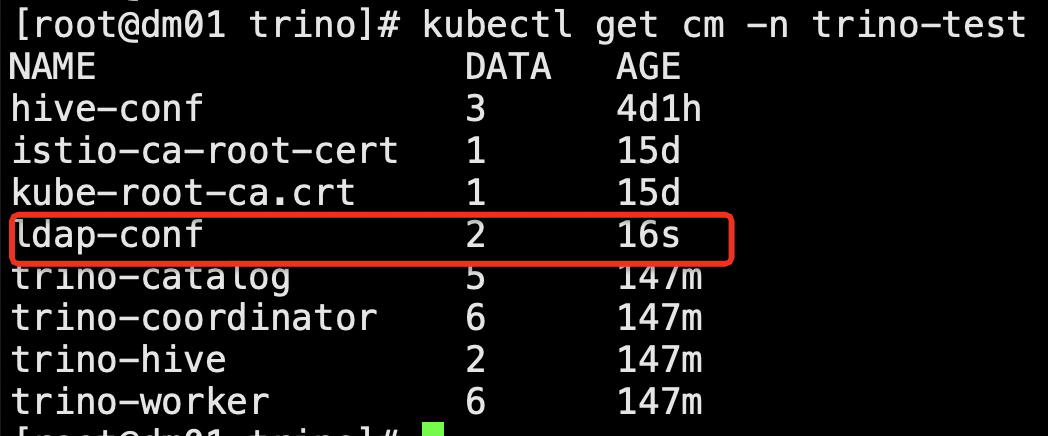

2. Create a ConfigMap and mount it

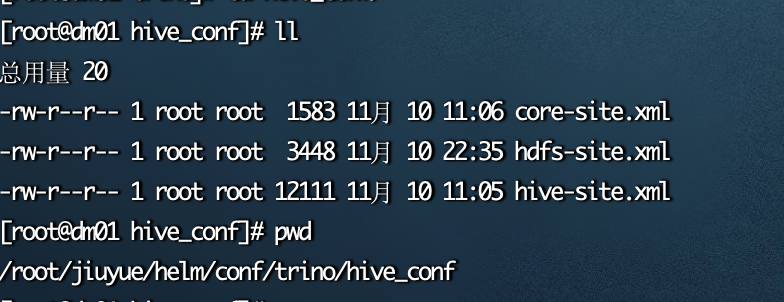

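Create an ldap_conf directory, put the generated certificate files (trino.jks and trino.pem) in it, and mount them into the containers as a ConfigMap: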

    kubectl -n trino-test create cm ldap-conf --from-file=/root/jiuyue/helm/conf/trino/ldap_conf/
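kubectl create cm --from-file stores files that are not valid UTF-8 (such as the binary trino.jks) under binaryData and text files (such as trino.pem) under data. A small Python sketch to confirm that both keys ended up in the ConfigMap; it assumes the object was exported with kubectl -n trino-test get cm ldap-conf -o json > cm.json, and missing_keys and check_cm.py are hypothetical names:

```python
import json
import sys

# Keys expected from: kubectl create cm ldap-conf --from-file=.../ldap_conf/
REQUIRED_KEYS = frozenset({"trino.jks", "trino.pem"})


def missing_keys(configmap: dict, required=REQUIRED_KEYS) -> set:
    """Return required file names found in neither data nor binaryData."""
    present = set(configmap.get("data", {})) | set(configmap.get("binaryData", {}))
    return set(required) - present


def main(argv: list) -> None:
    # Hypothetical usage:
    #   kubectl -n trino-test get cm ldap-conf -o json > cm.json
    #   python3 check_cm.py cm.json
    if len(argv) != 2:
        print("usage: check_cm.py <configmap.json>")
        return
    with open(argv[1], encoding="utf-8") as fh:
        missing = missing_keys(json.load(fh))
    if missing:
        print("missing keys:", ", ".join(sorted(missing)))
    else:
        print("ldap-conf contains trino.jks and trino.pem")


if __name__ == "__main__":
    main(sys.argv)
```

Checking both maps matters because the volume's items list refers to keys by name regardless of whether they live in data or binaryData.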

Edit deployment-coordinator.yaml and deployment-worker.yaml. Add the following entry under volumes:

    - name: ldap-trino-volume
      configMap:
        name: ldap-conf
        items:
          - key: trino.jks
            path: trino.jks
          - key: trino.pem
            path: trino.pem

and the following entry under the container's volumeMounts:

    - mountPath: /opt/apache/trino-ldap
      name: ldap-trino-volume
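The volumes entry and the volumeMounts entry are linked only by the name ldap-trino-volume, so a typo in either place breaks the pod (the API server rejects a mount whose name matches no volume). A minimal Python sketch of that check, assuming the pod template (spec.template.spec) has already been loaded into a dict, for example by parsing helm template output with a YAML library; undeclared_mounts is a hypothetical helper:

```python
def undeclared_mounts(pod_spec: dict) -> list:
    """Return 'container: volume' pairs whose volume is not declared.

    pod_spec is assumed to be the Deployment's spec.template.spec as a dict.
    """
    declared = {volume["name"] for volume in pod_spec.get("volumes", [])}
    problems = []
    for container in pod_spec.get("containers", []):
        for mount in container.get("volumeMounts", []):
            if mount["name"] not in declared:
                problems.append(f"{container['name']}: {mount['name']}")
    return problems


if __name__ == "__main__":
    # Minimal pod spec mirroring the two snippets above
    spec = {
        "volumes": [{"name": "ldap-trino-volume",
                     "configMap": {"name": "ldap-conf"}}],
        "containers": [{
            "name": "trino-coordinator",
            "volumeMounts": [{"mountPath": "/opt/apache/trino-ldap",
                              "name": "ldap-trino-volume"}],
        }],
    }
    print(undeclared_mounts(spec) or "all mounts resolve to a volume")
```

Running the check before helm install surfaces the mistake locally instead of as a failed rollout.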

The complete deployment-coordinator.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: {{ template "trino.coordinator" . }}
      labels:
        app: {{ template "trino.name" . }}
        chart: {{ template "trino.chart" . }}
        release: {{ .Release.Name }}
        heritage: {{ .Release.Service }}
        component: coordinator
    spec:
      selector:
        matchLabels:
          app: {{ template "trino.name" . }}
          release: {{ .Release.Name }}
          component: coordinator
      template:
        metadata:
          labels:
            app: {{ template "trino.name" . }}
            release: {{ .Release.Name }}
            component: coordinator
        spec:
          serviceAccountName: {{ include "trino.serviceAccountName" . }}
          {{- with .Values.securityContext }}
          securityContext:
            runAsUser: {{ .runAsUser }}
            runAsGroup: {{ .runAsGroup }}
          {{- end }}
          volumes:
            - name: hiveconf-volume
              configMap:
                name: hive-conf
                items:
                  - key: hdfs-site.xml
                    path: hdfs-site.xml
                  - key: core-site.xml
                    path: core-site.xml
                  - key: hive-site.xml
                    path: hive-site.xml
            - name: config-volume
              configMap:
                name: {{ template "trino.coordinator" . }}
            - name: ldap-trino-volume
              configMap:
                name: ldap-conf
                items:
                  - key: trino.jks
                    path: trino.jks
                  - key: trino.pem
                    path: trino.pem
            - name: catalog-volume
              configMap:
                name: {{ template "trino.catalog" . }}
            {{- if .Values.accessControl }}{{- if eq .Values.accessControl.type "configmap" }}
            - name: access-control-volume
              configMap:
                name: trino-access-control-volume-coordinator
            {{- end }}{{- end }}
            {{- if eq .Values.server.config.authenticationType "PASSWORD" }}
            - name: password-volume
              secret:
                secretName: trino-password-authentication
            {{- end }}
            {{- range .Values.secretMounts }}
            - name: {{ .name }}
              secret:
                secretName: {{ .secretName }}
            {{- end }}
          {{- if .Values.initContainers.coordinator }}
          initContainers:
          {{- tpl (toYaml .Values.initContainers.coordinator) . | nindent 6 }}
          {{- end }}
          imagePullSecrets:
            {{- toYaml .Values.imagePullSecrets | nindent 8 }}
          containers:
            - name: {{ .Chart.Name }}-coordinator
              image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
              imagePullPolicy: {{ .Values.image.pullPolicy }}
              env:
                {{- toYaml .Values.env | nindent 12 }}
              volumeMounts:
                - mountPath: /opt/apache/trino-hiveconf
                  name: hiveconf-volume
                - mountPath: {{ .Values.server.config.path }}
                  name: config-volume
                - mountPath: /opt/apache/trino-ldap
                  name: ldap-trino-volume
                - mountPath: {{ .Values.server.config.path }}/catalog
                  name: catalog-volume
                {{- if .Values.accessControl }}{{- if eq .Values.accessControl.type "configmap" }}
                - mountPath: {{ .Values.server.config.path }}/access-control
                  name: access-control-volume
                {{- end }}{{- end }}
                {{- range .Values.secretMounts }}
                - name: {{ .name }}
                  mountPath: {{ .path }}
                {{- end }}
                {{- if eq .Values.server.config.authenticationType "PASSWORD" }}
                - mountPath: {{ .Values.server.config.path }}/auth
                  name: password-volume
                {{- end }}
              ports:
                - name: http
                  containerPort: {{ .Values.service.port }}
                  protocol: TCP
                {{- range $key, $value := .Values.coordinator.additionalExposedPorts }}
                - name: {{ $value.name }}
                  containerPort: {{ $value.port }}
                  protocol: {{ $value.protocol }}
                {{- end }}
              livenessProbe:
                httpGet:
                  path: /v1/info
                  port: http
                initialDelaySeconds: {{ .Values.coordinator.livenessProbe.initialDelaySeconds | default 20 }}
                periodSeconds: {{ .Values.coordinator.livenessProbe.periodSeconds | default 10 }}
                timeoutSeconds: {{ .Values.coordinator.livenessProbe.timeoutSeconds | default 5 }}
                failureThreshold: {{ .Values.coordinator.livenessProbe.failureThreshold | default 6 }}
                successThreshold: {{ .Values.coordinator.livenessProbe.successThreshold | default 1 }}
              readinessProbe:
                httpGet:
                  path: /v1/info
                  port: http
                initialDelaySeconds: {{ .Values.coordinator.readinessProbe.initialDelaySeconds | default 20 }}
                periodSeconds: {{ .Values.coordinator.readinessProbe.periodSeconds | default 10 }}
                timeoutSeconds: {{ .Values.coordinator.readinessProbe.timeoutSeconds | default 5 }}
                failureThreshold: {{ .Values.coordinator.readinessProbe.failureThreshold | default 6 }}
                successThreshold: {{ .Values.coordinator.readinessProbe.successThreshold | default 1 }}
              resources:
                {{- toYaml .Values.coordinator.resources | nindent 12 }}
          {{- with .Values.coordinator.nodeSelector }}
          nodeSelector:
            {{- toYaml . | nindent 8 }}
          {{- end }}
          {{- with .Values.coordinator.affinity }}
          affinity:
            {{- toYaml . | nindent 8 }}
          {{- end }}
          {{- with .Values.coordinator.tolerations }}
          tolerations:
            {{- toYaml . | nindent 8 }}
          {{- end }}

The complete deployment-worker.yaml:

{{- if gt (int .Values.server.workers) 0 }}

apiVersion: apps/v1

kind: Deployment

metadata:

name: {{ template "trino.worker" . }}

labels:

app: {{ template "trino.name" . }}

chart: {{ template "trino.chart" . }}

release: {{ .Release.Name }}

heritage: {{ .Release.Service }}

component: worker

spec:

replicas: {{ .Values.server.workers }}

selector:

matchLabels:

app: {{ template "trino.name" . }}

release: {{ .Release.Name }}

component: worker

template:

metadata:

labels:

app: {{ template "trino.name" . }}

release: {{ .Release.Name }}

component: worker

spec:

serviceAccountName: {{ include "trino.serviceAccountName" . }}

volumes:

- name: hiveconf-volume

configMap:

name: hive-conf

items:

- key: hdfs-site.xml

path: hdfs-site.xml

- key: core-site.xml

path: core-site.xml

- key: hive-site.xml

path: hive-site.xml

- name: config-volume

configMap:

name: {{ template "trino.worker" . }}

- name: ldap-trino-volume

configMap:

name: ldap-conf

items:

- key: trino.jks

path: trino.jks

- key: trino.pem

path: trino.pem

- name: catalog-volume

configMap:

name: {{ template "trino.catalog" . }}

{{- if .Values.initContainers.worker }}

initContainers:

{{- tpl (toYaml .Values.initContainers.worker) . | nindent 6 }}

{{- end }}

imagePullSecrets:

{{- toYaml .Values.imagePullSecrets | nindent 8 }}

containers:

- name: {{ .Chart.Name }}-worker

image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"

imagePullPolicy: {{ .Values.image.pullPolicy }}

env:

{{- toYaml .Values.env | nindent 12 }}

volumeMounts:

- mountPath: /opt/apache/trino-hiveconf

name: hiveconf-volume

- mountPath: {{ .Values.server.config.path }}

name: config-volume

- mountPath: /opt/apache/trino-ldap

name: ldap-trino-volume

- mountPath: {{ .Values.server.config.path }}/catalog

name: catalog-volume

ports:

- name: http

containerPort: {{ .Values.service.port }}

protocol: TCP

{{- range $key, $value := .Values.worker.additionalExposedPorts }}

- name: {{ $value.name }}

containerPort: {{ $value.port }}

protocol: {{ $value.protocol }}

{{- end }}

livenessProbe:

httpGet:

path: /v1/info

port: http

initialDelaySeconds: {{ .Values.worker.livenessProbe.initialDelaySeconds | default 20 }}

periodSeconds: {{ .Values.worker.livenessProbe.periodSeconds | default 10 }}

timeoutSeconds: {{ .Values.worker.livenessProbe.timeoutSeconds | default 5 }}

failureThreshold: {{ .Values.worker.livenessProbe.failureThreshold | default 6 }}

successThreshold: {{ .Values.worker.livenessProbe.successThreshold | default 1 }}

readinessProbe:

httpGet:

path: /v1/info

port: http

initialDelaySeconds: {{ .Values.worker.readinessProbe.initialDelaySeconds | default 20 }}

periodSeconds: {{ .Values.worker.readinessProbe.periodSeconds | default 10 }}

timeoutSeconds: {{ .Values.worker.readinessProbe.timeoutSeconds | default 5 }}

failureThreshold: {{ .Values.worker.readinessProbe.failureThreshold | default 6 }}

successThreshold: {{ .Values.worker.readinessProbe.successThreshold | default 1 }}

resources:

{{- toYaml .Values.worker.resources | nindent 12 }}

{{- with .Values.worker.nodeSelector }}

nodeSelector:

{{- toYaml . | nindent 8 }}

{{- end }}

{{- with .Values.worker.affinity }}

affinity:

{{- toYaml . | nindent 8 }}

{{- end }}

{{- with .Values.worker.tolerations }}

tolerations:

{{- toYaml . | nindent 8 }}

{{- end }}

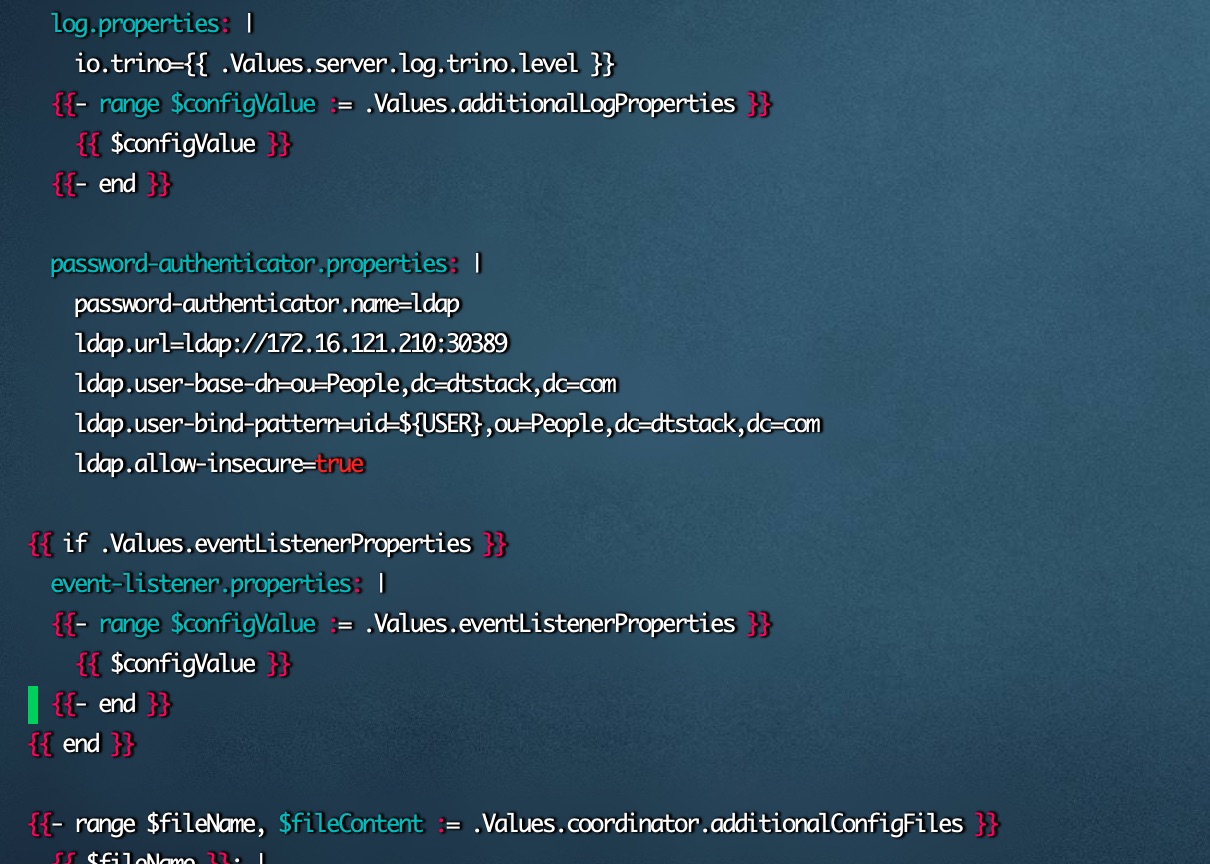

{{- end }}3、配置password-authenticator.properties配置文件

The etc directory (server.config.path) is already mounted from the chart's ConfigMaps, so the new file can simply be added to configmap-coordinator.yaml and configmap-worker.yaml.

Go to the templates directory of the downloaded chart and open configmap-coordinator.yaml:

    cd /root/jiuyue/helm/trino/templates
    vim configmap-coordinator.yaml

Add the following entry where the other configuration files are declared. ldap.allow-insecure=true is required because the URL uses plain ldap:// rather than ldaps://.

    password-authenticator.properties: |
      password-authenticator.name=ldap
      ldap.url=ldap://172.16.121.210:30389
      ldap.user-base-dn=ou=People,dc=dtstack,dc=com
      ldap.user-bind-pattern=uid=${USER},ou=People,dc=dtstack,dc=com
      ldap.allow-insecure=true

Add the same entry to configmap-worker.yaml:

    vim configmap-worker.yaml

    password-authenticator.properties: |
      password-authenticator.name=ldap
      ldap.url=ldap://172.16.121.210:30389
      ldap.user-base-dn=ou=People,dc=dtstack,dc=com
      ldap.user-bind-pattern=uid=${USER},ou=People,dc=dtstack,dc=com
      ldap.allow-insecure=true
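ldap.user-bind-pattern tells Trino which DN to bind as: ${USER} is replaced with the login name, and authentication succeeds only if LDAP accepts a bind with that DN and the supplied password. The expansion is sketched below in Python; bind_dn is a hypothetical helper doing plain string substitution, and it does not model any DN escaping Trino may apply:

```python
BIND_PATTERN = "uid=${USER},ou=People,dc=dtstack,dc=com"


def bind_dn(user: str, pattern: str = BIND_PATTERN) -> str:
    """Expand ${USER} in the bind pattern configured above.

    Hypothetical helper: plain substitution, no RFC 4514 escaping.
    """
    if not user:
        raise ValueError("user name must not be empty")
    return pattern.replace("${USER}", user)


if __name__ == "__main__":
    # The smoke test later logs in with --user test
    print(bind_dn("test"))
```

For the smoke test's --user test, the DN is uid=test,ou=People,dc=dtstack,dc=com, so that entry must exist in LDAP with a password set.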

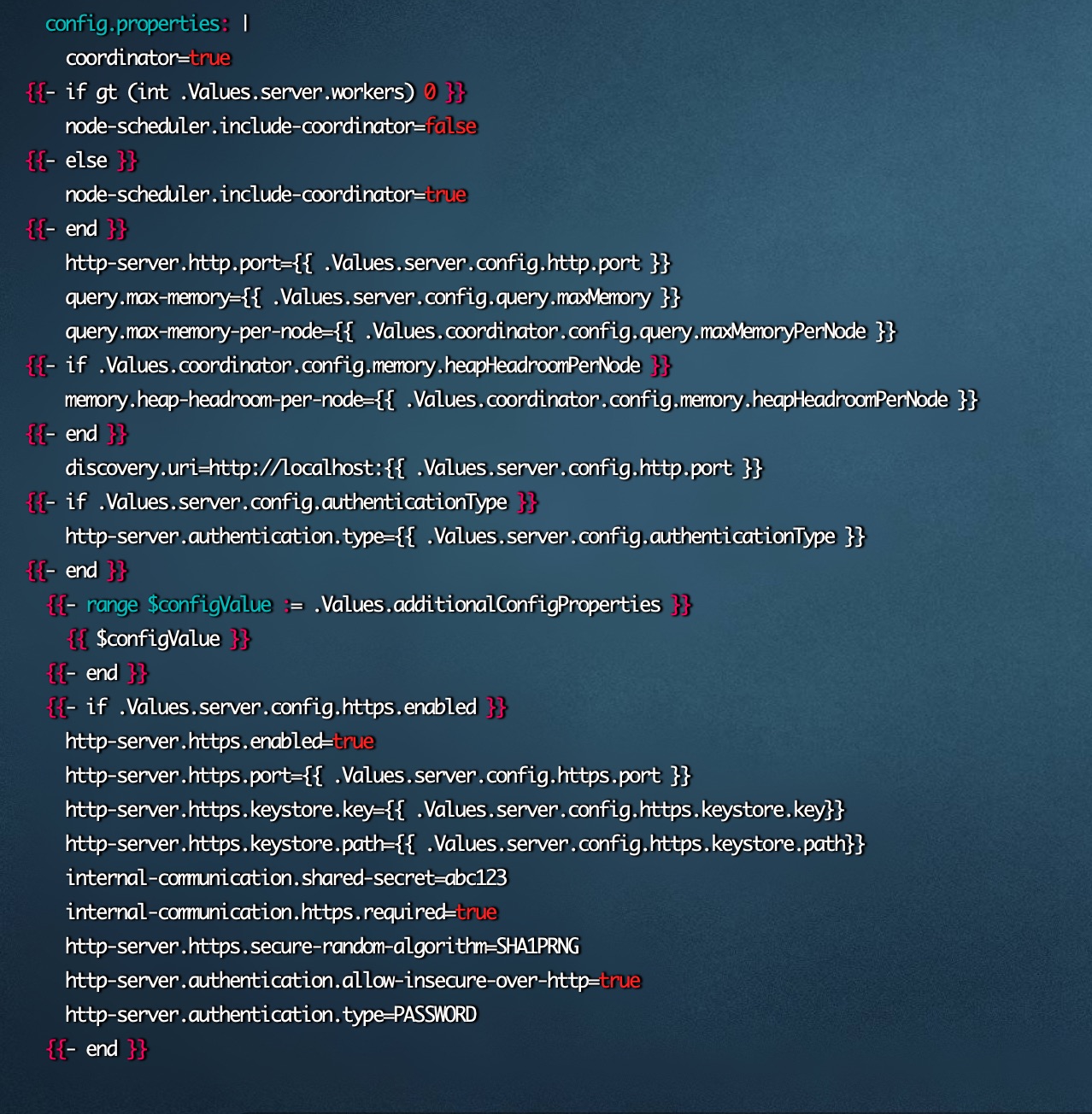

4. Add settings to config.properties

As in the previous step, find where config.properties is declared and add the settings directly in configmap-coordinator.yaml and configmap-worker.yaml:

    vi configmap-coordinator.yaml

    {{- if .Values.server.config.https.enabled }}
    http-server.https.enabled=true
    http-server.https.port={{ .Values.server.config.https.port }}
    http-server.https.keystore.key={{ .Values.server.config.https.keystore.key}}
    http-server.https.keystore.path={{ .Values.server.config.https.keystore.path}}
    internal-communication.shared-secret=abc123
    internal-communication.https.required=true
    http-server.https.secure-random-algorithm=SHA1PRNG
    http-server.authentication.allow-insecure-over-http=true
    http-server.authentication.type=PASSWORD
    {{- end }}

    vi configmap-worker.yaml

    {{- if .Values.server.config.https.enabled }}
    http-server.https.enabled=true
    http-server.https.port={{ .Values.server.config.https.port }}
    http-server.https.keystore.key={{ .Values.server.config.https.keystore.key}}
    http-server.https.keystore.path={{ .Values.server.config.https.keystore.path}}
    internal-communication.shared-secret=abc123
    internal-communication.https.required=true
    http-server.https.secure-random-algorithm=SHA1PRNG
    http-server.authentication.allow-insecure-over-http=true
    http-server.authentication.type=PASSWORD
    {{- end }}
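Every node in the cluster must use the same internal-communication.shared-secret, and a short fixed value such as abc123 is easy to guess. A Python sketch that generates a random secret and checks that the rendered coordinator and worker config.properties agree; the helper names are hypothetical, and the parser handles only simple key=value lines, not the full Java properties syntax:

```python
import secrets

SECRET_KEY = "internal-communication.shared-secret"


def generate_shared_secret(nbytes: int = 64) -> str:
    """Random URL-safe string to use instead of a fixed value like abc123."""
    return secrets.token_urlsafe(nbytes)


def parse_properties(text: str) -> dict:
    """Very small key=value parser (no line continuations or ':' separators)."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:  # lines without '=', such as {{- end }}, are skipped
            props[key.strip()] = value.strip()
    return props


def secrets_match(coordinator_text: str, worker_text: str) -> bool:
    """True if both configs define the same, non-empty shared secret."""
    coordinator = parse_properties(coordinator_text).get(SECRET_KEY)
    worker = parse_properties(worker_text).get(SECRET_KEY)
    return bool(coordinator) and coordinator == worker


if __name__ == "__main__":
    print(generate_shared_secret())
```

The check is meant for the rendered files (for example the config.properties sections of helm template output), where the Helm expressions have already been substituted.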

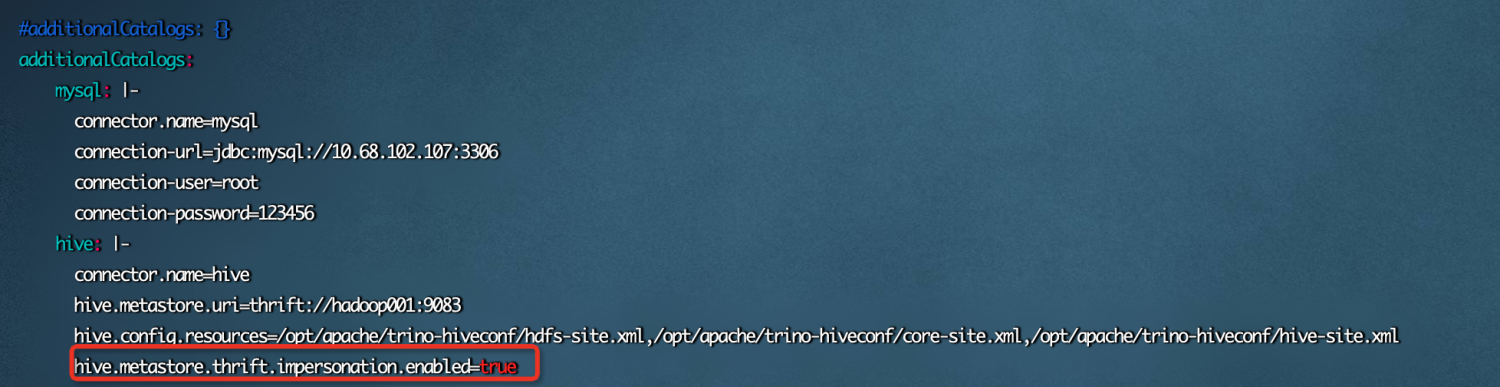

5. Add a setting to hive.properties

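hive.properties is exposed in values.yaml, so add the setting there. It enables Hive Metastore end-user impersonation: Trino accesses the metastore on behalf of the authenticated user rather than as the Trino service user.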

    hive.metastore.thrift.impersonation.enabled=true

6. Configure HTTPS

The HTTPS settings are also exposed in values.yaml; edit them there:

    server:
      workers: 2
      node:
        environment: production
        dataDir: /opt/apache/trino-server-416/data
        pluginDir: /opt/apache/trino-server-416/plugin
      log:
        trino:
          level: INFO
      config:
        path: /opt/apache/trino-server-416/etc
        http:
          port: 8080
        https:
          enabled: true
          port: 8443
          keystore:
            key: "admin@123"
            path: "/opt/apache/trino-ldap/trino.jks"
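keystore.path is resolved inside the container, so it has to point into the directory where ldap-trino-volume is mounted (/opt/apache/trino-ldap) and name one of the files projected by that volume's items list. A Python sketch of this consistency check; the constant and helper names are hypothetical, with values copied from step 2:

```python
from pathlib import PurePosixPath

# Values copied from the volume and volumeMount added in step 2
LDAP_MOUNT = PurePosixPath("/opt/apache/trino-ldap")
PROJECTED_FILES = {"trino.jks", "trino.pem"}


def keystore_path_ok(path: str) -> bool:
    """True if path is a projected file directly under the LDAP mount."""
    p = PurePosixPath(path)
    return p.parent == LDAP_MOUNT and p.name in PROJECTED_FILES


if __name__ == "__main__":
    print(keystore_path_ok("/opt/apache/trino-ldap/trino.jks"))
```

A keystore file name that differs from the ConfigMap key (for example trino4.jks) fails this check, which is exactly the case where Trino would not find the keystore at startup.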

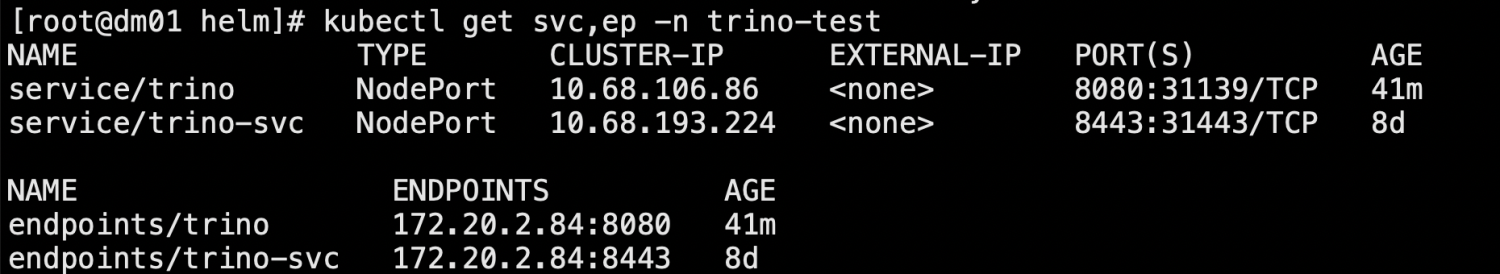

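Create a Service for HTTPS: export the existing trino Service to trino-service.yaml, delete the version- and timestamp-related fields, and configure the port to expose.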

    kubectl -n trino-test get svc trino -o yaml > trino-service.yaml
    vim trino-service.yaml

After editing, trino-service.yaml looks like this:

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: trino
        app.kubernetes.io/managed-by: Helm
        chart: trino-0.10.2
        heritage: Helm
        release: trino
      name: trino-svc
      namespace: trino-test
    spec:
      ports:
        - name: https
          nodePort: 31443
          port: 8443
          protocol: TCP
          targetPort: 8443
      selector:
        app: trino
        component: coordinator
        release: trino
      type: NodePort

    kubectl apply -f trino-service.yaml
    kubectl get svc,ep -n trino-test
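Removing the server-generated fields by hand is easy to get wrong. A Python sketch of the same cleanup, working on the JSON export (kubectl -n trino-test get svc trino -o json) so that only the standard library is needed. It also drops spec.clusterIP/clusterIPs, because a new Service cannot claim an address already allocated to trino, and removes all annotations, including Helm's meta.helm.sh/* ones. The field list and clean_service are assumptions, not an exhaustive rule:

```python
import copy
import json
import sys

# Fields filled in by the API server or by Helm (assumed list, not exhaustive)
METADATA_FIELDS = ("resourceVersion", "uid", "creationTimestamp",
                   "generation", "managedFields", "annotations", "selfLink")
SPEC_FIELDS = ("clusterIP", "clusterIPs")


def clean_service(svc: dict, name: str = "trino-svc",
                  port: int = 8443, node_port: int = 31443) -> dict:
    """Return a copy of an exported Service turned into an HTTPS NodePort."""
    out = copy.deepcopy(svc)
    out.pop("status", None)
    meta = out.setdefault("metadata", {})
    for field in METADATA_FIELDS:
        meta.pop(field, None)
    meta["name"] = name
    spec = out.setdefault("spec", {})
    for field in SPEC_FIELDS:
        spec.pop(field, None)
    spec["type"] = "NodePort"
    spec["ports"] = [{"name": "https", "port": port, "targetPort": port,
                      "nodePort": node_port, "protocol": "TCP"}]
    return out


if __name__ == "__main__":
    # Hypothetical usage: python3 clean_svc.py svc.json > trino-service.json
    if len(sys.argv) == 2:
        with open(sys.argv[1], encoding="utf-8") as fh:
            print(json.dumps(clean_service(json.load(fh)), indent=2))
    else:
        print("usage: clean_svc.py <service.json>")
```

kubectl apply -f accepts the resulting JSON file just as it accepts YAML; labels, namespace and selector are carried over unchanged.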

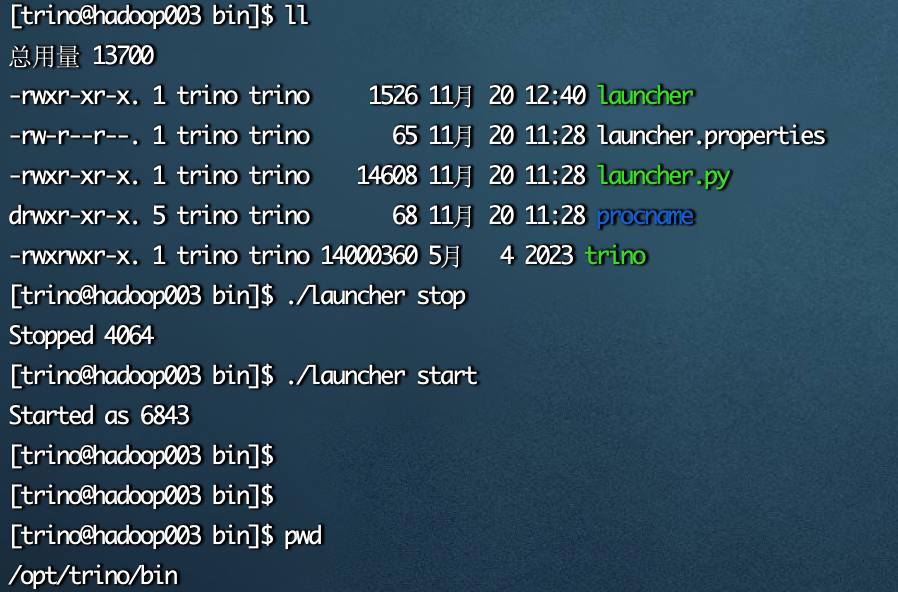

7. Reinstall Trino

    helm -n trino-test uninstall trino
    helm install trino /root/jiuyue/helm/trino/ -n trino-test

Smoke test

Run the test from the host where the Trino client is installed.

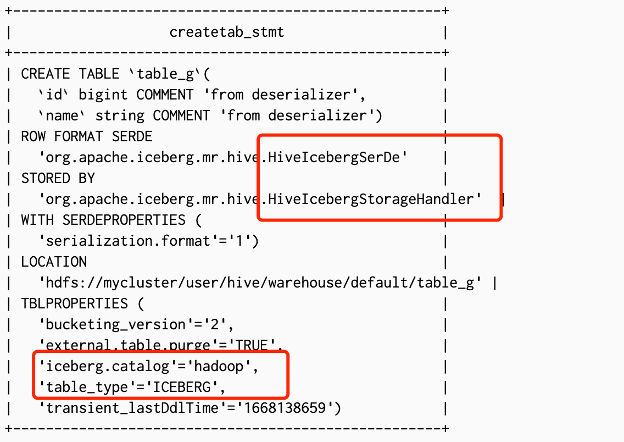

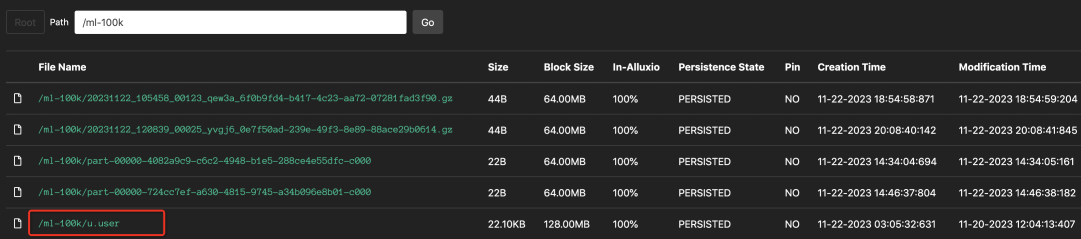

    [root@dm01 trino]# ./trino-cli-416-executable.jar --server https://172.16.121.72:31443 --keystore-path /root/jiuyue/helm/conf/trino/ldap_conf/trino.jks --keystore-password admin@123 --catalog hive --user test --password
    Password:
    trino> show schemas;
           Schema
    --------------------
     default
     information_schema
     test
    (3 rows)

    Query 20231116_031739_00007_cghf7, FINISHED, 3 nodes
    Splits: 36 total, 36 done (100.00%)
    0.31 [3 rows, 44B] [9 rows/s, 144B/s]

    trino> use test;
    USE
    trino:test> show tables;
         Table
    ---------------
     hive_student
     hive_student1
     u_user
    (3 rows)

    Query 20231116_031751_00011_cghf7, FINISHED, 3 nodes
    Splits: 36 total, 36 done (100.00%)
    0.23 [3 rows, 73B] [12 rows/s, 313B/s]

    trino:test> select * from hive_student1;
     s_no | s_name | s_sex | s_birth    | s_class
    ------+--------+-------+------------+---------
     108  | 曾华   | 男    | 1977-09-01 | 95033
     107  | 曾华   | 男    | 1977-09-01 | 95033
    (2 rows)

    Query 20231116_031806_00012_cghf7, FINISHED, 2 nodes
    Splits: 2 total, 2 done (100.00%)
    1.18 [2 rows, 64B] [1 rows/s, 54B/s]

    trino:test> insert into test values(108,'张三',95033);
    INSERT: 1 row

    Query 20231119_035335_00013_bybwu, FINISHED, 3 nodes
    https://172.16.121.114:31443/ui/query.html?20231119_035335_00013_bybwu
    Splits: 50 total, 50 done (100.00%)
    CPU Time: 0.5s total, 0 rows/s, 0B/s, 55% active
    Per Node: 0.1 parallelism, 0 rows/s, 0B/s
    Parallelism: 0.3
    Peak Memory: 2.99KB
    1.72 [0 rows, 0B] [0 rows/s, 0B/s]

    trino:test> select * from test limit 10;
     id  | name |  age
    -----+------+-------
     108 | 张三 | 95033
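The CLI check can also be scripted. Trino's password authentication is carried as HTTP Basic credentials (so it should only travel over HTTPS), and queries are submitted with POST /v1/statement. A Python sketch that only builds such a request with the standard library; build_statement_request and tls_context are hypothetical helpers. Actually sending it with urllib.request.urlopen(req, context=tls_context("/root/jiuyue/helm/conf/trino/ldap_conf/trino.pem")) requires the NodePort to be reachable, and the client must then keep following the nextUri field of each JSON response until the query finishes:

```python
import base64
import ssl
import urllib.request


def build_statement_request(server: str, user: str, password: str,
                            sql: str, catalog: str = "hive") -> urllib.request.Request:
    """Build (but do not send) a POST /v1/statement request with Basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=server.rstrip("/") + "/v1/statement",
        data=sql.encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Basic {token}",
            "X-Trino-User": user,
            "X-Trino-Catalog": catalog,
        },
    )


def tls_context(pem_path: str) -> ssl.SSLContext:
    """Trust the self-signed certificate exported in step 1 (trino.pem)."""
    return ssl.create_default_context(cafile=pem_path)


if __name__ == "__main__":
    req = build_statement_request("https://172.16.121.72:31443", "test",
                                  "<password>", "show schemas")
    print(req.get_method(), req.full_url)
```

Because the default SSL context verifies the host name, the connection only succeeds if the node address used in the URL is one of the SAN entries from step 1.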