Enabling Kerberos in Ambari + Security Plan

Installing Kerberos

Install the Kerberos packages on the server node

yum install -y krb5-server krb5-workstation krb5-libs

Install on the client nodes

yum install -y krb5-workstation krb5-libs

PS: Offline installation

Prepare the following packages

krb5-libs-1.15.1-55.el7_9.x86_64.rpm

krb5-server-1.15.1-55.el7_9.x86_64.rpm (install on the server only, not on the other nodes)

krb5-workstation-1.15.1-55.el7_9.x86_64.rpm

libevent-2.0.21-4.el7.x86_64.rpm

libkadm5-1.15.1-55.el7_9.x86_64.rpm

libverto-libevent-0.2.5-4.el7.x86_64.rpm

words-3.0-22.el7.noarch.rpm

rpm -ivh <package-name>
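
Installing the packages one at a time is easy to get wrong, because krb5-server belongs only on the server node. The following is a minimal sketch of one way to pick the right files per role. The function name `select_krb5_rpms` and the directory `/opt/krb5-rpms` are hypothetical and not part of the original procedure.

```shell
# Print the RPM files in a directory that should be installed for a role.
# role "server" keeps every package; any other role skips krb5-server.
select_krb5_rpms() {
    local dir="$1" role="$2" f
    for f in "$dir"/*.rpm; do
        [ -e "$f" ] || continue          # the directory holds no .rpm files
        case "$(basename "$f")" in
            krb5-server-*) [ "$role" = "server" ] || continue ;;
        esac
        printf '%s\n' "$f"
    done
}

# Usage (hypothetical directory; passes all selected files to one rpm call):
#   select_krb5_rpms /opt/krb5-rpms client | xargs rpm -ivh
```

Passing all files to a single `rpm -ivh` call lets rpm order the packages by their dependencies.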

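Edit the configuration file krb5.conf

(The following must be configured on both the server and the clients. You can configure it on the server first and then copy it to the other nodes with scp.)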

vim /etc/krb5.conf

# Configuration snippets may be placed in this directory as well

includedir /etc/krb5.conf.d/

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

dns_lookup_realm = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

rdns = false

pkinit_anchors = FILE:/etc/pki/tls/certs/ca-bundle.crt

default_realm = HADOOP.COM

default_ccache_name = KEYRING:persistent:%{uid}

[realms]

HADOOP.COM = {

kdc = 172.16.120.50

admin_server = 172.16.120.50

}

[domain_realm]

Distribute the file to the other nodes

scp /etc/krb5.conf hdp01:/etc

scp /etc/krb5.conf hdp02:/etc

scp /etc/krb5.conf hdp03:/etc
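
With more than a few nodes, the same copy can be written as a loop. This is only a sketch: the function name `distribute_krb5_conf` and the `DRY_RUN` switch are hypothetical additions, and the host names are the ones used above.

```shell
# Copy /etc/krb5.conf to each host given as an argument.
# With DRY_RUN=1 the commands are only printed, not executed.
distribute_krb5_conf() {
    local host
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "scp /etc/krb5.conf ${host}:/etc/krb5.conf"
        else
            scp /etc/krb5.conf "${host}:/etc/krb5.conf" || return 1
        fi
    done
}

# Usage:
#   DRY_RUN=1 distribute_krb5_conf hdp01 hdp02 hdp03   # preview
#   distribute_krb5_conf hdp01 hdp02 hdp03             # copy
```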

Edit the configuration file kdc.conf on the server

vim /var/kerberos/krb5kdc/kdc.conf

[kdcdefaults]

kdc_ports = 88

kdc_tcp_ports = 88

[realms]

HADOOP.COM = {

#master_key_type = aes256-cts

acl_file = /var/kerberos/krb5kdc/kadm5.acl

dict_file = /usr/share/dict/words

admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab

supported_enctypes = aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal

}
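
The realm name in kdc.conf has to match default_realm in krb5.conf (HADOOP.COM here). A rough consistency check could look like the sketch below. The function names are hypothetical, and the parsing assumes the same spacing as the files shown above ("key = value" and "REALM = {").

```shell
# Print the default_realm value from a krb5.conf-style file.
default_realm_of() {
    awk '$1 == "default_realm" && $2 == "=" { print $3; exit }' "$1"
}

# Succeed if the kdc.conf-style file contains a line "<REALM> = {".
kdc_conf_has_realm() {
    awk -v realm="$2" '
        $1 == realm && $2 == "=" && $3 == "{" { found = 1 }
        END { exit !found }
    ' "$1"
}

# Usage:
#   realm=$(default_realm_of /etc/krb5.conf)
#   kdc_conf_has_realm /var/kerberos/krb5kdc/kdc.conf "$realm" \
#       || echo "realm $realm is not defined in kdc.conf"
```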

Configuration phase

Create the Kerberos database

[root@hdp01 ~]# kdb5_util create -s -r HADOOP.COM

Loading random data

Initializing database '/var/kerberos/krb5kdc/principal' for realm 'HADOOP.COM',

master key name 'K/M@HADOOP.COM'

You will be prompted for the database Master Password.

It is important that you NOT FORGET this password.

Enter KDC database master key:

Re-enter KDC database master key to verify:

(The password entered in this example is 123456.)

[root@hdp01 ~]#
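
After the database is created, it can help to confirm that the files are in place before moving on. The sketch below assumes the database path from the output above and assumes that the `-s` option wrote the stash file under its default name `.k5.<REALM>` in the same directory. The function name `kdc_db_ready` is hypothetical.

```shell
# Succeed if both the principal database and the stash file exist.
kdc_db_ready() {
    local dir="$1" realm="$2"
    [ -f "$dir/principal" ] && [ -f "$dir/.k5.$realm" ]
}

# Usage:
#   kdc_db_ready /var/kerberos/krb5kdc HADOOP.COM \
#       && echo "database and stash file present"
```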

Create the administrator principal admin

[root@hdp01 ~]# kadmin.local -q "addprinc admin/admin"

Authenticating as principal root/admin@HADOOP.COM with password.

WARNING: no policy specified for admin/admin@HADOOP.COM; defaulting to no policy

Enter password for principal "admin/admin@HADOOP.COM":

Re-enter password for principal "admin/admin@HADOOP.COM":

Principal "admin/admin@HADOOP.COM" created.

(The password entered in this example is 123456.)

[root@hdp01 ~]#

Grant ACL permissions to the administrator account

[root@hdp01 ~]# cat /var/kerberos/krb5kdc/kadm5.acl

*/admin@HADOOP.COM *
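
The `cat` output above only shows the expected content of the file. One possible way to write that line is sketched below; the function name `write_admin_acl` is hypothetical, and the function overwrites the target file.

```shell
# Write a single ACL entry granting all privileges to */admin in the realm.
# Note: this replaces any existing content of the ACL file.
write_admin_acl() {
    local acl_file="$1" realm="$2"
    printf '*/admin@%s *\n' "$realm" > "$acl_file"
}

# Usage:
#   write_admin_acl /var/kerberos/krb5kdc/kadm5.acl HADOOP.COM
```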

Start the services and enable them at boot

systemctl start krb5kdc

systemctl start kadmin

systemctl enable krb5kdc

systemctl enable kadmin

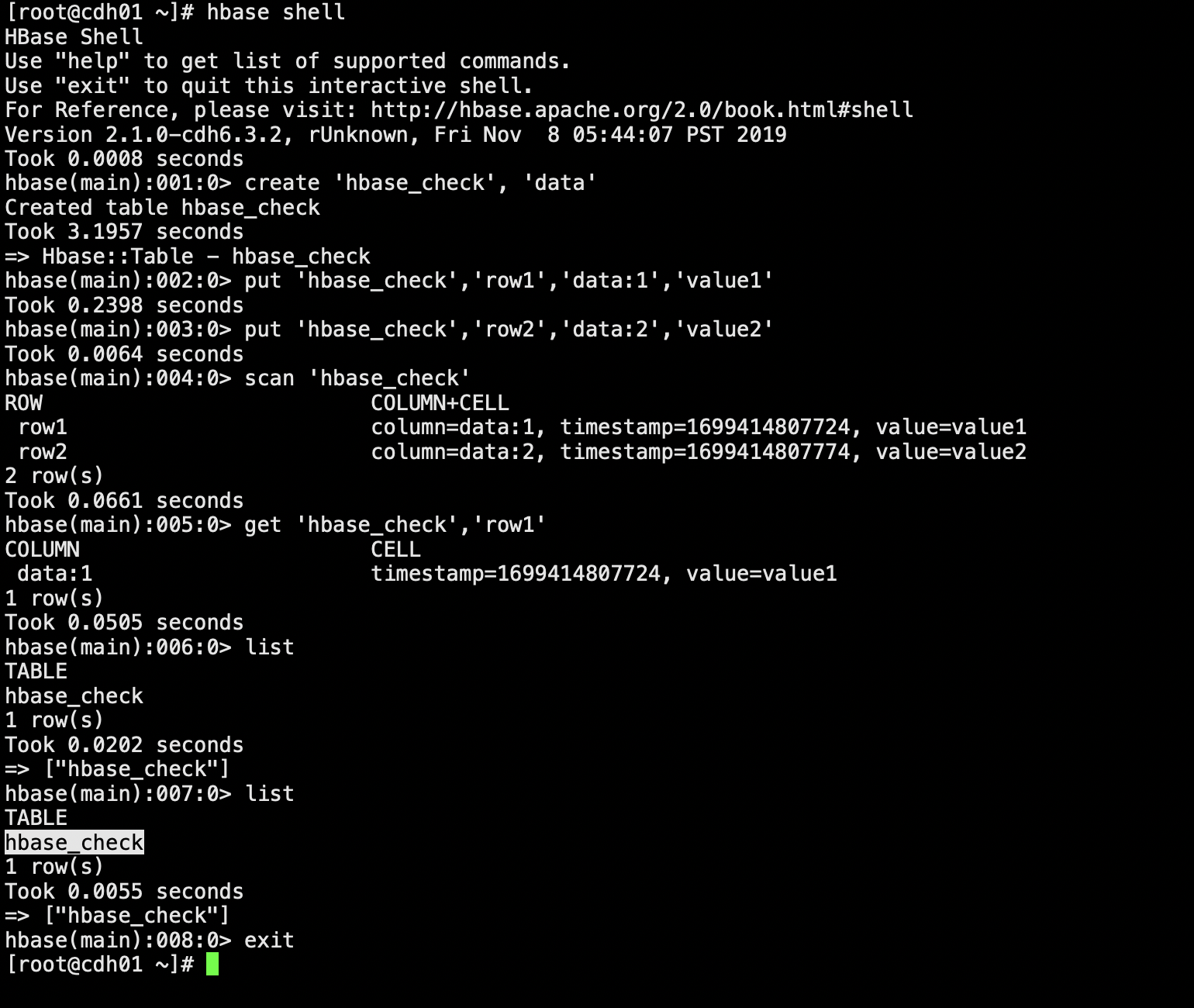

Test the connection from a client

[root@hdp01 ~]# kadmin -p admin/admin

Authenticating as principal admin/admin with password.

Password for admin/admin@HADOOP.COM:

kadmin: listprincs

K/M@HADOOP.COM

admin/admin@HADOOP.COM

kadmin/admin@HADOOP.COM

kadmin/changepw@HADOOP.COM

kadmin/hdp01@HADOOP.COM

kiprop/hdp01@HADOOP.COM

krbtgt/HADOOP.COM@HADOOP.COM
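
The same check can be scripted instead of read by eye. The sketch below reads `listprincs` output on standard input and reports any expected principal that is missing; the function name `missing_principals` is hypothetical. It matches whole lines only, so the "Authenticating as ..." banner is ignored.

```shell
# Read listprincs output on stdin and report expected principals that are absent.
# Returns 0 if every principal given as an argument is present, 1 otherwise.
missing_principals() {
    local list p status=0
    list="$(cat)"
    for p in "$@"; do
        if ! printf '%s\n' "$list" | grep -Fxq "$p"; then
            echo "missing: $p"
            status=1
        fi
    done
    return "$status"
}

# Usage (prompts for the admin/admin password):
#   kadmin -p admin/admin -q listprincs \
#       | missing_principals admin/admin@HADOOP.COM krbtgt/HADOOP.COM@HADOOP.COM
```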

Restart ambari-server

ambari-server restart

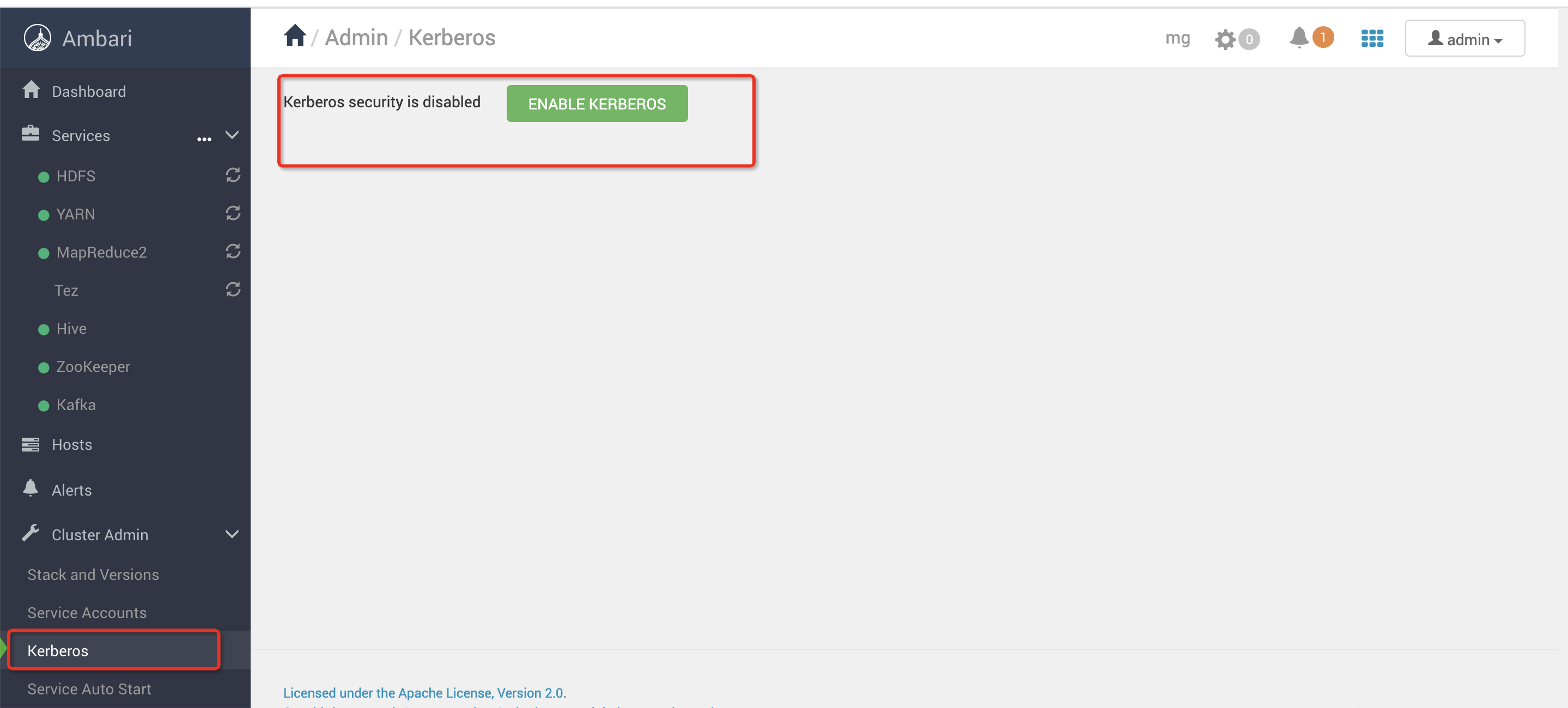

Add Kerberos in Ambari

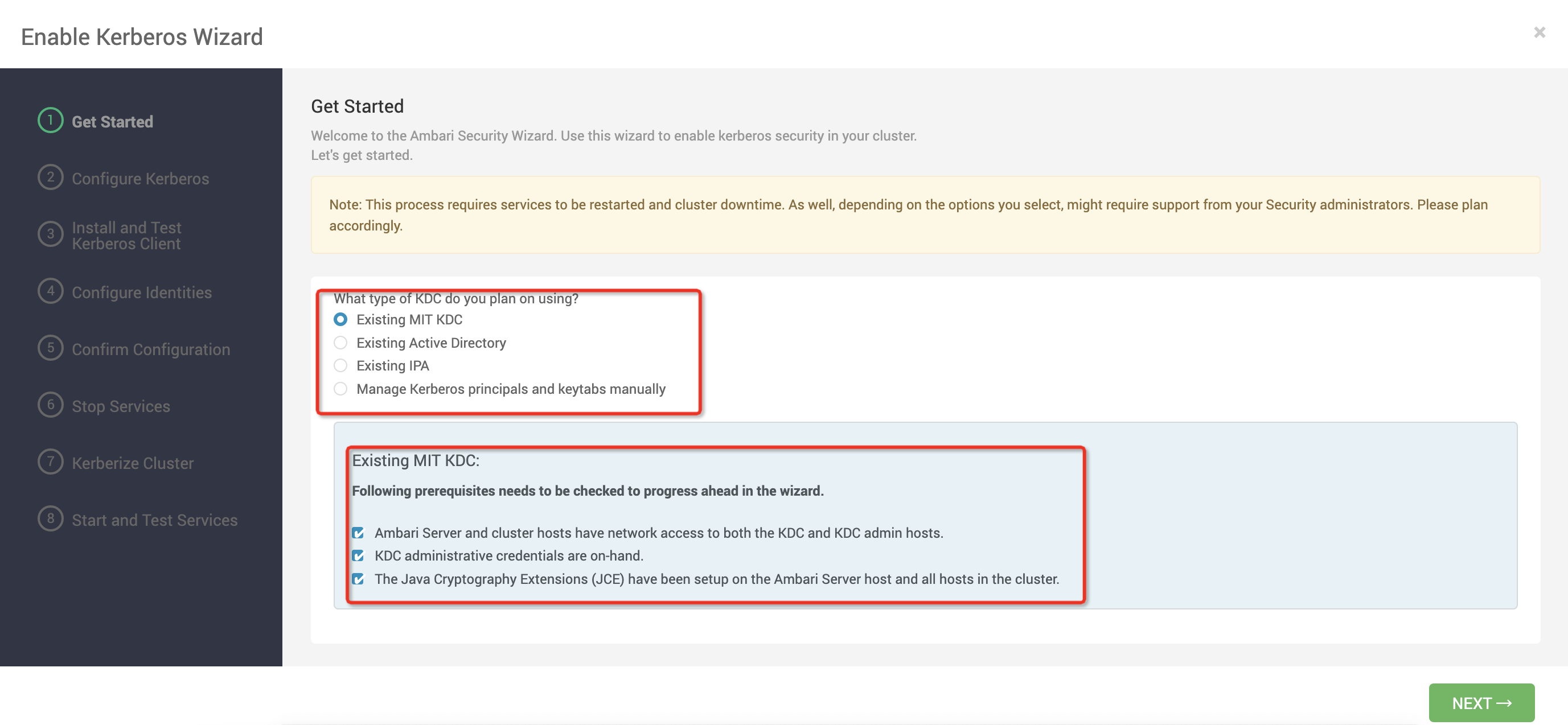

Enable Kerberos

Select the existing MIT KDC option

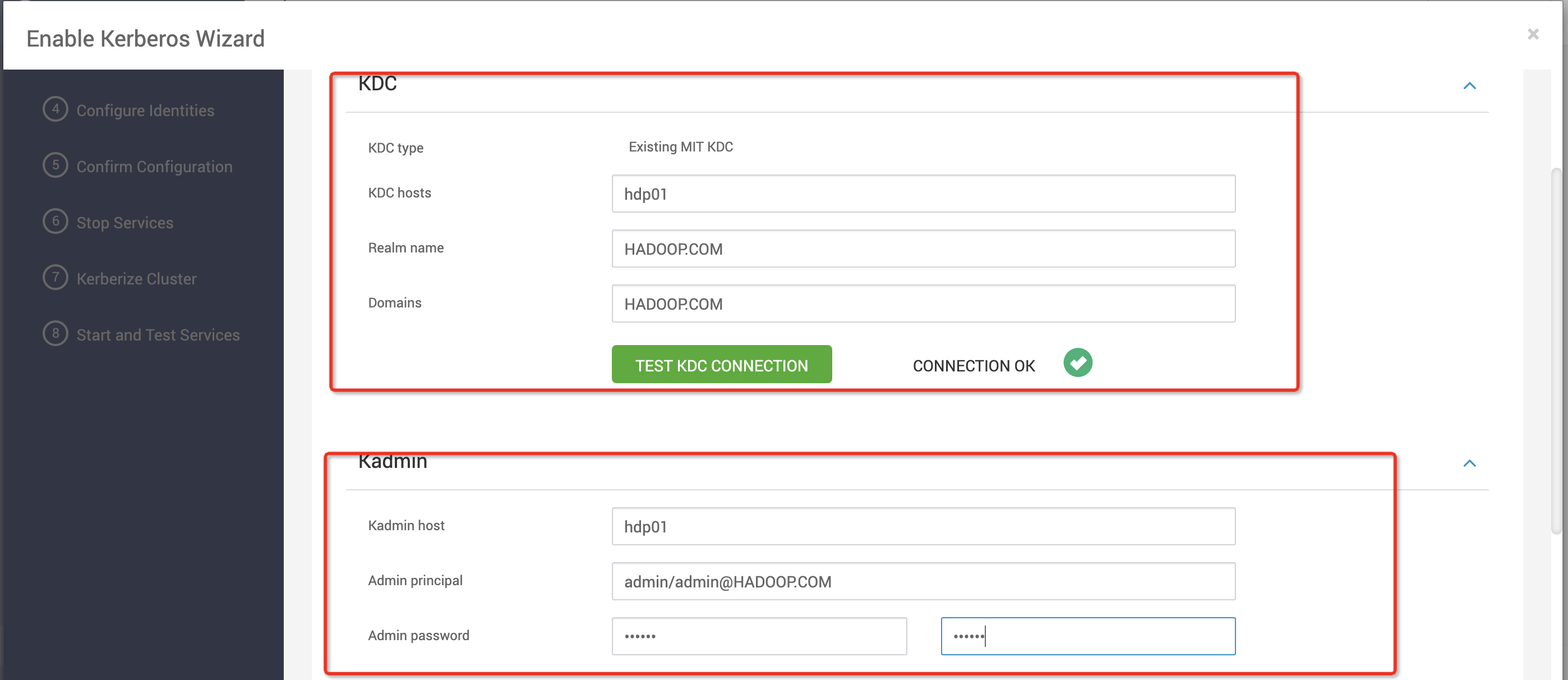

Configure the Kerberos KDC and kadmin

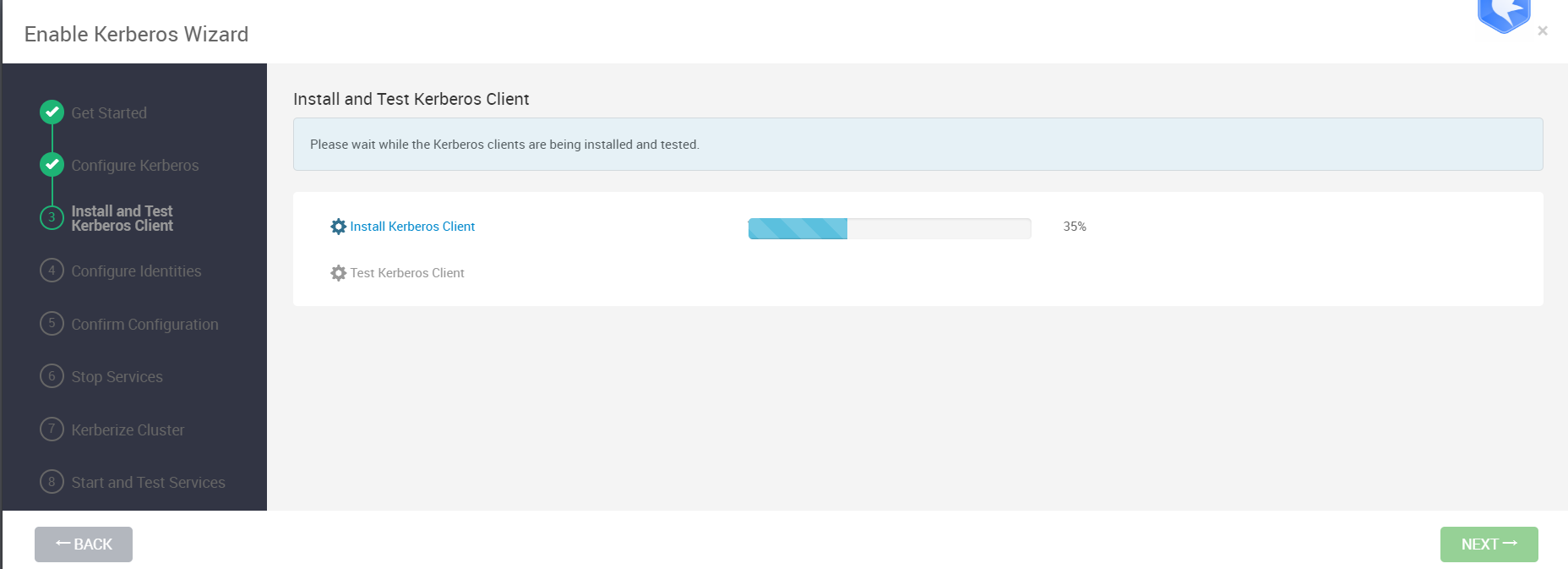

Install and test the Kerberos client

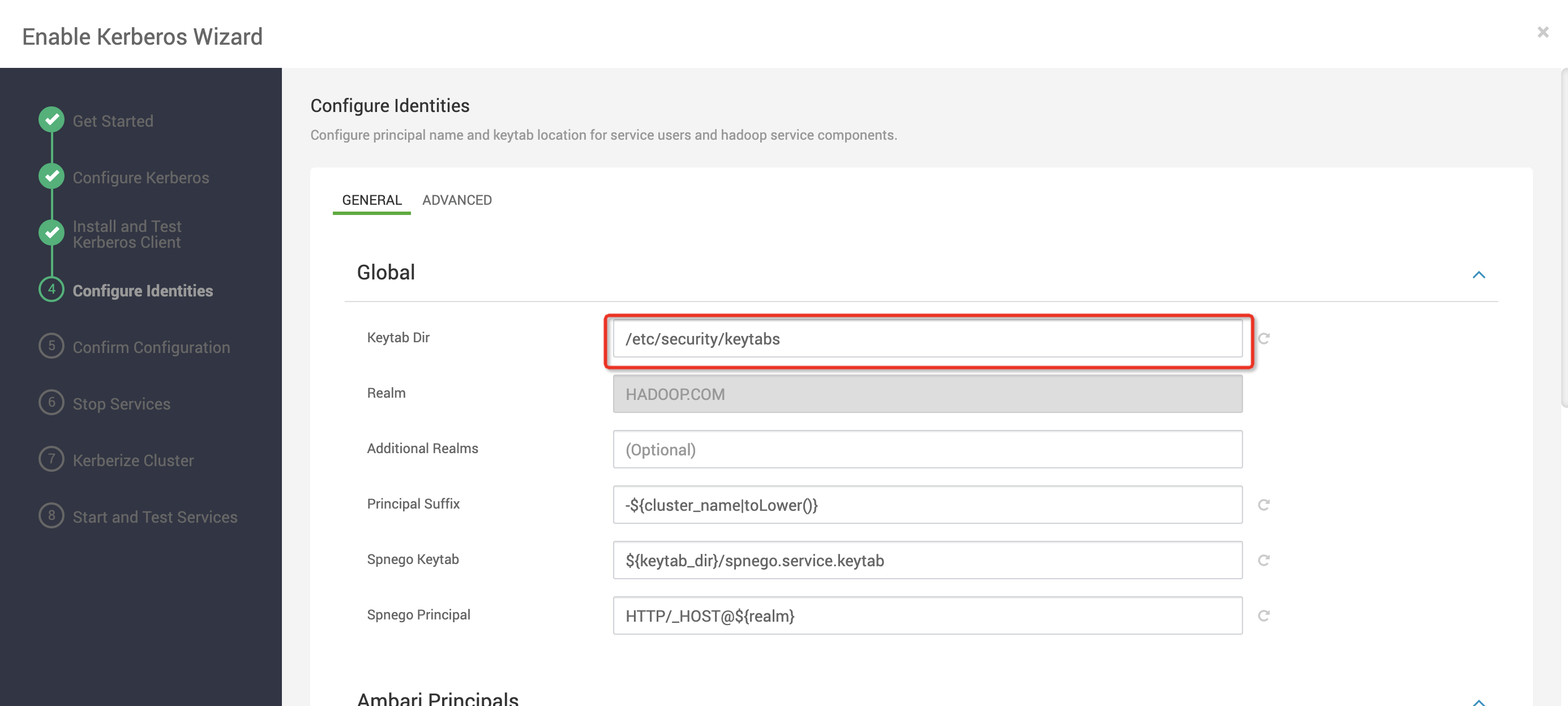

Configure the related settings

The first directory setting is where the generated keytabs will be stored later.

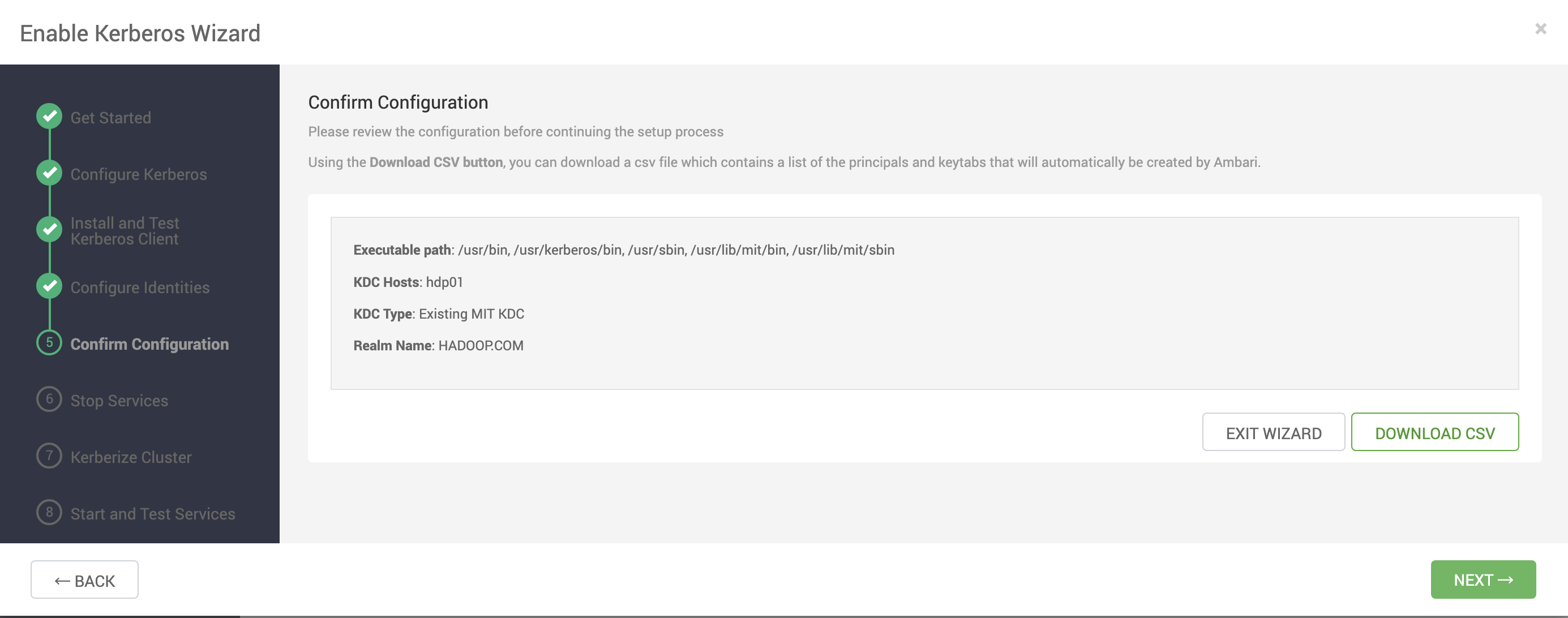

Confirm the configuration

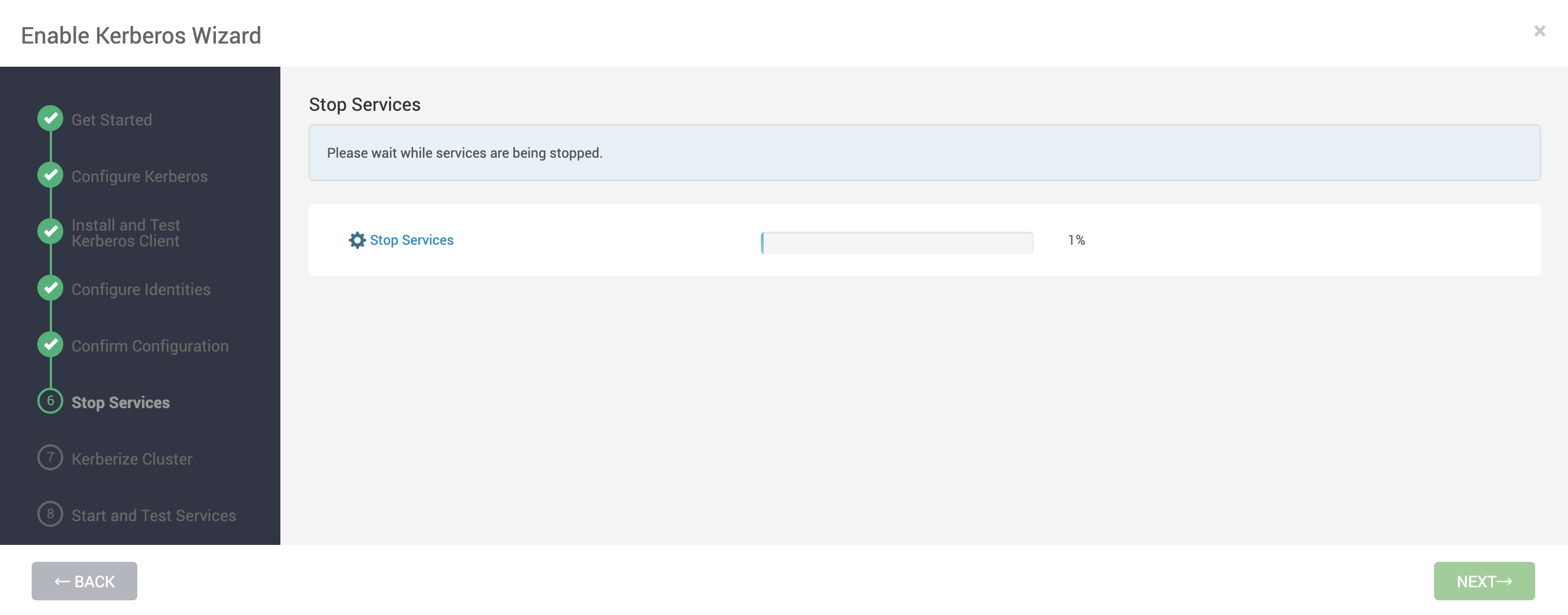

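Wait for the other services to stop

Kerberize the cluster

Prepare operations

Create principals

Create keytabs

Configure the Ambari identity

Distribute keytabs

Update configurations

Finalize operations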
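
Once the wizard finishes, the generated keytabs can be inspected on each node. The sketch below assumes the keytab directory is /etc/security/keytabs; use whatever directory was entered in the wizard. The function name `list_keytabs` is hypothetical.

```shell
# List the keytab files directly inside a directory, sorted by name.
list_keytabs() {
    find "$1" -maxdepth 1 -type f -name '*.keytab' | sort
}

# Usage (assumed directory; klist -kt prints the principals in each keytab):
#   for kt in $(list_keytabs /etc/security/keytabs); do
#       klist -kt "$kt"
#   done
```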