Integrating the Trino Component with Hudi (Part 4)

Installation and Deployment

This article assumes an environment where Trino is already deployed. It connects Trino to Hudi so that Trino can query Hudi tables.

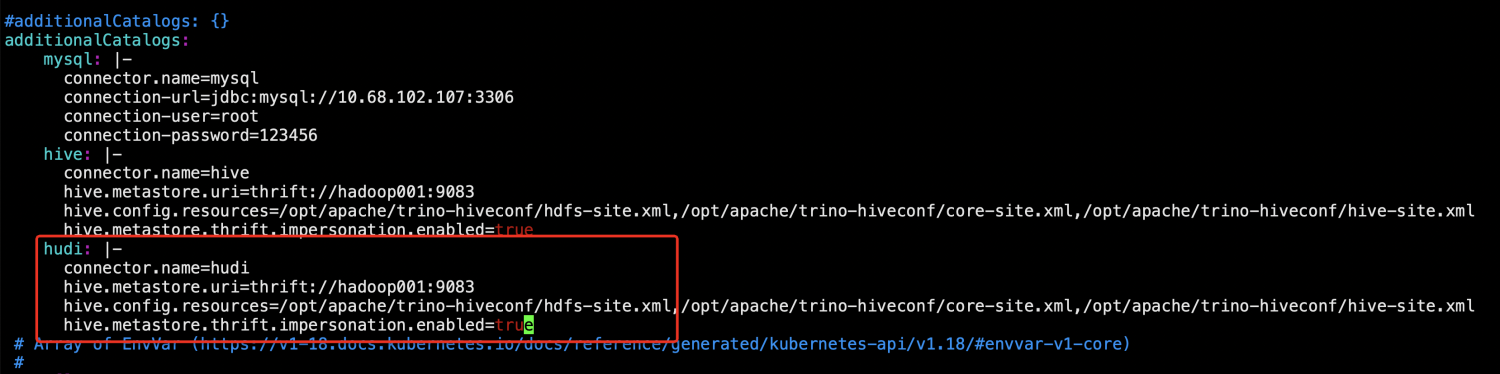

1. Add the Hudi connector configuration

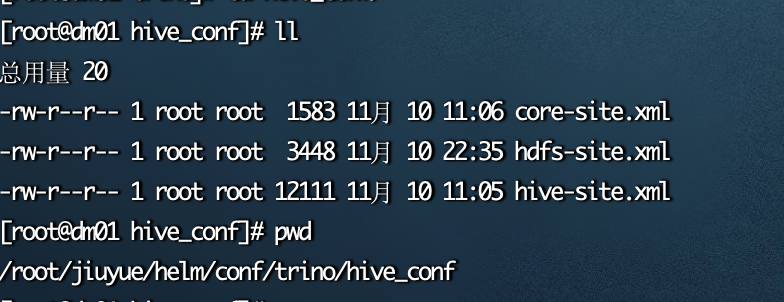

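In the etc/catalog directory of the Trino installation, create a hudi.properties file. Place the cluster's core-site.xml and hdfs-site.xml under etc and reference them in hudi.properties. Then distribute hudi.properties, core-site.xml, and hdfs-site.xml to every Trino node.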

    vim hudi.properties

    connector.name=hudi
    hive.metastore.uri=thrift://hadoop001:9083
    hive.config.resources=/opt/trino/etc/core-site.xml,/opt/trino/etc/hdfs-site.xml
    hive.metastore.thrift.impersonation.enabled=true
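The create-and-distribute step can also be scripted. The following is a minimal sketch, not the procedure used in this article: `TRINO_ETC` and `TRINO_NODES` are hypothetical variables introduced for illustration, and `TRINO_ETC` defaults to a scratch directory so the script can be tried without touching a real installation. The four properties are the ones listed above.

```shell
#!/usr/bin/env bash
set -eu

# TRINO_ETC and TRINO_NODES are hypothetical variables for this sketch.
# TRINO_ETC defaults to a scratch directory; set it to /opt/trino/etc on a real node.
TRINO_ETC="${TRINO_ETC:-$(mktemp -d)}"
TRINO_NODES="${TRINO_NODES:-}"   # space-separated host names; empty means no copy
CATALOG_FILE="$TRINO_ETC/catalog/hudi.properties"

mkdir -p "$TRINO_ETC/catalog"

# Write the same four properties shown in the article.
cat > "$CATALOG_FILE" <<'EOF'
connector.name=hudi
hive.metastore.uri=thrift://hadoop001:9083
hive.config.resources=/opt/trino/etc/core-site.xml,/opt/trino/etc/hdfs-site.xml
hive.metastore.thrift.impersonation.enabled=true
EOF

# Copy the catalog file and the Hadoop client configs to each listed node.
for node in $TRINO_NODES; do
  scp "$CATALOG_FILE" "$node:/opt/trino/etc/catalog/"
  scp "$TRINO_ETC/core-site.xml" "$TRINO_ETC/hdfs-site.xml" "$node:/opt/trino/etc/"
done

echo "wrote $CATALOG_FILE"
```

On a real cluster this would be run as, for example, `TRINO_ETC=/opt/trino/etc TRINO_NODES="node1 node2" ./deploy-hudi-catalog.sh`, where the host names and the script name are placeholders.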

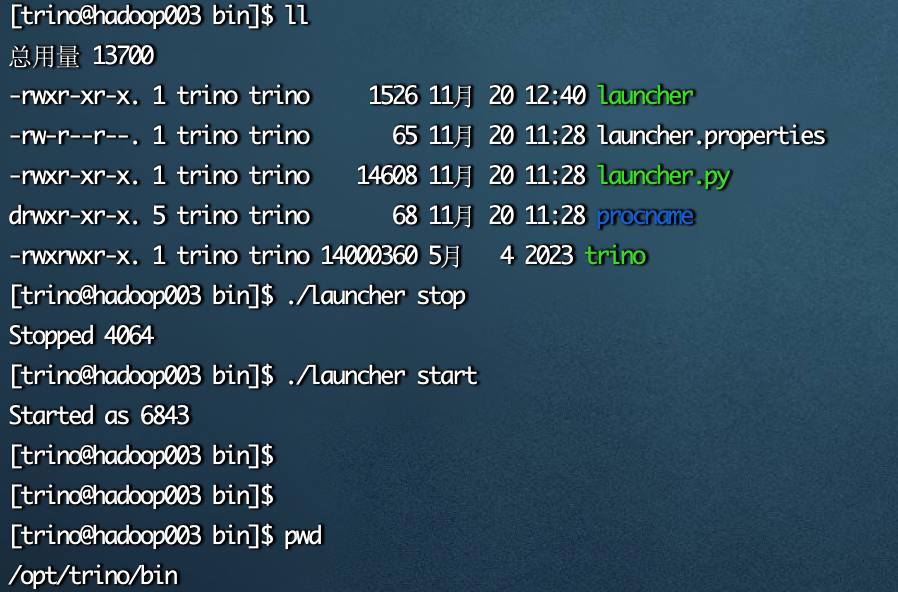

2. Restart Trino

    /opt/trino/bin/launcher stop
    /opt/trino/bin/launcher start

Smoke Test

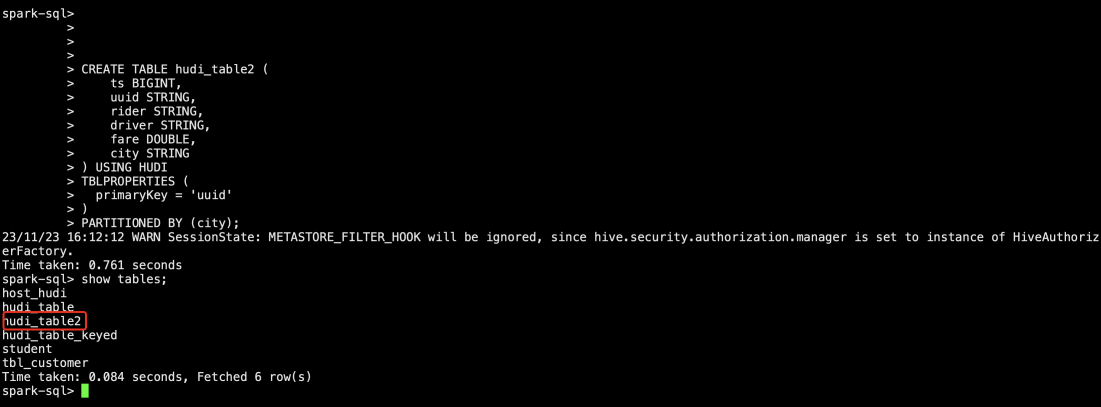

1. Create Hudi tables with Spark

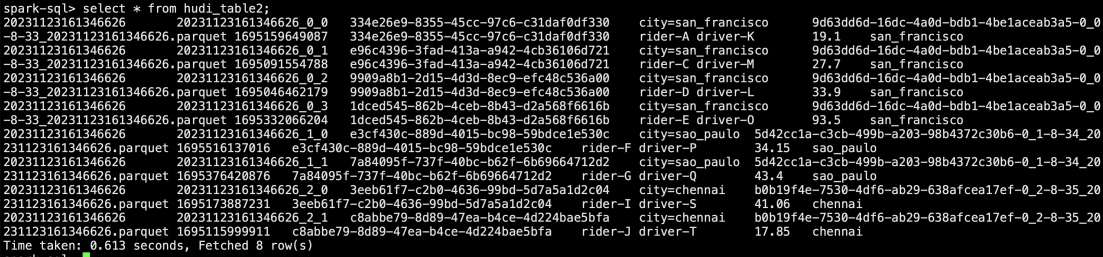

    export SPARK_VERSION=3.3
    # Change to the Spark installation directory first
    ./bin/spark-sql --packages org.apache.hudi:hudi-spark$SPARK_VERSION-bundle_2.12:0.14.0 \
      --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer' \
      --conf 'spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension' \
      --conf 'spark.sql.catalog.spark_catalog=org.apache.spark.sql.hudi.catalog.HoodieCatalog' \
      --conf 'spark.kryo.registrator=org.apache.spark.HoodieSparkKryoRegistrar' \
      --proxy-user=hive

At the spark-sql prompt, run:

    use test;

    CREATE TABLE hudi_table2 (
      ts BIGINT,
      uuid STRING,
      rider STRING,
      driver STRING,
      fare DOUBLE,
      city STRING
    ) USING HUDI
    TBLPROPERTIES (primaryKey = 'uuid')
    PARTITIONED BY (city);

    INSERT INTO hudi_table2 VALUES
    (1695159649087,'334e26e9-8355-45cc-97c6-c31daf0df330','rider-A','driver-K',19.10,'san_francisco'),
    (1695091554788,'e96c4396-3fad-413a-a942-4cb36106d721','rider-C','driver-M',27.70,'san_francisco'),
    (1695046462179,'9909a8b1-2d15-4d3d-8ec9-efc48c536a00','rider-D','driver-L',33.90,'san_francisco'),
    (1695332066204,'1dced545-862b-4ceb-8b43-d2a568f6616b','rider-E','driver-O',93.50,'san_francisco'),
    (1695516137016,'e3cf430c-889d-4015-bc98-59bdce1e530c','rider-F','driver-P',34.15,'sao_paulo'),
    (1695376420876,'7a84095f-737f-40bc-b62f-6b69664712d2','rider-G','driver-Q',43.40,'sao_paulo'),
    (1695173887231,'3eeb61f7-c2b0-4636-99bd-5d7a5a1d2c04','rider-I','driver-S',41.06,'chennai'),
    (1695115999911,'c8abbe79-8d89-47ea-b4ce-4d224bae5bfa','rider-J','driver-T',17.85,'chennai');

    CREATE TABLE fare_adjustment (
      ts BIGINT,
      uuid STRING,
      rider STRING,
      driver STRING,
      fare DOUBLE,
      city STRING
    ) USING HUDI
    TBLPROPERTIES (primaryKey = 'uuid')
    PARTITIONED BY (city);

    INSERT INTO fare_adjustment VALUES
    (1695091554788,'e96c4396-3fad-413a-a942-4cb36106d721','rider-C','driver-M',-2.70,'san_francisco'),
    (1695530237068,'3f3d9565-7261-40e6-9b39-b8aa784f95e2','rider-K','driver-U',64.20,'san_francisco'),
    (1695241330902,'ea4c36ff-2069-4148-9927-ef8c1a5abd24','rider-H','driver-R',66.60,'sao_paulo'),
    (1695115999911,'c8abbe79-8d89-47ea-b4ce-4d224bae5bfa','rider-J','driver-T',1.85,'chennai');

    DELETE FROM hudi_table2 WHERE uuid = '3f3d9565-7261-40e6-9b39-b8aa784f95e2';

2. Test with the Trino client

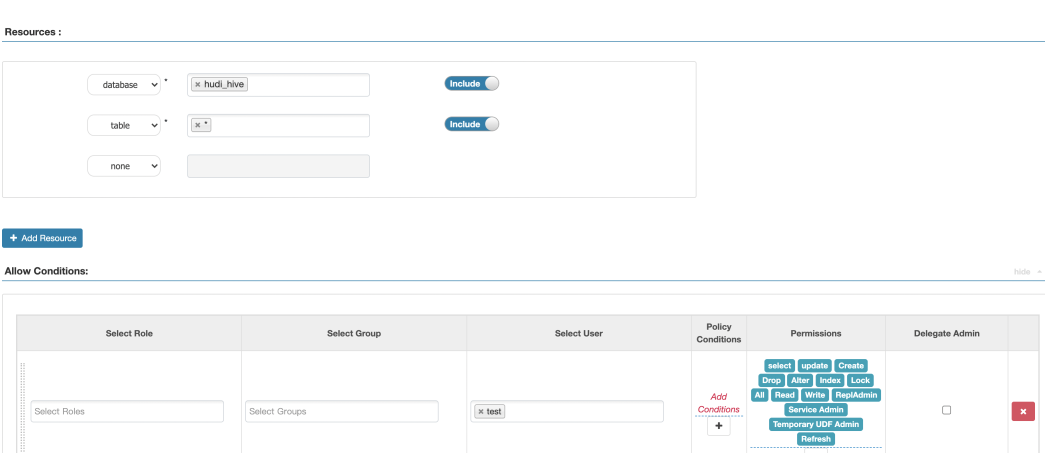

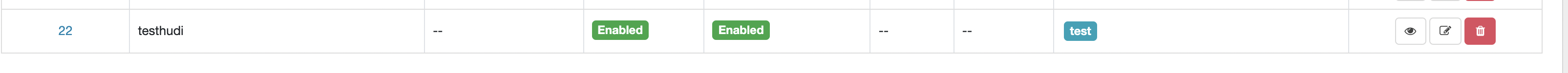

The test is run as the test user. Because the test user has no permission on the hudi_hive database, grant that permission first.

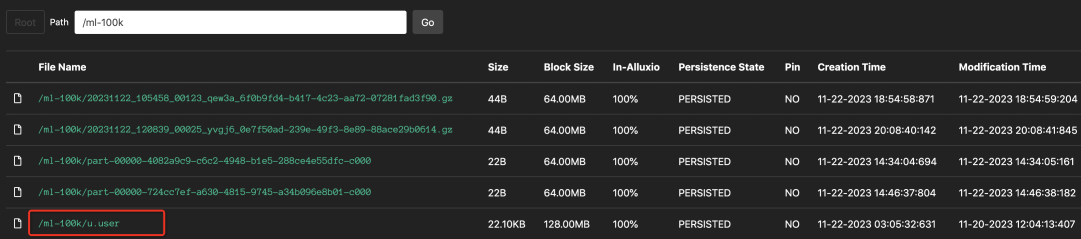

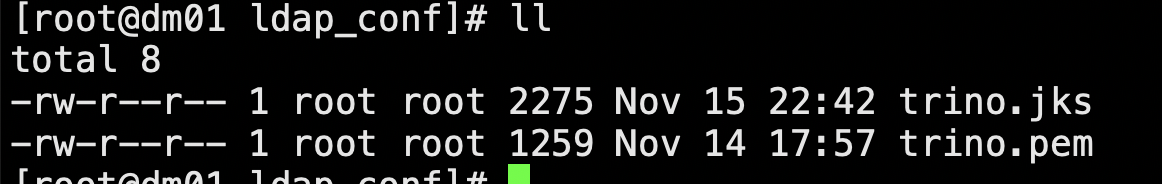

    /opt/trino/trino-cli --server https://172.16.121.0:8443 \
      --keystore-path /opt/trino/etc/trino.jks \
      --keystore-password admin@123 \
      --catalog hudi --user test --password --debug
    # Enter the password for the test user when prompted

    trino> use hudi_hive;
    USE
    trino:hudi_hive> show tables;
          Table
    ------------------
     fare_adjustment
     host_hudi
     hudi_table
     hudi_table2
     hudi_table_keyed
     student
     tbl_customer
    (7 rows)

    trino:hudi_hive> select * from hudi_table2;
     _hoodie_commit_time | _hoodie_commit_seqno  |          _hoodie_record_key          | _hoodie_partition_path | _hoodie_file_n
    ---------------------+-----------------------+--------------------------------------+------------------------+------------------------------------------
     20231123161346626   | 20231123161346626_1_0 | e3cf430c-889d-4015-bc98-59bdce1e530c | city=sao_paulo         | 5d42cc1a-c3cb-499b-a203-98b4372c30b6-0_1-
     20231123161346626   | 20231123161346626_1_1 | 7a84095f-737f-40bc-b62f-6b69664712d2 | city=sao_paulo         | 5d42cc1a-c3cb-499b-a203-98b4372c30b6-0_1-
     20231123161346626   | 20231123161346626_2_0 | 3eeb61f7-c2b0-4636-99bd-5d7a5a1d2c04 | city=chennai           | b0b19f4e-7530-4df6-ab29-638afcea17ef-0_2-
     20231123161346626   | 20231123161346626_2_1 | c8abbe79-8d89-47ea-b4ce-4d224bae5bfa | city=chennai           | b0b19f4e-7530-4df6-ab29-638afcea17ef-0_2-
     20231123161346626   | 20231123161346626_0_0 | 334e26e9-8355-45cc-97c6-c31daf0df330 | city=san_francisco     | 9d63dd6d-16dc-4a0d-bdb1-4be1aceab3a5-0_0-
     20231123161346626   | 20231123161346626_0_1 | e96c4396-3fad-413a-a942-4cb36106d721 | city=san_francisco     | 9d63dd6d-16dc-4a0d-bdb1-4be1aceab3a5-0_0-
     20231123161346626   | 20231123161346626_0_2 | 9909a8b1-2d15-4d3d-8ec9-efc48c536a00 | city=san_francisco     | 9d63dd6d-16dc-4a0d-bdb1-4be1aceab3a5-0_0-
     20231123161346626   | 20231123161346626_0_3 | 1dced545-862b-4ceb-8b43-d2a568f6616b | city=san_francisco     | 9d63dd6d-16dc-4a0d-bdb1-4be1aceab3a5-0_0-
    (8 rows)
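The interactive check above can also be run non-interactively. The sketch below is an assumption-laden example rather than part of the original test: `TRINO_CLI`, `EXPECTED_ROWS`, and `check_row_count` are hypothetical names, and the expected count of 8 is taken from the session output above. It uses the CLI's `--execute` option, and the query is skipped when the CLI binary is not present.

```shell
#!/usr/bin/env bash
set -eu

# TRINO_CLI and EXPECTED_ROWS are hypothetical names for this sketch.
TRINO_CLI="${TRINO_CLI:-/opt/trino/trino-cli}"
EXPECTED_ROWS=8   # hudi_table2 returned 8 rows in the session above

# Succeeds only when the observed row count equals the expected one.
check_row_count() {
  local expected="$1" actual="$2"
  if [ "$actual" = "$expected" ]; then
    echo "OK: $actual rows"
  else
    echo "MISMATCH: expected $expected rows, got $actual" >&2
    return 1
  fi
}

if [ -x "$TRINO_CLI" ]; then
  # With --password, the CLI uses TRINO_PASSWORD if it is set, otherwise it prompts.
  actual="$("$TRINO_CLI" \
    --server https://172.16.121.0:8443 \
    --keystore-path /opt/trino/etc/trino.jks \
    --keystore-password admin@123 \
    --catalog hudi --schema hudi_hive \
    --user test --password \
    --output-format CSV_UNQUOTED \
    --execute 'SELECT count(*) FROM hudi_table2')"
  check_row_count "$EXPECTED_ROWS" "$actual"
else
  echo "trino-cli not found at $TRINO_CLI, skipping query"
fi
```

Keeping the comparison in a small function makes it easy to reuse for other tables, such as fare_adjustment, by changing the query and the expected count.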

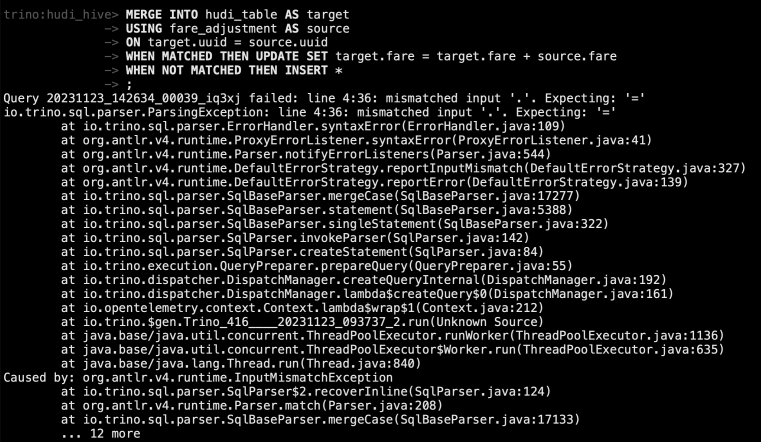

MERGE: not supported; Trino rejects the syntax.

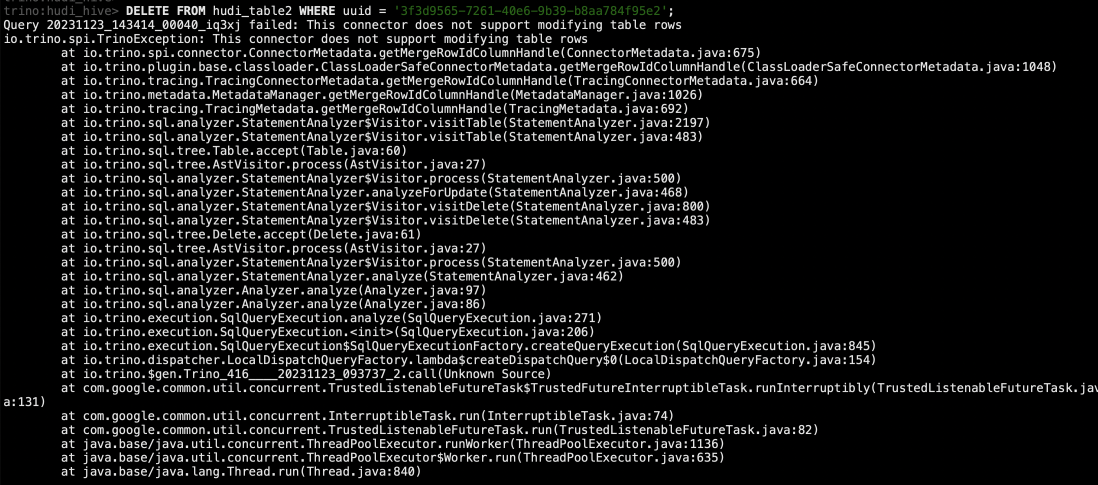

DELETE: the Trino Hudi connector does not support modifying table rows.

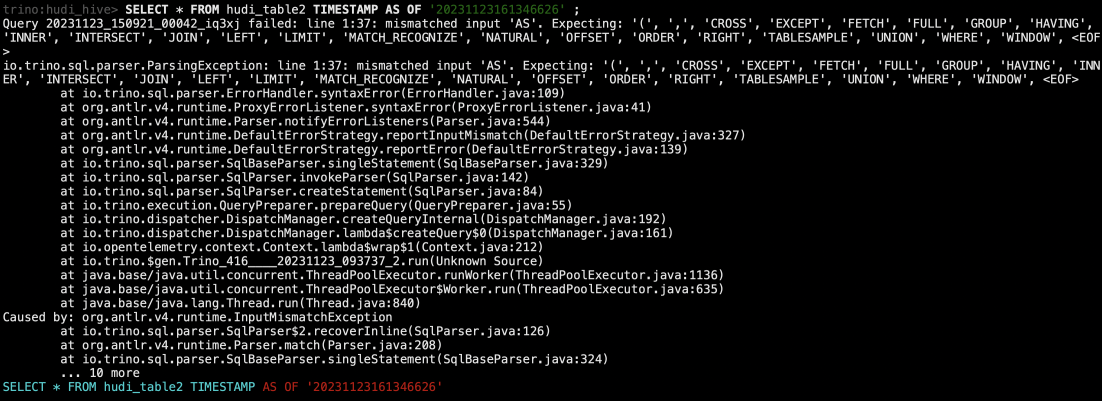

Time travel: the syntax is not supported.